Examples of Markov chains

A game of snakes and ladders, or any other game whose moves are determined entirely by dice, is a Markov chain; indeed, it is an absorbing Markov chain.

In the above-mentioned dice games, the only thing that matters is the current state of the board.

In a card game such as blackjack, by contrast, a player can gain an advantage by remembering which cards have already been shown (and hence which cards are no longer in the deck), so the next state (or hand) of the game is not independent of the past states.

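Consider a random walk on the number line where, at each step, the position (call it x) may change by +1 (to the right) or −1 (to the left) with probabilities:

$$P_{\text{move left}} = \frac{1}{2} + \frac{1}{2}\left(\frac{x}{c + |x|}\right)$$

$$P_{\text{move right}} = 1 - P_{\text{move left}}$$

(where c is a constant greater than 0).

For example, if the constant, c, equals 1, the probabilities of a move to the left at positions x = −2, −1, 0, 1, 2 are given by 1/6, 1/4, 1/2, 3/4, 5/6 respectively.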

The random walk has a centering effect that weakens as c increases.

Since the probabilities depend only on the current position (value of x) and not on any prior positions, this biased random walk satisfies the definition of a Markov chain.
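The dependence on the current position alone can be illustrated with a minimal Python sketch. It assumes the left-move probability has the form 1/2 + x / (2(c + |x|)); the function names `p_left` and `step_distribution` are hypothetical and chosen only for this illustration.

```python
from fractions import Fraction


def p_left(x: int, c: int = 1) -> Fraction:
    """Probability of moving left from position x.

    Assumed form: 1/2 + (1/2) * x / (c + |x|), with c > 0.
    """
    return Fraction(1, 2) + Fraction(1, 2) * Fraction(x, c + abs(x))


def step_distribution(x: int, c: int = 1) -> dict:
    """Distribution of the next position, given only the current position x."""
    left = p_left(x, c)
    return {x - 1: left, x + 1: 1 - left}


if __name__ == "__main__":
    # Left-move probabilities around the origin for c = 1.
    for x in range(-2, 3):
        print(x, p_left(x))
```

Because `step_distribution` takes only the current position as input, no history of earlier positions can influence the next step. Calling `p_left(2, 10)` instead of `p_left(2, 1)` gives a value closer to 1/2, which is the weakening of the centering effect for larger c.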

Suppose that one starts with $10 and wagers $1 on each toss of a fair coin, either indefinitely or until all of the money is lost.

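If one has $12 now, the best guess is that one will have either $11 or $13 after the next toss, with even odds. The fact that this guess is not improved by the knowledge of earlier tosses showcases the Markov property, the memoryless property of a stochastic process.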
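A minimal simulation sketch of this wager follows. The function names, the fixed seed, and the cap on the number of tosses are assumptions made so that the example terminates and is reproducible; they are not part of the original description.

```python
import random


def next_fortune(current: int, rng: random.Random) -> int:
    """Fortune after one more toss, computed from the current fortune only."""
    if current == 0:
        return 0  # all money is lost: absorbing state
    return current + (1 if rng.random() < 0.5 else -1)


def simulate(start: int = 10, max_tosses: int = 1000, seed: int = 0) -> list:
    """Simulate the wager, stopping at ruin or after max_tosses (assumed cap)."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(max_tosses):
        path.append(next_fortune(path[-1], rng))
        if path[-1] == 0:
            break
    return path


if __name__ == "__main__":
    print(simulate()[:20])
```

Note that `next_fortune` never looks at the earlier entries of `path`; this is the Markov property expressed as a function signature.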

[2] Markov chose 20,000 letters from Pushkin’s Eugene Onegin, classified them into vowels and consonants, and counted the transition probabilities.
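The counting procedure can be sketched in Python as follows. This is an illustration only: it uses the five English vowel letters rather than the Russian alphabet of the original text, treats every other letter as a consonant, and the function names are hypothetical.

```python
from collections import Counter

VOWELS = set("aeiou")  # assumption: English vowels, for illustration only


def classify(text: str) -> list:
    """Map each letter to 'V' (vowel) or 'C' (consonant); skip non-letters."""
    return ["V" if ch in VOWELS else "C" for ch in text.lower() if ch.isalpha()]


def transition_probabilities(text: str) -> dict:
    """Estimate P(next class | current class) from adjacent letter pairs."""
    seq = classify(text)
    counts = Counter(zip(seq, seq[1:]))
    probs = {}
    for a in "VC":
        total = counts[(a, "V")] + counts[(a, "C")]
        if total:
            probs[a] = {b: counts[(a, b)] / total for b in "VC"}
    return probs


if __name__ == "__main__":
    print(transition_probabilities("a sample sentence used for counting"))
```

Each row of the result sums to 1, so the output can be read directly as an estimated two-state transition matrix.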

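Suppose the weather on day 0 (today) is known to be sunny. This is represented by an initial state vector in which the "sunny" entry is 100%, and the "rainy" entry is 0%:

$$\mathbf{x}^{(0)} = \begin{bmatrix} 1 & 0 \end{bmatrix}$$

The weather on day 1 (tomorrow) can be predicted by multiplying the state vector from day 0 by the transition matrix P, whose rows give the probabilities of a sunny or rainy day following a sunny day (0.9, 0.1) and following a rainy day (0.5, 0.5):

$$\mathbf{x}^{(1)} = \mathbf{x}^{(0)} P = \begin{bmatrix} 1 & 0 \end{bmatrix} \begin{bmatrix} 0.9 & 0.1 \\ 0.5 & 0.5 \end{bmatrix} = \begin{bmatrix} 0.9 & 0.1 \end{bmatrix}$$

Thus, there is a 90% chance that day 1 will also be sunny.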

[5] The steady state vector is defined as:

$$\mathbf{q} = \lim_{n \to \infty} \mathbf{x}^{(n)}$$

where x^(n) is the state vector on day n. The limit is a strictly positive vector only if P is a regular transition matrix (that is, there is at least one power P^n with all non-zero entries).

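[6] For the weather example, the steady state vector q = [q_1 q_2] must be unchanged when multiplied by P, and we can use this to set up a matrix equation:

$$\begin{bmatrix} q_1 & q_2 \end{bmatrix} \begin{bmatrix} 0.9 & 0.1 \\ 0.5 & 0.5 \end{bmatrix} = \begin{bmatrix} q_1 & q_2 \end{bmatrix}$$

and since q is a probability vector we know that

$$q_1 + q_2 = 1$$

Solving this pair of simultaneous equations gives the steady state vector:

$$\begin{bmatrix} q_1 & q_2 \end{bmatrix} \approx \begin{bmatrix} 0.833 & 0.167 \end{bmatrix}$$

In conclusion, in the long term about 83.3% of days are sunny.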
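The weather calculation can be checked numerically with a short sketch in plain Python. It assumes a transition matrix with a sunny row of (0.9, 0.1) and a rainy row of (0.5, 0.5); the rainy row is an assumption chosen to be consistent with the 83.3% figure. The steady state is approximated by repeated multiplication rather than by solving the equations, and the helper names are hypothetical.

```python
def step(x, P):
    """Advance one day: multiply row vector x by transition matrix P."""
    return [sum(x[i] * P[i][j] for i in range(len(x))) for j in range(len(P[0]))]


def steady_state(P, x0, iterations=100):
    """Approximate the steady state by iterating x <- xP (assumes P is regular)."""
    x = list(x0)
    for _ in range(iterations):
        x = step(x, P)
    return x


# States ordered (sunny, rainy); the rainy row is an assumed value.
P = [[0.9, 0.1],
     [0.5, 0.5]]

day0 = [1.0, 0.0]          # today is known to be sunny
day1 = step(day0, P)       # forecast for tomorrow
q = steady_state(P, day0)  # long-run distribution

if __name__ == "__main__":
    print("day 1:", day1)
    print("steady state:", q)
```

Starting the iteration from a rainy day, `steady_state(P, [0.0, 1.0])`, leads to the same long-run vector, which illustrates that the steady state does not depend on the initial conditions.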

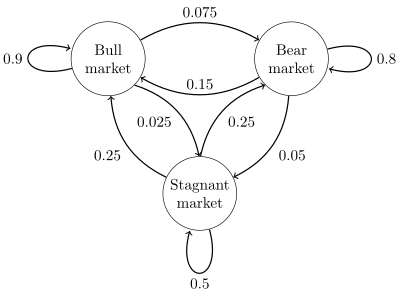

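Labeling the state space {1 = bull, 2 = bear, 3 = stagnant}, the transition matrix for this example is

$$P = \begin{bmatrix} 0.9 & 0.075 & 0.025 \\ 0.15 & 0.8 & 0.05 \\ 0.25 & 0.25 & 0.5 \end{bmatrix}$$

The distribution over states can be written as a stochastic row vector x with the relation x^(n+1) = x^(n) P. So if at time n the system is in state x^(n), then three time periods later, at time n + 3, the distribution is

$$x^{(n+3)} = x^{(n+2)} P = \left(x^{(n+1)} P\right) P = \left(x^{(n)} P\right) P^2 = x^{(n)} P^3$$

In particular, if at time n the system is in state 2 (bear), then at time n + 3 the distribution is

$$x^{(n+3)} = \begin{bmatrix} 0 & 1 & 0 \end{bmatrix} P^3 = \begin{bmatrix} 0.3575 & 0.56825 & 0.07425 \end{bmatrix}$$

Using the transition matrix it is possible to calculate, for example, the long-term fraction of weeks during which the market is stagnant, or the average number of weeks it will take to go from a stagnant to a bull market.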
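A sketch of the three-step calculation and of the long-term stagnant fraction is given below. The matrix entries are assumed values for the bull, bear, and stagnant transitions and should be checked against the state diagram; the long-run distribution is approximated by iterating the relation x^(n+1) = x^(n) P many times, and the function names are hypothetical.

```python
def vec_mat(x, P):
    """Multiply row vector x by matrix P."""
    return [sum(x[i] * P[i][j] for i in range(len(x))) for j in range(len(P[0]))]


def distribution_after(x, P, steps):
    """Distribution after the given number of time periods, i.e. x P^steps."""
    for _ in range(steps):
        x = vec_mat(x, P)
    return x


# Rows and columns ordered (bull, bear, stagnant); entries are assumed values.
P = [[0.90, 0.075, 0.025],
     [0.15, 0.800, 0.050],
     [0.25, 0.250, 0.500]]

bear = [0.0, 1.0, 0.0]
x3 = distribution_after(bear, P, 3)          # three weeks after a bear week
long_run = distribution_after(bear, P, 500)  # approximate stationary distribution

if __name__ == "__main__":
    print("after 3 weeks:", x3)
    print("long-run fraction of stagnant weeks:", long_run[2])
```

Under these assumed entries, the three-week distribution is approximately (0.3575, 0.56825, 0.07425) and the long-run fraction of stagnant weeks is approximately 0.0625.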

Assuming a sequence of independent and identically distributed input signals (for example, symbols from a binary alphabet chosen by coin tosses), if the machine is in state y at time n, then the probability that it moves to state x at time n + 1 depends only on the current state.

If one pops one hundred kernels of popcorn in an oven, each kernel popping at an independent, exponentially distributed time, then this would be a continuous-time Markov process.

If X_t denotes the number of kernels that have popped up to time t, the problem can be defined as finding the number of kernels that will pop by some later time.

The only thing one needs to know is the number of kernels that have popped prior to time t.
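The popcorn process can be sketched as follows. The rate parameter, the seed, and the function names are assumptions made for illustration; the original does not specify a rate. The second function relies on the memoryless property of the exponential distribution: each unpopped kernel pops within the next dt time units with probability 1 − exp(−rate · dt), regardless of how long it has already been heating.

```python
import math
import random


def pop_times(n_kernels=100, rate=1.0, seed=0):
    """Sample an independent exponential popping time for each kernel."""
    rng = random.Random(seed)
    return sorted(rng.expovariate(rate) for _ in range(n_kernels))


def popped_by(times, t):
    """X_t: the number of kernels that have popped up to time t."""
    return sum(1 for s in times if s <= t)


def further_pops(already_popped, n_kernels, rate, dt, rng):
    """Sample how many more kernels pop in the next dt time units.

    Only the current count is needed, not the times of earlier pops.
    """
    p = 1.0 - math.exp(-rate * dt)
    remaining = n_kernels - already_popped
    return sum(1 for _ in range(remaining) if rng.random() < p)


if __name__ == "__main__":
    times = pop_times()
    x_t = popped_by(times, 1.0)
    print("popped by t = 1:", x_t)
    print("sampled further pops in next 0.5:",
          further_pops(x_t, 100, 1.0, 0.5, random.Random(1)))
```

The signature of `further_pops` makes the point of the example explicit: the current count is the only information about the past that it receives.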