Gamma-ray burst emission mechanisms

Gamma-ray burst emission mechanisms are theories that explain how the energy from a gamma-ray burst progenitor (regardless of the actual nature of the progenitor) is turned into radiation.

Neither the light curves nor the early-time spectra of GRBs show resemblance to the radiation emitted by any familiar physical process.

GRBs vary on such short timescales (as short as milliseconds) that the size of the emitting region must be very small, or else the time delay due to the finite speed of light would "smear" the emission out in time, wiping out any short-timescale behavior.
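The light-travel-time argument above can be put into numbers. The following is a minimal sketch, assuming a source at rest relative to the observer and the simple order-of-magnitude bound R < c·δt; the function name and the 1 ms example value are illustrative choices, not part of any established code.

```python
C = 299_792_458.0  # speed of light in m/s


def max_source_size(variability_timescale_s: float) -> float:
    """Upper limit (metres) on the emitting-region size for a source at rest.

    Assumes the simple light-crossing bound R < c * dt (order of magnitude only).
    """
    return C * variability_timescale_s


# Illustrative value: millisecond variability
size_m = max_source_size(1e-3)
print(f"R < {size_m / 1e3:.0f} km")
```

For millisecond variability the bound comes out at a few hundred kilometres, which is what makes the compactness problem described next so severe.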

At the energies involved in a typical GRB, so much energy crammed into such a small space would make the system opaque to its own gamma rays through photon-photon pair production, making the burst far less luminous and giving it a very different spectrum from what is observed.

However, if the emitting system is moving towards Earth at relativistic velocities, the burst is compressed in time (as seen by an Earth observer, due to the relativistic Doppler effect) and the emitting region inferred from the finite speed of light becomes much smaller than the true size of the GRB (see relativistic beaming).
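As a rough illustration of how much relativistic motion relaxes the size limit, the sketch below uses the commonly quoted estimate R ~ 2Γ²·c·δt for a source approaching the observer with bulk Lorentz factor Γ. The factor of 2 is convention-dependent and of order unity, and the values Γ = 100 and δt = 1 ms are assumptions chosen only for the example.

```python
C = 299_792_458.0  # speed of light in m/s


def relativistic_source_size(variability_timescale_s: float, lorentz_factor: float) -> float:
    """Approximate allowed emitting radius (metres) for a source moving toward the observer.

    Assumes R ~ 2 * Gamma^2 * c * dt; the prefactor is an order-unity convention.
    """
    return 2.0 * lorentz_factor**2 * C * variability_timescale_s


naive = C * 1e-3                                  # size inferred while ignoring bulk motion
relaxed = relativistic_source_size(1e-3, 100.0)   # hypothetical Gamma = 100
print(f"naive: {naive:.2e} m, with Gamma = 100: {relaxed:.2e} m")
```

With these assumed numbers the permitted size grows by a factor of 2Γ², which dilutes the photon density enough to ease the pair-production opacity problem.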

If the GRB were due to matter moving towards Earth (as the relativistic motion argument requires), it is hard to understand why it would release its energy in such brief episodes.

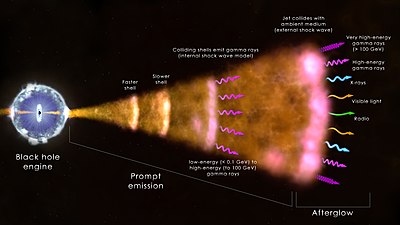

The generally accepted explanation for this is that these bursts involve the collision of multiple shells traveling at slightly different velocities; so-called "internal shocks".[1]

The collision of two thin shells flash-heats the matter, converting enormous amounts of kinetic energy into the random motion of particles, greatly amplifying the energy release due to all emission mechanisms.
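How much kinetic energy such a collision can convert into random particle motion follows from energy and momentum conservation. The sketch below treats the two shells as point-like masses that merge in a perfectly inelastic collision, which is a simplifying assumption. The function name, the units (c = 1, arbitrary mass units) and the example Lorentz factors are hypothetical. Note that shells with very different Lorentz factors still differ only slightly in velocity, since both move at nearly the speed of light.

```python
import math


def merge_shells(m1: float, gamma1: float, m2: float, gamma2: float):
    """Perfectly inelastic merger of two shells moving along the same line.

    Rest masses are in arbitrary units with c = 1. Returns (gamma_merged, efficiency),
    where efficiency is the fraction of the total energy converted into internal
    (random-motion) energy.
    """
    def momentum(m: float, gamma: float) -> float:
        return m * math.sqrt(gamma**2 - 1.0)  # m * gamma * beta

    energy = m1 * gamma1 + m2 * gamma2
    total_momentum = momentum(m1, gamma1) + momentum(m2, gamma2)
    # Invariant mass of the merged shell; it exceeds m1 + m2 because it includes heat
    merged_mass = math.sqrt(energy**2 - total_momentum**2)
    gamma_merged = energy / merged_mass
    efficiency = 1.0 - (m1 + m2) / merged_mass
    return gamma_merged, efficiency


# Illustrative case: equal-mass shells, the faster one catching up with the slower one
gamma_m, eff = merge_shells(1.0, 100.0, 1.0, 400.0)
print(f"merged Lorentz factor ~ {gamma_m:.0f}, efficiency ~ {eff:.0%}")
```

In this toy case roughly a fifth of the total energy is thermalized. The efficiency falls rapidly as the two Lorentz factors approach each other, so the result depends strongly on the assumed contrast between shells.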

Which physical mechanisms are at play in producing the observed photons is still an area of debate, but the most likely candidates appear to be synchrotron radiation and inverse Compton scattering.

A few gamma-ray bursts have shown evidence for an additional, delayed emission component at very high energies (GeV and higher).

If a GRB progenitor, such as a Wolf-Rayet star, were to explode within a stellar cluster, the resulting shock wave could generate gamma-rays by scattering photons from neighboring stars.

In general, by the time of the afterglow, all the ejected matter has coalesced into a single shell traveling outward into the interstellar medium (or possibly the stellar wind) around the star.

At the front of this shell of matter is a shock wave, referred to as the "external shock",[4] formed as the still relativistically moving matter ploughs into the tenuous interstellar gas or the gas surrounding the star.

As the interstellar matter moves across the shock, it is immediately heated to extreme temperatures.

These particles, now relativistically moving, encounter a strong local magnetic field and are accelerated perpendicular to the magnetic field, causing them to radiate their energy via synchrotron radiation.

Synchrotron radiation is well understood, and the afterglow spectrum has been modeled fairly successfully using this template.
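One widely used form of such a template is a broken power law. The sketch below assumes the "slow cooling" ordering (characteristic frequency ν_m below cooling frequency ν_c), ignores synchrotron self-absorption at low frequencies, and takes an electron energy index p = 2.5. All of these are assumptions made for illustration, and the function name is hypothetical.

```python
def synchrotron_flux(nu: float, nu_m: float, nu_c: float, f_max: float, p: float = 2.5) -> float:
    """Flux density of a slow-cooling synchrotron spectrum (no self-absorption).

    nu_m: characteristic frequency of the lowest-energy electrons
    nu_c: cooling frequency (must exceed nu_m in this regime)
    f_max: peak flux density, reached at nu = nu_m
    p: power-law index of the electron energy distribution (assumed value)
    """
    if not nu_m < nu_c:
        raise ValueError("this sketch only covers the slow-cooling case nu_m < nu_c")
    if nu < nu_m:
        return f_max * (nu / nu_m) ** (1.0 / 3.0)
    if nu < nu_c:
        return f_max * (nu / nu_m) ** (-(p - 1.0) / 2.0)
    # Above the cooling break the slope steepens by 1/2
    return f_max * (nu_c / nu_m) ** (-(p - 1.0) / 2.0) * (nu / nu_c) ** (-p / 2.0)


# Hypothetical break frequencies in Hz and a peak flux in arbitrary units
for nu in (1e11, 1e13, 1e15, 1e17):
    print(f"{nu:.0e} Hz -> {synchrotron_flux(nu, 1e13, 1e16, 1.0):.3e}")
```

Each segment is normalized so that the spectrum is continuous at both breaks. Fitting an observed afterglow then amounts to locating the breaks and the peak flux.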

For the simplest case of adiabatic expansion into a uniform-density medium, the critical parameters evolve as:
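The scalings usually quoted for this case in the standard synchrotron afterglow model are given here for reference. The symbols are assumptions not defined elsewhere in this text: ν_m is the characteristic synchrotron frequency of the lowest-energy electrons, ν_c is the cooling frequency, F_{ν,max} is the peak flux density, and t is the time since the burst as measured by the observer.

```latex
\begin{aligned}
\nu_c &\propto t^{-1/2}, \\
\nu_m &\propto t^{-3/2}, \\
F_{\nu,\max} &= \text{const}.
\end{aligned}
```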

Different scalings are derived for radiative evolution and for a non-constant-density environment (such as a stellar wind), but share the general power-law behavior observed in this case.

Material shocked a second time, by a reverse shock propagating back into the ejecta,[7][8] can produce a bright optical/UV flash, which has been seen in a few GRBs,[9] though it appears not to be a common phenomenon.

Prolonged activity of the central engine has been invoked to explain the frequent flares seen in X-rays and at other wavelengths in many bursts, though some theorists are uncomfortable with the apparent demand that the progenitor (which one would think would be destroyed by the GRB) remain active for so long.

Gamma-ray burst emission is believed to be released in jets, not spherical shells.

Second, relativistic beaming effects subside, and once Earth observers see the entire jet, the widening of the relativistic beam is no longer compensated by an increase in the visible emitting region.
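A rough estimate of when the whole jet comes into view can be sketched as follows. The sketch assumes that radiation is beamed into a cone of half-angle about 1/Γ, that the entire jet becomes visible once 1/Γ reaches the jet half-opening angle, and that Γ declines with observer time as t^(-3/8), the scaling for adiabatic expansion into a uniform medium. The function name and the numerical values are hypothetical.

```python
def jet_break_time(t_ref_s: float, gamma_ref: float, theta_jet_rad: float) -> float:
    """Observer time (seconds) at which 1/Gamma equals the jet half-opening angle.

    Assumes Gamma(t) = gamma_ref * (t / t_ref_s) ** (-3/8), i.e. adiabatic
    deceleration in a uniform-density medium.
    """
    return t_ref_s * (gamma_ref * theta_jet_rad) ** (8.0 / 3.0)


# Illustrative values: Gamma = 100 at t = 100 s, jet half-opening angle 0.1 rad
t_break = jet_break_time(100.0, 100.0, 0.1)
print(f"whole jet visible after ~{t_break / 86400:.2f} days")
```

With these assumed inputs the transition falls at roughly half a day. The strong dependence on the opening angle (power 8/3) means small changes in jet geometry shift it considerably.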

Many GRB afterglows do not display jet breaks, especially in X-rays; breaks are more common in optical light curves.

However, because jet breaks generally occur at very late times (~1 day or more), when the afterglow is quite faint and often undetectable, this is not necessarily surprising.

There may be dust along the line of sight from the GRB to Earth, both in the host galaxy and in the Milky Way.

At very high frequencies (far-ultraviolet and X-ray) interstellar hydrogen gas becomes a significant absorber.

In particular, a photon with a wavelength of less than 91 nanometers is energetic enough to completely ionize neutral hydrogen and is absorbed with almost 100% probability even through relatively thin gas clouds.
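The 91 nm figure can be checked against the ionization energy of hydrogen (about 13.6 eV) using E = hc/λ. This is a small sketch using the exact SI values of the constants; the function name is illustrative, and 91.2 nm is used as a slightly more precise value of the threshold.

```python
H = 6.62607015e-34      # Planck constant in J s
C = 299_792_458.0       # speed of light in m/s
EV = 1.602176634e-19    # joules per electronvolt


def photon_energy_ev(wavelength_nm: float) -> float:
    """Photon energy in eV for a given wavelength in nanometres (E = h c / lambda)."""
    return H * C / (wavelength_nm * 1e-9) / EV


print(f"{photon_energy_ev(91.2):.2f} eV")  # close to the 13.6 eV needed to ionize hydrogen
```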

(At much shorter wavelengths the probability of absorption begins to drop again, which is why X-ray afterglows are still detectable.)

As a result, observed spectra of very high-redshift GRBs often drop to zero at wavelengths shorter than the hydrogen ionization threshold (known as the Lyman break), as redshifted from the GRB host's reference frame into the observer's.
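Where the break lands in the observed spectrum follows from the cosmological redshift relation λ_obs = λ_rest(1 + z). A minimal sketch, assuming a rest-frame threshold of 91.2 nm and a hypothetical burst at z = 6:

```python
LYMAN_LIMIT_NM = 91.2  # rest-frame hydrogen ionization threshold (assumed value)


def observed_lyman_break_nm(redshift: float) -> float:
    """Observed wavelength (nm) of the Lyman break for a source at the given redshift."""
    return LYMAN_LIMIT_NM * (1.0 + redshift)


# Hypothetical burst at z = 6: the break moves from the far-ultraviolet to ~640 nm
print(f"{observed_lyman_break_nm(6.0):.0f} nm")
```

For such a source the cutoff sits in the visible band, which is why a sharp drop in the optical spectrum is a useful redshift indicator for distant bursts.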

Other, less dramatic hydrogen absorption features are also commonly seen in high-z GRBs, such as the Lyman alpha forest.