Generative adversarial network

[4] The core idea of a GAN is "indirect" training through the discriminator, another neural network that judges how "realistic" its input seems and that is itself updated dynamically.

[5] This means that the generator is not trained to minimize the distance to a specific image, but rather to fool the discriminator.

Typically, the generator is seeded with randomized input that is sampled from a predefined latent space (e.g. a multivariate normal distribution).
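As a minimal sketch of this indirect training signal, the following toy example evaluates a discriminator loss and a generator loss for one-dimensional data. The affine generator, the logistic discriminator, their parameter values, and the stand-in "real" data are all illustrative assumptions. The generator loss uses the commonly used non-saturating form rather than any objective stated above.

```python
import math
import random

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def generator(z, a=0.5, b=1.0):
    # Hypothetical affine generator: maps latent noise z to a sample.
    return a * z + b

def discriminator(x, w=1.0, c=-2.0):
    # Hypothetical logistic discriminator: estimated probability that x is real.
    return sigmoid(w * x + c)

def gan_losses(real_samples, latents):
    fake_samples = [generator(z) for z in latents]
    # Discriminator loss: binary cross-entropy with labels real=1, fake=0.
    d_loss = -(
        sum(math.log(discriminator(x)) for x in real_samples) / len(real_samples)
        + sum(math.log(1.0 - discriminator(x)) for x in fake_samples) / len(fake_samples)
    )
    # Generator loss (non-saturating form): low when fakes are scored as real.
    g_loss = -sum(math.log(discriminator(x)) for x in fake_samples) / len(fake_samples)
    return d_loss, g_loss

rng = random.Random(0)
latents = [rng.gauss(0.0, 1.0) for _ in range(256)]  # z sampled from N(0, 1)
real = [rng.gauss(4.0, 0.5) for _ in range(256)]     # stand-in "real" data
d_loss, g_loss = gan_losses(real, latents)
```

Note that `g_loss` never refers to a target sample: it depends on the generated data only through the discriminator's output.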

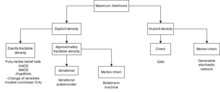

To see its significance, one must compare GAN with previous methods for learning generative models, which were plagued with "intractable probabilistic computations that arise in maximum likelihood estimation and related strategies".

[1] At the same time, Kingma and Welling[10] and Rezende et al.[11] developed the same idea of reparametrization into a general stochastic backpropagation method.

However, since neither strategy set is finitely spanned, the minimax theorem does not apply, and the idea of an "equilibrium" becomes delicate.

[13] Further, even if an equilibrium still exists, it can only be found by searching in the high-dimensional space of all possible neural network functions.

The standard strategy of using gradient descent to find the equilibrium often does not work for GAN, and often the game "collapses" into one of several failure modes.
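One way to see why plain gradient methods can fail to reach an equilibrium is a toy two-player game, sketched below. It is not a GAN: the bilinear payoff f(x, y) = x * y, the step size, and the function name are assumptions chosen for illustration. One player descends on x while the other ascends on y, and the iterates spiral away from the equilibrium at (0, 0) instead of converging to it.

```python
import math

def simultaneous_gda(x, y, lr=0.1, steps=100):
    """Simultaneous gradient descent on x and ascent on y for f(x, y) = x * y."""
    radii = [math.hypot(x, y)]  # distance from the equilibrium (0, 0)
    for _ in range(steps):
        grad_x, grad_y = y, x   # df/dx = y, df/dy = x
        x, y = x - lr * grad_x, y + lr * grad_y
        radii.append(math.hypot(x, y))
    return x, y, radii

_, _, radii = simultaneous_gda(1.0, 1.0)
```

Each update multiplies the squared distance from the origin by (1 + lr^2), so the distance grows at every step for any positive step size.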

Some researchers perceive the root problem to be a weak discriminative network that fails to notice the pattern of omission, while others assign blame to a bad choice of objective function.

[21] They also proposed using the Adam stochastic optimization[22] to avoid mode collapse, as well as the Fréchet inception distance for evaluating GAN performance.
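The Fréchet inception distance compares Gaussian fits to feature statistics of real and generated images. The sketch below is a simplified illustration under two loud assumptions: the feature vectors are plain lists supplied by the caller (in practice they come from a pretrained Inception network), and the covariances are treated as diagonal, which reduces the trace term to a sum of squared differences of standard deviations. The function names are hypothetical.

```python
import math

def mean_and_std(rows):
    """Per-dimension mean and population standard deviation of feature vectors."""
    n, dim = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(dim)]
    stds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / n) for j in range(dim)]
    return means, stds

def frechet_distance_diag(feats_a, feats_b):
    """Squared Fréchet distance between Gaussians fitted with diagonal covariance."""
    mu_a, sd_a = mean_and_std(feats_a)
    mu_b, sd_b = mean_and_std(feats_b)
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_a, mu_b))
    # For diagonal covariances, Tr(Sa + Sb - 2 (Sa Sb)^(1/2)) = sum (sd_a - sd_b)^2.
    cov_term = sum((a - b) ** 2 for a, b in zip(sd_a, sd_b))
    return mean_term + cov_term
```

Identical feature sets give a distance of zero, and shifting every feature vector by a constant offset adds the squared length of that offset.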

The effect of using this objective is analyzed in Section 2.2.2 of Arjovsky et al.[33]

Original GAN, maximum likelihood:

This naturally leads to the idea of training another network that performs "encoding", creating an autoencoder out of the encoder-generator pair.

Already in the original paper,[1] the authors noted that "Learned approximate inference can be performed by training an auxiliary network to predict

In such cases, data augmentation can be applied, to allow training GAN on smaller datasets.

is the set of four images of an arrow, pointing in 4 directions, and the data augmentation is "randomly rotate the picture by 90, 180, 270 degrees with probability

StyleGAN-2 improves upon StyleGAN-1, by using the style latent vector to transform the convolution layer's weights instead, thus solving the "blob" problem.
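A rough sketch of what transforming the convolution weights with a style vector can look like is given below. The text above does not specify the layout or the normalization, so both are assumptions: weights are indexed as `weights[o][i][k]` (output channel, input channel, flattened kernel position), `style[i]` is a per-input-channel scale, and the renormalization step follows the "weight demodulation" idea as commonly described for StyleGAN-2. The function name is hypothetical.

```python
import math

def modulate_demodulate(weights, style, eps=1e-8):
    """Scale conv weights by a style vector, then renormalize per output channel."""
    result = []
    for w_o in weights:
        # Modulate: scale each input channel's kernel by its style coefficient.
        scaled = [[style[i] * w for w in w_oi] for i, w_oi in enumerate(w_o)]
        # Demodulate: divide by the L2 norm so each output channel has unit norm.
        norm = math.sqrt(sum(w * w for w_oi in scaled for w in w_oi) + eps)
        result.append([[w / norm for w in w_oi] for w_oi in scaled])
    return result
```

Because the style acts on the weights rather than on the feature maps, the statistics of the layer output stay controlled without normalizing the activations directly.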

It also tunes the amount of data augmentation applied by starting at zero, and gradually increasing it until an "overfitting heuristic" reaches a target level, thus the name "adaptive".
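The adaptive schedule can be pictured as a simple feedback controller, sketched below. The target value, the step size, the heuristic readings, and the function name are hypothetical placeholders, not values taken from the method itself.

```python
def update_augment_probability(p, overfit_signal, target=0.6, step=0.01):
    """Nudge augmentation probability p so overfit_signal moves toward target."""
    if overfit_signal > target:
        p += step  # discriminator looks overfit: augment more
    elif overfit_signal < target:
        p -= step  # little sign of overfitting: augment less
    return min(1.0, max(0.0, p))  # keep p a valid probability

p = 0.0  # start with no augmentation
for signal in [0.9, 0.9, 0.8, 0.7, 0.5]:  # made-up heuristic readings
    p = update_augment_probability(p, signal)
```

In this run the probability rises for the first four readings and falls back by one step on the last, ending near 0.03.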

[54] They analyzed the problem using the Nyquist–Shannon sampling theorem, and argued that the layers in the generator learned to exploit the high-frequency signal in the pixels they operate upon.

The resulting StyleGAN-3 solves the texture sticking problem and generates images that rotate and translate smoothly.
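The sampling-theorem argument rests on aliasing: content above half the sampling rate cannot be told apart from lower-frequency content once it is sampled. The one-dimensional sketch below illustrates only that general fact, not the StyleGAN-3 architecture; the frequencies, sampling rate, and function name are arbitrary assumptions.

```python
import math

def sample_sine(freq_hz, sample_rate_hz, n_samples):
    """Samples of sin(2*pi*f*t) taken at t = n / sample_rate_hz."""
    return [
        math.sin(2.0 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(n_samples)
    ]

sample_rate = 10.0                        # Nyquist frequency is 5 Hz
high = sample_sine(9.0, sample_rate, 20)  # 9 Hz lies above the Nyquist frequency
low = sample_sine(1.0, sample_rate, 20)   # 1 Hz alias of the 9 Hz signal
# On this grid the 9 Hz samples equal the negated 1 Hz samples.
max_gap = max(abs(h + l) for h, l in zip(high, low))
```

Up to floating-point rounding, `max_gap` is zero, so the sampled 9 Hz signal is indistinguishable from a sign-flipped 1 Hz signal.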

[55] This works by feeding the embeddings of the source and target task to the discriminator which tries to guess the context.

[74] GANs can be used to detect glaucomatous images, aiding early diagnosis, which is essential to avoid partial or total loss of vision.

[76] Concerns have been raised about the potential use of GAN-based human image synthesis for sinister purposes, e.g., to produce fake, possibly incriminating, photographs and videos.

[78] In 2019, the state of California considered[79] and, on October 3, 2019, passed bill AB-602, which bans the use of human image synthesis technologies to make fake pornography without the consent of the people depicted, and bill AB-730, which prohibits distribution of manipulated videos of a political candidate within 60 days of an election.

Both bills were authored by Assembly member Marc Berman and signed by Governor Gavin Newsom.

[88][89] A GAN, trained on a set of 15,000 portraits from WikiArt from the 14th to the 19th century, created the 2018 painting Edmond de Belamy, which sold for US$432,500.

[91] In 2020, Artbreeder was used to create the main antagonist in the sequel to the psychological web horror series Ben Drowned.

The author would later go on to praise GAN applications for their ability to help generate assets for independent artists who are short on budget and manpower.

[92][93] In May 2020, Nvidia researchers taught an AI system (termed "GameGAN") to recreate the game of Pac-Man simply by watching it being played.

[96] In 1991, Juergen Schmidhuber published "artificial curiosity", neural networks in a zero-sum game.

GANs can be regarded as a case where the environmental reaction is 1 or 0 depending on whether the first network's output is in a given set.