L-estimator

The main benefits of L-estimators are that they are often extremely simple and often robust: assuming sorted data, they are very easy to calculate and interpret, and they are often resistant to outliers.

However, they are inefficient, and in modern robust statistics M-estimators are preferred, although these are much more difficult computationally.

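The median is a simple example of an L-estimator: for an odd number of values it is the middle order statistic, and for an even number of values it is the average of the two middle order statistics. Both are linear combinations of order statistics.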
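The idea that the median is a linear combination of order statistics can be sketched in a few lines of Python. The function names `l_estimate` and `median_weights` are hypothetical and chosen only for this illustration: the first forms a weighted sum of the sorted sample, and the second builds the weight vector that picks out the median.

```python
def l_estimate(sample, weights):
    """Return the weighted sum of the order statistics of `sample`."""
    ordered = sorted(sample)  # order statistics x_(1) <= ... <= x_(n)
    if len(weights) != len(ordered):
        raise ValueError("need exactly one weight per order statistic")
    return sum(w * x for w, x in zip(weights, ordered))


def median_weights(n):
    """Weights that make l_estimate() return the median of n values."""
    weights = [0.0] * n
    if n % 2 == 1:
        weights[n // 2] = 1.0        # single middle value
    else:
        weights[n // 2 - 1] = 0.5    # average of the two middle values
        weights[n // 2] = 0.5
    return weights


odd = [7, 1, 5, 3, 9]
even = [7, 1, 5, 3]
print(l_estimate(odd, median_weights(len(odd))))    # 5.0
print(l_estimate(even, median_weights(len(even))))  # 4.0
```

Other weight vectors give other L-estimators under the same scheme: equal weights of 1/n give the mean, and weights of 0.5 on the first and last positions give the mid-range.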

Note that some of these (such as median, or mid-range) are measures of central tendency, and are used as estimators for a location parameter, such as the mean of a normal distribution, while others (such as range or trimmed range) are measures of statistical dispersion, and are used as estimators of a scale parameter, such as the standard deviation of a normal distribution.

The breakdown point is defined as the fraction of the measurements which can be arbitrarily changed without causing the resulting estimate to tend to infinity (i.e., to "break down").

Not all L-estimators are robust; any L-estimator that includes the minimum or maximum has a breakdown point of 0.

These non-robust L-estimators include the minimum, maximum, mean, and mid-range.
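The effect of a breakdown point of 0 can be illustrated with a small Python sketch. The data values and the helper `mid_range` are made up for this example. A single observation is replaced by a very large number: the estimators that use the maximum are dragged along with it, while the median does not move.

```python
from statistics import mean, median


def mid_range(xs):
    """Average of the smallest and largest values."""
    return (min(xs) + max(xs)) / 2


clean = [2.0, 3.0, 4.0, 5.0, 6.0]
# Corrupt one measurement out of five by replacing it with a huge value.
corrupted = clean[:-1] + [1e12]

for name, estimator in [("median", median), ("mean", mean),
                        ("mid-range", mid_range), ("maximum", max)]:
    print(f"{name:>9}: clean={estimator(clean):.1f}  "
          f"corrupted={estimator(corrupted):.3g}")
```

Making the corrupted value larger makes the mean, mid-range, and maximum arbitrarily large, which is what a breakdown point of 0 means; the median stays at 4.0 as long as fewer than half of the values are corrupted.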

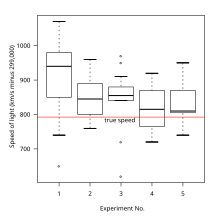

However, the simplicity of L-estimators means that they are easily interpreted and visualized, and makes them suited for descriptive statistics and statistics education; many can even be computed mentally from a five-number summary or seven-number summary, or visualized from a box plot.

L-estimators play a fundamental role in many approaches to non-parametric statistics.

The choice of L-estimator and adjustment depends on the distribution whose parameter is being estimated.

However, if the distribution has skew, symmetric L-estimators will generally be biased and require adjustment.

For a scale estimator, dividing by a suitable constant (computed using the error function) makes it an unbiased, consistent estimator for the population standard deviation if the data follow a normal distribution.
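As an illustration of this kind of adjustment, the sketch below rescales the interquartile range, which is chosen here as an assumed example of a scale L-estimator. For a normal distribution the quartiles lie at about ±0.6745 standard deviations, so the interquartile range is about 1.349 standard deviations; the constant equals 2√2 erf⁻¹(1/2), computed here through the equivalent normal quantile function. The helper `quantile` uses linear interpolation, which is only one of several quantile conventions, and `sigma_from_iqr` is a hypothetical name.

```python
import random
from statistics import NormalDist


def quantile(ordered, p):
    """Linearly interpolated p-quantile of an already sorted list."""
    pos = p * (len(ordered) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (pos - lo) * (ordered[hi] - ordered[lo])


# Width of the central 50% of a standard normal distribution (about 1.349).
IQR_TO_SIGMA = 2 * NormalDist().inv_cdf(0.75)


def sigma_from_iqr(sample):
    """Estimate a normal standard deviation from the interquartile range."""
    ordered = sorted(sample)
    iqr = quantile(ordered, 0.75) - quantile(ordered, 0.25)
    return iqr / IQR_TO_SIGMA


random.seed(0)  # fixed seed so the simulated sample is reproducible
sample = [random.gauss(10.0, 2.0) for _ in range(20_000)]
print(round(IQR_TO_SIGMA, 3))
print(sigma_from_iqr(sample))  # should be close to the true value 2.0
```

The constant is specific to the normal distribution; for other distributions a different divisor would be needed.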

Assuming sorted data, L-estimators involving only a few points can be calculated with far fewer mathematical operations than efficient estimates.[2][3]

Before the advent of electronic calculators and computers, these provided a useful way to extract much of the information from a sample with minimal labour.

These remained in practical use through the early and mid-20th century, when automated sorting of punch card data was possible but computation remained difficult,[2] and they are still of use today for estimates from a list of numerical values in non-machine-readable form, where data input is more costly than manual sorting.

Alternatively, they show that order statistics contain a significant amount of information.

Using a single point, the mean of a normal distribution is estimated by taking the median of the sample, with no calculations required (other than sorting); this yields an efficiency of 64% or better (for all n).

Using two points, a simple estimate is the midhinge (the 25% trimmed mid-range), but a more efficient estimate is the 29% trimmed mid-range, that is, averaging the two values 29% of the way in from the smallest and the largest values: the 29th and 71st percentiles; this has an efficiency of about 81%.
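The two-point estimates described above can be sketched as follows. The helper names `quantile` and `trimmed_mid_range` are hypothetical, and the percentile rule (linear interpolation between neighbouring order statistics) is an assumption, since several conventions exist and they give slightly different values on small samples.

```python
def quantile(ordered, p):
    """Linearly interpolated p-quantile of an already sorted list."""
    pos = p * (len(ordered) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (pos - lo) * (ordered[hi] - ordered[lo])


def trimmed_mid_range(sample, trim):
    """Average of the points `trim` of the way in from each end."""
    ordered = sorted(sample)
    return (quantile(ordered, trim) + quantile(ordered, 1 - trim)) / 2


data = [12.1, 9.8, 10.4, 11.3, 8.7, 10.0, 9.5, 10.9, 250.0]  # one gross outlier

print(trimmed_mid_range(data, 0.25))  # midhinge
print(trimmed_mid_range(data, 0.29))  # 29% trimmed mid-range
print(trimmed_mid_range(data, 0.0))   # plain mid-range, pulled up by the outlier
```

Only two order statistics are read from the sorted data in each case, so the cost beyond sorting is a single addition and a halving.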

For estimating the standard deviation of a normal distribution, the scaled interdecile range gives a reasonably efficient estimator. Alternatively, taking the 7% trimmed range (the difference between the 7th and 93rd percentiles) and dividing by 3 (corresponding to about 86% of the data of a normal distribution falling within 1.5 standard deviations of the mean) yields an estimate of about 65% efficiency.
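A minimal sketch of the 7% trimmed range rule follows, under the same assumptions as before: a linearly interpolated percentile convention and hypothetical helper names. It also checks the coverage figure quoted above, the share of a normal distribution lying within 1.5 standard deviations of the mean.

```python
import random
from statistics import NormalDist


def quantile(ordered, p):
    """Linearly interpolated p-quantile of an already sorted list."""
    pos = p * (len(ordered) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (pos - lo) * (ordered[hi] - ordered[lo])


def sigma_from_trimmed_range(sample, trim=0.07, divisor=3.0):
    """Estimate a normal standard deviation as (P93 - P7) / 3."""
    ordered = sorted(sample)
    return (quantile(ordered, 1 - trim) - quantile(ordered, trim)) / divisor


# Share of a normal distribution within 1.5 standard deviations of the mean.
std_normal = NormalDist()
coverage = std_normal.cdf(1.5) - std_normal.cdf(-1.5)
print(round(coverage, 4))

random.seed(1)  # fixed seed so the simulated sample is reproducible
sample = [random.gauss(0.0, 2.0) for _ in range(20_000)]
print(sigma_from_trimmed_range(sample))  # should be close to the true value 2.0
```

The divisor 3 is a rounded convenience value: the 7th and 93rd percentiles of a normal distribution sit slightly inside ±1.5 standard deviations, so the estimate comes out marginally low on average in exchange for easy arithmetic.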