Computer

It was not until the mid-20th century that the word acquired its modern definition; according to the Oxford English Dictionary, the first known use of the word computer was in a different sense, in a 1613 book called The Yong Mans Gleanings by the English writer Richard Brathwait: "I haue [sic] read the truest computer of Times, and the best Arithmetician that euer [sic] breathed, and he reduceth thy dayes into a short number."

The Online Etymology Dictionary indicates that the "modern use" of the term, to mean 'programmable digital electronic computer' dates from "1945 under this name; [in a] theoretical [sense] from 1937, as Turing machine".

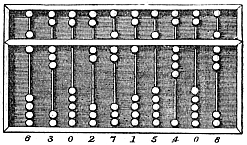

In a medieval European counting house, a checkered cloth would be placed on a table, and markers moved around on it according to certain rules, as an aid to calculating sums of money.

A combination of the planisphere and dioptra, the astrolabe was effectively an analog computer capable of working out several different kinds of problems in spherical astronomy.

The sector, a calculating instrument used for solving problems in proportion, trigonometry, multiplication and division, and for various functions, such as squares and cube roots, was developed in the late 16th century and found application in gunnery, surveying and navigation.

In the 1890s, the Spanish engineer Leonardo Torres Quevedo began to develop a series of advanced analog machines that could solve real and complex roots of polynomials,[17][18][19][20] which were published in 1901 by the Paris Academy of Sciences.

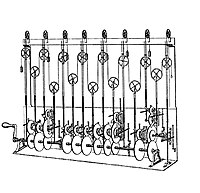

The engine would incorporate an arithmetic logic unit, control flow in the form of conditional branching and loops, and integrated memory, making it the first design for a general-purpose computer that could be described in modern terms as Turing-complete.

Babbage's failure to complete the analytical engine can be chiefly attributed to political and financial difficulties as well as his desire to develop an increasingly sophisticated computer and to move ahead faster than anyone else could follow.

[16] The art of mechanical analog computing reached its zenith with the differential analyzer, built by H. L. Hazen and Vannevar Bush at MIT starting in 1927.

The engineer Tommy Flowers, working at the Post Office Research Station in London in the 1930s, began to explore the possible use of electronics for the telephone exchange.

Experimental equipment that he built in 1934 went into operation five years later, converting a portion of the telephone exchange network into an electronic data processing system, using thousands of vacuum tubes.

[50] During World War II, the British code-breakers at Bletchley Park achieved a number of successes at breaking encrypted German military communications.

[51][52] To crack the more sophisticated German Lorenz SZ 40/42 machine, used for high-level Army communications, Max Newman and his colleagues commissioned Flowers to build the Colossus.

Built under the direction of John Mauchly and J. Presper Eckert at the University of Pennsylvania, ENIAC's development and construction lasted from 1943 to full operation at the end of 1945.

The machine was huge, weighing 30 tons, using 200 kilowatts of electric power and contained over 18,000 vacuum tubes, 1,500 relays, and hundreds of thousands of resistors, capacitors, and inductors.

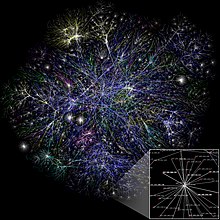

Except for the limitations imposed by their finite memory stores, modern computers are said to be Turing-complete, which is to say, they have algorithm execution capability equivalent to a universal Turing machine.

However, early junction transistors were relatively bulky devices that were difficult to manufacture on a mass-production basis, which limited them to a number of specialized applications.

[71] At the University of Manchester, a team under the leadership of Tom Kilburn designed and built a machine using the newly developed transistors instead of valves.

However, the machine did make use of valves to generate its 125 kHz clock waveforms and in the circuitry to read and write on its magnetic drum memory, so it was not the first completely transistorized computer.

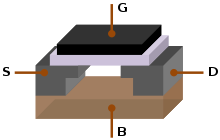

[71] With its high scalability,[81] and much lower power consumption and higher density than bipolar junction transistors,[82] the MOSFET made it possible to build high-density integrated circuits.

In turn, the planar process was based on Carl Frosch and Lincoln Derick work on semiconductor surface passivation by silicon dioxide.

[e] The control system's function is as follows— this is a simplified description, and some of these steps may be performed concurrently or in a different order depending on the type of CPU: Since the program counter is (conceptually) just another set of memory cells, it can be changed by calculations done in the ALU.

[122] The set of arithmetic operations that a particular ALU supports may be limited to addition and subtraction, or might include multiplication, division, trigonometry functions such as sine, cosine, etc., and square roots.

As data is constantly being worked on, reducing the need to access main memory (which is often slow compared to the ALU and control units) greatly increases the computer's speed.

Multiprocessor and multi-core (multiple CPUs on a single integrated circuit) personal and laptop computers are now widely available, and are being increasingly used in lower-end markets as a result.

Large computer programs consisting of several million instructions may take teams of programmers years to write, and due to the complexity of the task almost certainly contain errors.

While a person will normally read each word and line in sequence, they may at times jump back to an earlier place in the text or skip sections that are not of interest.

The following example is written in the MIPS assembly language: Once told to run this program, the computer will perform the repetitive addition task without further human intervention.

[i] Historically a significant number of other CPU architectures were created and saw extensive use, notably including the MOS Technology 6502 and 6510 in addition to the Zilog Z80.

However, in some cases they may cause the program or the entire system to "hang", becoming unresponsive to input such as mouse clicks or keystrokes, to completely fail, or to crash.