Local regression

LOESS and LOWESS build on "classical" methods, such as linear and nonlinear least squares regression.

They address situations in which the classical procedures do not perform well or cannot be effectively applied without undue labor.

Because it is so computationally intensive, LOESS would have been practically impossible to use in the era when least squares regression was being developed.

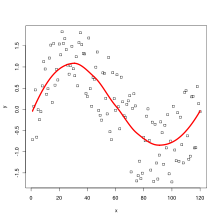

A smooth curve through a set of data points obtained with this statistical technique is called a loess curve, particularly when each smoothed value is given by a weighted quadratic least squares regression over the span of values of the y-axis scattergram criterion variable.[6][7]

Local regression and closely related procedures have a long and rich history, having been discovered and rediscovered in different fields on multiple occasions.

An early work by Robert Henderson[8] studying the problem of graduation (a term for smoothing used in actuarial literature) introduced local regression using cubic polynomials, and showed how earlier graduation methods could be interpreted as local polynomial fitting.

William S. Cleveland and Catherine Loader (1995)[9] and Lori Murray and David Bellhouse (2019)[10] discuss more of the historical work on graduation.

The Savitzky-Golay filter, introduced by Abraham Savitzky and Marcel J. E. Golay (1964),[11] significantly expanded the method.

Like the earlier graduation work, the focus was on data with an equally spaced predictor variable, where (excluding boundary effects) local regression can be represented as a convolution.

Savitzky and Golay published extensive sets of convolution coefficients for different orders of polynomial and smoothing window widths.
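The convolution view can be illustrated with a short sketch. The Python below is not taken from Savitzky and Golay's paper; the function names (`solve`, `smoothing_coefficients`, `smooth_interior`) are hypothetical, and it assumes unit-spaced data and an unweighted polynomial fit within each window. It derives the weights by solving the least squares normal equations exactly, then applies them as a moving weighted sum over interior points only.

```python
from fractions import Fraction


def solve(a, b):
    """Solve the square linear system a x = b by Gauss-Jordan elimination."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Find a row with a non-zero entry in this column and move it up.
        pivot = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        m[col] = [v / m[col][col] for v in m[col]]
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [row[n] for row in m]


def smoothing_coefficients(half_width, degree):
    """Weights c_j such that sum_j c_j * y[i + j] equals the value, at the
    window centre, of the least squares polynomial fitted to the window."""
    offsets = range(-half_width, half_width + 1)
    # Normal-equation matrix A^T A for the basis 1, j, j^2, ..., j^degree.
    ata = [[Fraction(sum(j ** (p + q) for j in offsets))
            for q in range(degree + 1)]
           for p in range(degree + 1)]
    # The fitted value at offset 0 is the intercept of the polynomial.
    # Solving (A^T A) u = e0 gives c_j = sum_p u_p * j^p.
    e0 = [Fraction(1)] + [Fraction(0)] * degree
    u = solve(ata, e0)
    return [sum(u[p] * Fraction(j) ** p for p in range(degree + 1))
            for j in offsets]


def smooth_interior(y, coeffs):
    """Apply the weights as a moving weighted sum, skipping the boundaries."""
    h = len(coeffs) // 2
    return [sum(c * y[i + j] for j, c in zip(range(-h, h + 1), coeffs))
            for i in range(h, len(y) - h)]
```

For a five-point window and a quadratic fit, `smoothing_coefficients(2, 2)` gives the weights (-3, 12, 17, 12, -3)/35. Because the fit is exact for quadratics, smoothing the sequence 0, 1, 4, 9, ... leaves the interior values unchanged.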

Important contributions include Jianqing Fan and Irène Gijbels (1992)[16] studying efficiency properties, and David Ruppert and Matthew P. Wand (1994)[17] developing an asymptotic distribution theory for multivariate local regression.

LOWESS implements local linear fitting with a single predictor variable, and also introduces robustness downweighting to make the procedure resistant to outliers.
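Robustness downweighting can be sketched as follows. The text above does not specify the weighting scheme, so this example assumes the bisquare weights commonly associated with Cleveland's procedure, scaled by six times the median absolute residual. The function name `bisquare_robustness_weights` is hypothetical. The returned weights would multiply the local regression weights in a subsequent refit.

```python
from statistics import median


def bisquare_robustness_weights(residuals):
    """Map residuals from a previous fit to weights in [0, 1].

    Assumed scheme: bisquare function applied to r / (6 * s), where s is
    the median absolute residual. Large residuals receive weight zero.
    """
    s = median(abs(r) for r in residuals)
    if s == 0:
        # At least half the residuals are exactly zero, so the scale is
        # undefined; this sketch simply applies no downweighting then.
        return [1.0] * len(residuals)
    weights = []
    for r in residuals:
        u = r / (6.0 * s)
        weights.append((1.0 - u * u) ** 2 if abs(u) < 1.0 else 0.0)
    return weights
```

With residuals such as `[0.1, -0.2, 0.1, 5.0, -0.1]`, the outlying fourth observation receives weight zero, while the others keep weights close to one.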

LOESS is a multivariate smoother, able to handle spatial data with two (or more) predictor variables, and uses (by default) local quadratic fitting.

Local regression is a general term for the fitting procedure; LOWESS and LOESS are two distinct implementations.

The unknown ‘smooth’ regression function to be estimated represents the conditional expectation of the response, given a value of the predictor variables.

In theoretical work, the ‘smoothness’ of this function can be formally characterized by placing bounds on higher order derivatives.

This matrix representation is crucial for studying the theoretical properties of local regression estimates.

The subsets of data used for each weighted least squares fit in LOESS are determined by a nearest neighbors algorithm.

A user-specified input to the procedure called the "bandwidth" or "smoothing parameter" determines how much of the data is used to fit each local polynomial.
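A minimal sketch of how a nearest-neighbour bandwidth might drive a single local fit is given below. It is illustrative only and not the reference LOESS code: it assumes one predictor, a local linear (degree 1) model, and tricube weights, and the names `tricube`, `local_linear_fit` and `span` are hypothetical. The `span` argument is the fraction of the data used for each fit.

```python
import math


def tricube(u):
    """Tricube weight: (1 - |u|^3)^3 inside the window, zero outside."""
    u = abs(u)
    return (1.0 - u ** 3) ** 3 if u < 1.0 else 0.0


def local_linear_fit(x0, xs, ys, span):
    """Estimate the regression function at x0 by a weighted straight-line
    fit to the nearest neighbours of x0."""
    n = len(xs)
    k = max(2, min(n, math.ceil(span * n)))      # neighbours per local fit
    h = sorted(abs(x - x0) for x in xs)[k - 1]   # distance to k-th nearest
    if h > 0:
        w = [tricube((x - x0) / h) for x in xs]
    else:
        w = [float(x == x0) for x in xs]         # all neighbours sit on x0
    sw = sum(w)
    if sw == 0.0:
        # Every neighbour lies exactly on the window edge; weight equally.
        w = [float(abs(x - x0) <= h) for x in xs]
        sw = sum(w)
    # Closed-form weighted least squares for a straight line.
    xbar = sum(wi * x for wi, x in zip(w, xs)) / sw
    ybar = sum(wi * y for wi, y in zip(w, ys)) / sw
    sxx = sum(wi * (x - xbar) ** 2 for wi, x in zip(w, xs))
    if sxx == 0.0:
        return ybar                              # slope is not identifiable
    sxy = sum(wi * (x - xbar) * (y - ybar) for wi, x, y in zip(w, xs, ys))
    return ybar + (sxy / sxx) * (x0 - xbar)
```

Evaluating `local_linear_fit` over a grid of `x0` values traces out the smooth curve. A larger `span` brings more points into each fit and gives a smoother result, while a smaller `span` follows the data more closely.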

Using too small a value of the smoothing parameter is not desirable, however, since the regression function will eventually start to capture the random error in the data.

The degree 0 (local constant) model is equivalent to a kernel smoother, usually credited to Èlizbar Nadaraya (1964)[22] and G. S. Watson (1964).[23]
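The equivalence can be made explicit with a short derivation. The notation here is assumed for illustration and does not appear in the text above: $K$ is a weight (kernel) function, $h$ the bandwidth, $(x_i, Y_i)$ the observations, and $\hat{\mu}(x)$ the estimate at $x$. Minimizing the locally weighted sum of squares $\sum_i w_i(x)\,(Y_i - a)^2$ over the single constant $a$ gives a weighted average of the responses:

```latex
\hat{\mu}(x) = \frac{\sum_{i=1}^{n} w_i(x)\, Y_i}{\sum_{i=1}^{n} w_i(x)},
\qquad
w_i(x) = K\!\left(\frac{x_i - x}{h}\right)
```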

As with bandwidth selection, methods such as cross-validation can be used to compare the fits obtained with different degrees of polynomial.
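Leave-one-out cross-validation is one way such a comparison might be carried out. The sketch below is a simplified illustration rather than a prescribed procedure: it uses a local constant smoother with a Gaussian kernel (an assumption made for brevity), and the names `kernel_smooth`, `loocv_score` and `choose_bandwidth` are hypothetical. The same scoring loop could equally compare polynomial degrees instead of bandwidths.

```python
import math


def kernel_smooth(x0, xs, ys, h):
    """Local constant estimate at x0 with a Gaussian kernel of bandwidth h."""
    w = [math.exp(-0.5 * ((x - x0) / h) ** 2) for x in xs]
    return sum(wi * y for wi, y in zip(w, ys)) / sum(w)


def loocv_score(xs, ys, h):
    """Mean squared error when each point is predicted from all the others."""
    total = 0.0
    for i in range(len(xs)):
        rest_x = xs[:i] + xs[i + 1:]
        rest_y = ys[:i] + ys[i + 1:]
        total += (ys[i] - kernel_smooth(xs[i], rest_x, rest_y, h)) ** 2
    return total / len(xs)


def choose_bandwidth(xs, ys, candidates):
    """Return the candidate bandwidth with the smallest cross-validation score."""
    return min(candidates, key=lambda h: loocv_score(xs, ys, h))
```

A very small bandwidth relative to the spacing of the data can make all kernel weights underflow to zero in this sketch, so candidate values should be chosen with the data spacing in mind.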

As discussed above, the biggest advantage LOESS has over many other methods is that the process of fitting a model to the sample data does not begin with the specification of a function.

Although it is less obvious than for some of the other methods related to linear least squares regression, LOESS also accrues most of the benefits typically shared by those procedures.

It requires fairly large, densely sampled data sets in order to produce good models.[7]

Another disadvantage of LOESS is that it does not produce a regression function that is easily represented by a mathematical formula.

Transferring the regression function to another person requires that they have both the data set and software for LOESS calculations.

In particular, the simple form of LOESS cannot be used for mechanistic modelling where fitted parameters specify particular physical properties of a system.

Finally, as discussed above, LOESS is a computationally intensive method (with the exception of evenly spaced data, where the regression can then be phrased as a non-causal finite impulse response filter).

This article incorporates public domain material from the National Institute of Standards and Technology