Graphics pipeline

[2] Due to the dependence on specific software, hardware configurations, and desired display attributes, a universally applicable graphics pipeline does not exist.

[3] A graphics pipeline can be divided into three main parts: Application, Geometry, and Rasterization.

During the application step, changes are made to the scene as required, for example in response to user interaction via input devices or during an animation.

The new scene with all its primitives, usually triangles, lines, and points, is then passed on to the next step in the pipeline.

Examples of tasks that are typically done in the application step are collision detection, animation, morphing, and acceleration techniques using spatial subdivision schemes such as quadtrees or octrees.
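As an illustration of spatial subdivision, here is a minimal sketch of a simple region quadtree that stores 2D points. It is only one of several possible designs. The class names `Rect` and `QuadTree` and the parameters `capacity` and `max_depth` are hypothetical and not taken from any particular engine. A query for a small area skips every quadrant that cannot overlap it, so a collision check only needs to test nearby objects instead of all pairs.

```python
from dataclasses import dataclass, field


@dataclass
class Rect:
    """Axis-aligned rectangle: minimum corner (x, y) plus width and height."""
    x: float
    y: float
    w: float
    h: float

    def contains(self, px: float, py: float) -> bool:
        # Half-open intervals, so a point on a shared border goes to only one quadrant.
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h

    def intersects(self, other: "Rect") -> bool:
        return not (other.x >= self.x + self.w or other.x + other.w <= self.x
                    or other.y >= self.y + self.h or other.y + other.h <= self.y)


@dataclass
class QuadTree:
    """Region quadtree: a node splits into four quadrants once it holds `capacity` points."""
    bounds: Rect
    capacity: int = 4
    depth: int = 0
    max_depth: int = 8  # stops endless splitting when many points coincide
    points: list = field(default_factory=list)
    children: list = field(default_factory=list)

    def insert(self, px: float, py: float) -> bool:
        if not self.bounds.contains(px, py):
            return False
        if not self.children:
            if len(self.points) < self.capacity or self.depth >= self.max_depth:
                self.points.append((px, py))
                return True
            self._subdivide()
        return any(child.insert(px, py) for child in self.children)

    def _subdivide(self) -> None:
        b = self.bounds
        hw, hh = b.w / 2, b.h / 2
        self.children = [
            QuadTree(Rect(b.x + dx, b.y + dy, hw, hh), self.capacity, self.depth + 1, self.max_depth)
            for dx in (0, hw) for dy in (0, hh)
        ]
        # Move the points stored so far down into the new quadrants.
        for p in self.points:
            for child in self.children:
                if child.insert(*p):
                    break
        self.points = []

    def query(self, area: Rect) -> list:
        """Return all stored points inside `area`, skipping quadrants that cannot overlap it."""
        if not self.bounds.intersects(area):
            return []
        found = [p for p in self.points if area.contains(*p)]
        for child in self.children:
            found.extend(child.query(area))
        return found
```

An octree follows the same pattern in three dimensions, with eight children per node instead of four.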

How these tasks are organized into actual parallel pipeline steps depends on the particular implementation.

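The world coordinate system should meet a few conditions so that the following mathematics is easily applicable; in particular, it should be a rectangular Cartesian coordinate system whose axes are all equally scaled. How the unit of the coordinate system is defined is left to the developer.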

Whether the unit vector of the system corresponds in reality to one meter or to one ångström therefore depends on the application.

In addition, several differently transformed copies can be formed from one object, for example a forest from a single tree; this technique is called instancing.
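The core idea of instancing can be sketched in a few lines: one mesh is stored once, and each copy contributes only its own transform parameters. This is a minimal 2D sketch under stated assumptions. `TREE_MESH`, `make_instance_transform`, and `build_forest` are hypothetical names, and the per-instance parameters (position, rotation angle, uniform scale) are an illustrative choice. On a GPU the expansion happens in an instanced draw call rather than on the CPU.

```python
import math

# Hypothetical "tree" mesh (a single triangle outline), stored only once in model coordinates.
TREE_MESH = [(0.0, 0.0), (1.0, 0.0), (0.5, 2.0)]


def make_instance_transform(tx, ty, angle_rad, scale):
    """Return a function mapping model coordinates to world coordinates for one instance."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)

    def transform(x, y):
        # Scale, then rotate about the origin, then translate.
        x, y = x * scale, y * scale
        return (c * x - s * y + tx, s * x + c * y + ty)

    return transform


def build_forest(instances):
    """Expand (tx, ty, angle, scale) tuples into world-space copies of the shared mesh."""
    forest = []
    for tx, ty, angle, scale in instances:
        t = make_instance_transform(tx, ty, angle, scale)
        forest.append([t(x, y) for x, y in TREE_MESH])
    return forest
```

Only the small per-instance tuples differ between trees, which is what makes instancing cheap in memory.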

Now we could calculate the position of the vertices of the aircraft in world coordinates by multiplying each point successively with these four matrices.

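Since multiplying a matrix by a vector is fairly expensive and would have to be repeated four times for every vertex, one usually takes another path: the four matrices are first multiplied together into a single matrix, which is then applied to each vertex only once.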
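The cost argument can be made concrete. A 4×4 matrix-vector product takes 16 multiplications and a 4×4 matrix-matrix product takes 64. For four matrices and V vertices, the step-by-step approach costs 4 · 16 · V = 64V multiplications. Combining first costs 3 · 64 + 16V = 192 + 16V, which is cheaper as soon as V > 4. The sketch below, using plain nested lists and hypothetical helper names, checks that both paths give the same result.

```python
from functools import reduce


def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as row-major nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def mat_vec(m, v):
    """Multiply a 4x4 matrix by a homogeneous column vector [x, y, z, w]."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]


def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def scaling(s):
    return [[s, 0, 0, 0], [0, s, 0, 0], [0, 0, s, 0], [0, 0, 0, 1]]


def transform_naive(matrices, vertices):
    """Apply the matrices one after another: len(matrices) mat-vec products per vertex.
    `matrices` is given in application order (first applied first)."""
    out = []
    for v in vertices:
        for m in matrices:
            v = mat_vec(m, v)
        out.append(v)
    return out


def transform_combined(matrices, vertices):
    """Combine the matrices once, then do a single mat-vec product per vertex."""
    # The first-applied matrix must end up rightmost: M = M4 @ M3 @ M2 @ M1.
    combined = reduce(lambda acc, m: mat_mul(m, acc), matrices)
    return [mat_vec(combined, v) for v in vertices]
```

For example, scaling by 2, translating by 1 in x, scaling by 3, and translating by 5 in y maps the vertex (1, 1, 1) to (9, 11, 6) along either path.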

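In matrix chaining, each transformation defines a new coordinate system relative to the previous one, which makes the scheme easy to extend, for example by attaching a part to an object with one additional matrix. Because matrix multiplication is not commutative, the order of the matrices matters.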
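A small sketch of chaining, kept in 2D with 3×3 homogeneous matrices for brevity. The propeller, its offset, and all function names are hypothetical illustrations. Each matrix maps from a child coordinate system into its parent's, so the propeller's world matrix is simply the aircraft's world matrix times the propeller's local matrix. Swapping the order of rotation and translation gives a different result.

```python
import math


def mat_mul(a, b):
    """Multiply two 3x3 matrices (2D homogeneous coordinates, row-major nested lists)."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def apply(m, x, y):
    """Transform the 2D point (x, y) with a 3x3 homogeneous matrix."""
    return (m[0][0] * x + m[0][1] * y + m[0][2], m[1][0] * x + m[1][1] * y + m[1][2])


def translate(tx, ty):
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]


def rotate(deg):
    r = math.radians(deg)
    c, s = math.cos(r), math.sin(r)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]


# Aircraft: turned by 90 degrees, then placed at (100, 50) in the world.
world_from_aircraft = mat_mul(translate(100, 50), rotate(90))
# Hypothetical propeller hub 4 units ahead of the aircraft's origin, in aircraft coordinates.
aircraft_from_propeller = translate(4, 0)
# Chaining: the propeller's coordinate system is defined relative to the aircraft's.
world_from_propeller = mat_mul(world_from_aircraft, aircraft_from_propeller)
```

Here `apply(world_from_propeller, 0, 0)` gives approximately (100, 54). In contrast, `mat_mul(rotate(90), translate(100, 50))` moves the origin to approximately (-50, 100), because the translation is then rotated as well.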

The application can then change these matrices dynamically, for example by updating the aircraft's position every frame according to its speed.
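A per-frame update of this kind can be sketched as follows, assuming a constant velocity and a simple explicit Euler step. The `Aircraft` class, its velocity fields, and `update` are hypothetical. Only the translation part of the model matrix is rebuilt here; rotation would be handled the same way.

```python
from dataclasses import dataclass


@dataclass
class Aircraft:
    x: float
    y: float
    z: float
    vx: float  # velocity in world units per second (assumed constant)
    vy: float
    vz: float

    def model_matrix(self):
        """Translation part of the model matrix, rebuilt from the current position."""
        return [[1, 0, 0, self.x], [0, 1, 0, self.y], [0, 0, 1, self.z], [0, 0, 0, 1]]


def update(aircraft: Aircraft, dt: float) -> None:
    """Advance the aircraft by one frame lasting dt seconds (explicit Euler step)."""
    aircraft.x += aircraft.vx * dt
    aircraft.y += aircraft.vy * dt
    aircraft.z += aircraft.vz * dt
```

Scaling the step by the frame time `dt` keeps the speed independent of the frame rate: 60 updates with `dt = 1/60` move an aircraft flying at 100 units per second by about 100 units.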

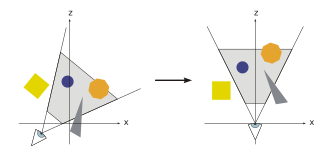

To limit the number of displayed objects, two additional clipping planes, a near and a far plane, are used; the visual volume is therefore a truncated pyramid (frustum).

Maps, for example, use an orthogonal projection (a so-called orthophoto), whereas oblique images of a landscape cannot be used in this way: although they can technically be rendered, they appear so distorted that they are of no practical use.
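The defining property of an orthogonal (orthographic) projection is that projected size does not depend on distance, which is what a map needs. The sketch below builds such a matrix under assumed conventions: a camera looking down the negative z-axis, x and y mapped to [-1, 1], and z mapped to [0, 1]. Other APIs use other conventions, and `orthographic` is a hypothetical helper name.

```python
def orthographic(left, right, bottom, top, near, far):
    """Orthographic projection matrix (row-major). Maps the view box to x, y in [-1, 1] and
    z in [0, 1], assuming a camera that looks down the negative z-axis."""
    return [
        [2 / (right - left), 0, 0, -(right + left) / (right - left)],
        [0, 2 / (top - bottom), 0, -(top + bottom) / (top - bottom)],
        [0, 0, -1 / (far - near), -near / (far - near)],
        [0, 0, 0, 1],
    ]


def mat_vec(m, v):
    """Multiply a 4x4 matrix by a homogeneous column vector."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]
```

With a view box from -10 to 10, a point at x = 5 projects to 0.5 whether it lies 10 or 90 units in front of the camera. The w component stays 1, so no perspective division takes place.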

The smallest and greatest distances (the near and far values) have to be given here for two reasons. First, the projection uses them to scale the scene, dividing coordinates by depth so that more distant objects appear smaller than near ones in a perspective image. Second, they are used to scale the Z values to the range 0..1 for filling the Z-buffer.
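Both roles of the near and far values show up in a perspective projection matrix. This is a sketch of one common form, assuming a camera looking down the negative z-axis and a zero-to-one depth range as used by Direct3D and Vulkan; OpenGL traditionally maps depth to [-1, 1] instead. The helper names are hypothetical. The division by w = -z produces the shrinking with distance, and the third row maps the near plane to depth 0 and the far plane to depth 1.

```python
import math


def perspective(fov_y_deg, aspect, near, far):
    """Perspective projection matrix (row-major) for a camera looking down -z.
    After the divide by w, depth maps near -> 0 and far -> 1."""
    f = 1 / math.tan(math.radians(fov_y_deg) / 2)
    return [
        [f / aspect, 0, 0, 0],
        [0, f, 0, 0],
        [0, 0, far / (near - far), near * far / (near - far)],
        [0, 0, -1, 0],
    ]


def project(m, x, y, z):
    """Transform a view-space point and perform the perspective divide."""
    clip = [m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(4)]
    w = clip[3]  # equals -z: the distance in front of the camera
    return clip[0] / w, clip[1] / w, clip[2] / w
```

With a 90° field of view, a point at x = 1 projects to about 1.0 at distance 1 and to about 0.5 at distance 2. Its depth rises from 0 at the near plane to 1 at the far plane.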

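If the difference between the near and far values is too large, the limited resolution of the Z-buffer leads to so-called Z-fighting: surfaces that lie close together receive the same stored depth value and flicker against each other.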
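The effect can be reproduced numerically. This sketch assumes the standard zero-to-one perspective depth mapping and a fixed-point depth buffer; the function names are hypothetical. With a near value of 0.1 and a far value of 10000, two surfaces at distances 500 and 501 end up with the same 16-bit depth value. A 24-bit buffer still separates them, and surfaces close to the camera are resolved even at 16 bits, because this mapping concentrates precision near the near plane.

```python
def depth_value(distance, near, far):
    """Normalized [0, 1] depth that a perspective projection assigns to a point at the
    given view distance (near -> 0, far -> 1)."""
    return far * (distance - near) / (distance * (far - near))


def quantize(depth, bits):
    """Store a normalized depth in a fixed-point depth buffer with the given bit count."""
    return round(depth * ((1 << bits) - 1))


def z_fights(d1, d2, near, far, bits=16):
    """True if surfaces at distances d1 and d2 land on the same depth-buffer value."""
    return quantize(depth_value(d1, near, far), bits) == quantize(depth_value(d2, near, far), bits)
```

Moving the near plane farther out, rather than only shrinking the far value, is usually the most effective way to regain precision.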

In this case, a gain factor for the texture is calculated for each vertex based on the light sources and the material properties associated with the corresponding triangle.
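The text does not specify the lighting model, so the following is only a minimal sketch using a simple Lambertian (diffuse) model with an ambient term, which is an assumption. `vertex_gain` and its `ambient` and `diffuse` material coefficients are hypothetical names. Each point light contributes in proportion to the cosine between the surface normal and the direction to the light, and the sum is clamped to 1.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def vertex_gain(position, normal, lights, ambient, diffuse):
    """Per-vertex brightness factor using a simple Lambertian model.
    lights: list of (light_position, intensity) point lights.
    ambient, diffuse: material coefficients of the triangle the vertex belongs to."""
    n = normalize(normal)
    gain = ambient
    for light_pos, intensity in lights:
        to_light = normalize(tuple(l - p for l, p in zip(light_pos, position)))
        # Surfaces facing away from a light (negative cosine) get no diffuse light from it.
        gain += diffuse * intensity * max(0.0, dot(n, to_light))
    return min(gain, 1.0)
```

The resulting factor would then scale the texture color at that vertex and be interpolated across the triangle.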

This visual volume is defined as the inside of a frustum, a shape in the form of a pyramid with a cut-off top.

Only the primitives that lie within the visual volume, possibly after being clipped, are forwarded to the final step.
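Before any actual clipping, a pipeline can cheaply sort triangles into three groups: fully inside the frustum, certainly outside it, or in need of clipping. This sketch tests homogeneous clip-space vertices (x, y, z, w) against the six frustum planes. It assumes the zero-to-one depth convention (0 ≤ z ≤ w), whereas OpenGL uses -w ≤ z ≤ w. `PLANES` and `classify_triangle` are hypothetical names. The outside test is conservative: a triangle whose vertices lie outside different planes is reported as needing clipping.

```python
# The six frustum planes as "inside" tests on a clip-space vertex (x, y, z, w).
# Assumes the zero-to-one depth convention (0 <= z <= w).
PLANES = [
    lambda x, y, z, w: x >= -w,  # left
    lambda x, y, z, w: x <= w,   # right
    lambda x, y, z, w: y >= -w,  # bottom
    lambda x, y, z, w: y <= w,   # top
    lambda x, y, z, w: z >= 0,   # near
    lambda x, y, z, w: z <= w,   # far
]


def classify_triangle(vertices):
    """Return 'inside', 'outside', or 'clip' for a triangle of three clip-space vertices."""
    if all(inside(*v) for v in vertices for inside in PLANES):
        return "inside"   # forwarded unchanged
    for inside in PLANES:
        if not any(inside(*v) for v in vertices):
            return "outside"  # every vertex is beyond the same plane: discard
    return "clip"  # straddles the boundary: must be clipped against the frustum
```

Only triangles classified as `"clip"` need the more expensive polygon clipping, for example with the Sutherland–Hodgman algorithm.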

On modern hardware, most of the geometry computation steps are performed in the vertex shader.

The vertex shader is, in principle, freely programmable, but it generally performs at least the transformation of the vertices and the lighting calculation.

In this stage of the graphics pipeline, the grid points are also called fragments, to distinguish them more clearly.
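One common way to generate fragments is the edge-function approach, sketched below for a single screen-space triangle. Each pixel center in the triangle's bounding box is tested against the three edges. `edge` and `rasterize` are hypothetical names, and the sketch omits the tie-breaking fill rules that real rasterizers use. As a result, a pixel whose center lies exactly on a shared edge is produced by both neighboring triangles.

```python
def edge(ax, ay, bx, by, px, py):
    """Twice the signed area of triangle (a, b, p): its sign tells on which side of
    the directed edge a -> b the point p lies."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize(v0, v1, v2, width, height):
    """Return the (x, y) pixels whose centers lie inside the screen-space triangle v0, v1, v2."""
    area = edge(*v0, *v1, *v2)
    if area == 0:
        return []  # degenerate triangle: covers no pixels
    xs, ys = (v0[0], v1[0], v2[0]), (v0[1], v1[1], v2[1])
    fragments = []
    # Scan only the triangle's bounding box, clamped to the screen.
    for y in range(max(0, int(min(ys))), min(height, int(max(ys)) + 1)):
        for x in range(max(0, int(min(xs))), min(width, int(max(xs)) + 1)):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            w0 = edge(*v1, *v2, px, py)
            w1 = edge(*v2, *v0, px, py)
            w2 = edge(*v0, *v1, px, py)
            if area < 0:
                w0, w1, w2 = -w0, -w1, -w2  # accept either winding order
            if w0 >= 0 and w1 >= 0 and w2 >= 0:
                fragments.append((x, y))
    return fragments
```

Divided by `area`, the three edge values are the barycentric coordinates of the pixel center, which is how attributes such as depth and color are interpolated for each fragment.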

If a fragment is visible, it can now be blended with the color values already in the image if transparency or multisampling is used.
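The visibility test and the blending step can be sketched together. This example assumes a depth buffer where smaller values are closer and standard "over" alpha blending. `write_fragment` and the nested-list buffers are hypothetical. It also assumes the common practice of letting only opaque fragments update the depth buffer, which is a design choice rather than a fixed rule.

```python
def write_fragment(color_buf, depth_buf, x, y, depth, rgb, alpha):
    """Depth-test a fragment and blend it into the color buffer ("over" blending).

    Buffers are nested lists indexed [y][x]; smaller depth means closer to the camera.
    Returns True if the fragment passed the depth test."""
    if depth >= depth_buf[y][x]:
        return False  # hidden behind something already drawn
    old = color_buf[y][x]
    color_buf[y][x] = tuple(alpha * c + (1 - alpha) * o for c, o in zip(rgb, old))
    if alpha == 1.0:
        depth_buf[y][x] = depth  # in this sketch only opaque fragments update the depth buffer
    return True
```

For a pixel that holds an opaque red fragment, a half-transparent blue fragment in front of it produces (0.5, 0.0, 0.5), while any fragment behind it is rejected.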

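To prevent the user from seeing the gradual rasterization of the primitives, double buffering is used: the image is rendered into a hidden back buffer, which is swapped with the displayed front buffer once the frame is complete.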
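The mechanism can be sketched with two pixel arrays whose roles are exchanged on each swap. The `DoubleBuffer` class and its methods are hypothetical. In real systems the swap is usually just a pointer exchange, often synchronized with the display's vertical retrace (vsync) to avoid tearing.

```python
class DoubleBuffer:
    """Two framebuffers: the display shows `front` while rendering writes to `back`."""

    def __init__(self, width, height, clear=0):
        self.front = [[clear] * width for _ in range(height)]
        self.back = [[clear] * width for _ in range(height)]
        self.clear_value = clear

    def draw_pixel(self, x, y, value):
        self.back[y][x] = value  # never touches what is currently visible

    def swap(self):
        """Present the finished frame by exchanging the buffers, then clear the new back buffer."""
        self.front, self.back = self.back, self.front
        for row in self.back:
            row[:] = [self.clear_value] * len(row)
```

Pixels drawn during a frame only become visible after `swap()`, so the viewer never sees a half-finished image.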

Modern graphics cards use a freely programmable, shader-controlled pipeline, which allows direct access to individual processing steps.

To relieve the main processor, additional processing steps have been moved to the pipeline and the GPU.

It is also possible to use a so-called compute shader to perform arbitrary calculations on the GPU that are unrelated to displaying graphics.

These universal calculations are also called general-purpose computing on graphics processing units, or GPGPU for short.

Mesh shaders are a recent addition that aims to overcome the bottlenecks caused by the fixed layout of the geometry pipeline.