Restricted Boltzmann machine

[1] RBMs were initially proposed under the name Harmonium by Paul Smolensky in 1986,[2] and rose to prominence after Geoffrey Hinton and collaborators used fast learning algorithms for them in the mid-2000s.

RBMs have found applications in dimensionality reduction,[3] classification,[4] collaborative filtering,[5] feature learning,[6] topic modelling,[7] immunology,[8] and even many‑body quantum mechanics.

They can be trained in either supervised or unsupervised ways, depending on the task.

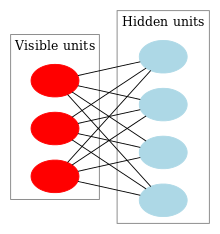

[citation needed] As their name implies, RBMs are a variant of Boltzmann machines, with the restriction that their neurons must form a bipartite graph: By contrast, "unrestricted" Boltzmann machines may have connections between hidden units.

[12] Restricted Boltzmann machines can also be used in deep learning networks.

[13] The standard type of RBM has binary-valued (Boolean) hidden and visible units, and consists of a matrix of weights

Given the weights and biases, the energy of a configuration (pair of Boolean vectors) (v,h) is defined as or, in matrix notation, This energy function is analogous to that of a Hopfield network.

As with general Boltzmann machines, the joint probability distribution for the visible and hidden vectors is defined in terms of the energy function as follows,[14] where

over all possible configurations, which can be interpreted as a normalizing constant to ensure that the probabilities sum to 1.

Since the underlying graph structure of the RBM is bipartite (meaning there are no intra-layer connections), the hidden unit activations are mutually independent given the visible unit activations.

[clarification needed] In this case, the logistic function for visible units is replaced by the softmax function where K is the number of discrete values that the visible values have.

[15][16] The graphical model of RBMs corresponds to that of factor analysis.

), or equivalently, to maximize the expected log probability of a training sample

:[15][16] The algorithm most often used to train RBMs, that is, to optimize the weight matrix

, is the contrastive divergence (CD) algorithm due to Hinton, originally developed to train PoE (product of experts) models.

[18][19] The algorithm performs Gibbs sampling and is used inside a gradient descent procedure (similar to the way backpropagation is used inside such a procedure when training feedforward neural nets) to compute weight update.

The basic, single-step contrastive divergence (CD-1) procedure for a single sample can be summarized as follows: A Practical Guide to Training RBMs written by Hinton can be found on his homepage.