Slice sampling

In order to sample X in a manner which will retain the distribution f(x), some sampling technique must be used which takes into account the varied likelihoods for each range of f(x).

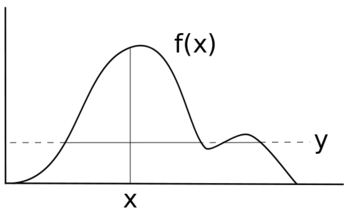

Slice sampling, in its simplest form, samples uniformly from underneath the curve f(x) without the need to reject any points, as follows: The motivation here is that one way to sample a point uniformly from within an arbitrary curve is first to draw thin uniform-height horizontal slices across the whole curve.

Then, we can sample a point within the curve by randomly selecting a slice that falls at or below the curve at the x-position from the previous iteration, then randomly picking an x-position somewhere along the slice.

By using the x-position from the previous iteration of the algorithm, in the long run we select slices with probabilities proportional to the lengths of their segments within the curve.

The most difficult part of this algorithm is finding the bounds of the horizontal slice, which involves inverting the function describing the distribution being sampled from.

This is especially problematic for multi-modal distributions, where the slice may consist of multiple discontinuous parts.

This algorithm can be used to sample from the area under any curve, regardless of whether the function integrates to 1.

In fact, scaling a function by a constant has no effect on the sampled x-positions.

This means that the algorithm can be used to sample from a distribution whose probability density function is only known up to a constant (i.e. whose normalizing constant is unknown), which is common in computational statistics.

This can be visualized as alternatively sampling the y-position and then the x-position of points under PDF, thus the Xs are from the desired distribution.

If both the PDF and its inverse are available, and the distribution is unimodal, then finding the slice and sampling from it are simple.

If not, a stepping-out procedure can be used to find a region whose endpoints fall outside the slice.

[2] Note that, in contrast to many available methods for generating random numbers from non-uniform distributions, random variates generated directly by this approach will exhibit serial statistical dependence.

If the step size is too small random walk causes slow decorrelation.

If the step size is too large there is great inefficiency due to a high rejection rate.

In contrast to Metropolis, slice sampling automatically adjusts the step size to match the local shape of the density function.

Implementation is arguably easier and more efficient than Gibbs sampling or simple Metropolis updates.

Note that, in contrast to many available methods for generating random numbers from non-uniform distributions, random variates generated directly by this approach will exhibit serial statistical dependence.

However, the generated samples are markovian, and are therefore expected to converge to the correct distribution in long run.

The rest of each iteration is dedicated to sampling an x value from the slice which is representative of the density of the region being considered.

In practice, sampling from a horizontal slice of a multimodal distribution is difficult.

There is a tension between obtaining a large sampling region and thereby making possible large moves in the distribution space, and obtaining a simpler sampling region to increase efficiency.

One option for simplifying this process is regional expansion and contraction.

→ In a Gibbs sampler, one needs to draw efficiently from all the full-conditional distributions.

If the full-conditional density is log-concave, a more efficient alternative is the application of adaptive rejection sampling (ARS) methods.

[4][5] When the ARS techniques cannot be applied (since the full-conditional is non-log-concave), the adaptive rejection Metropolis sampling algorithms are often employed.

To prevent random walk behavior, overrelaxation methods can be used to update each variable in turn.

This method adapts the univariate algorithm to the multivariate case by substituting a hyperrectangle for the one-dimensional w region used in the original.

Reflective slice sampling is a technique to suppress random walk behavior in which the successive candidate samples of distribution f(x) are kept within the bounds of the slice by "reflecting" the direction of sampling inward toward the slice once the boundary has been hit.

The dots indicate start and stopping points of a sampling walk.