Technological singularity

Prominent technologists and academics dispute the plausibility of a technological singularity and the associated artificial intelligence explosion, including Paul Allen,[13] Jeff Hawkins,[14] John Holland, Jaron Lanier, Steven Pinker,[14] Theodore Modis,[15] Gordon Moore,[14] and Roger Penrose.

This recursive self-improvement could accelerate, potentially allowing enormous qualitative change before any upper limits imposed by the laws of physics or theoretical computation set in.

[41][42] Prominent technologists and academics dispute the plausibility of a technological singularity, including Paul Allen,[13] Jeff Hawkins,[14] John Holland, Jaron Lanier, Steven Pinker,[14] Theodore Modis,[15] and Gordon Moore,[14] whose law is often cited in support of the concept.

The many speculated ways to augment human intelligence include bioengineering, genetic engineering, nootropic drugs, AI assistants, direct brain–computer interfaces and mind uploading.

But Schulman and Sandberg[47] argue that software will present more complex challenges than simply operating on hardware capable of running at human intelligence levels or beyond.

A 2017 email survey of authors with publications at the 2015 NeurIPS and ICML machine learning conferences asked about the chance that "the intelligence explosion argument is broadly correct".

In one of the first uses of the term "singularity" in the context of technological progress, Stanislaw Ulam tells of a conversation with John von Neumann about accelerating change: "One conversation centered on the ever accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue."

He predicts paradigm shifts will become increasingly common, leading to "technological change so rapid and profound it represents a rupture in the fabric of human history".

These threats are major issues for both singularity advocates and critics, and were the subject of Bill Joy's April 2000 Wired magazine article "Why The Future Doesn't Need Us".

This would cause massive unemployment and plummeting consumer demand, which in turn would destroy the incentive to invest in the technologies that would be required to bring about the Singularity.

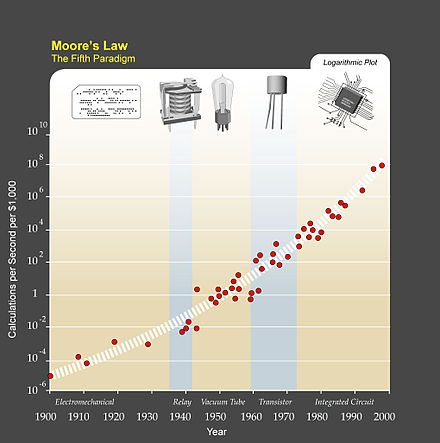

Evidence for this decline is that the rise in computer clock rates is slowing, even while Moore's prediction of exponentially increasing circuit density continues to hold.

[81] In a 2021 article, Modis pointed out that no milestones – breaks in historical perspective comparable in importance to the Internet, DNA, the transistor, or nuclear energy – had been observed in the previous twenty years while five of them would have been expected according to the exponential trend advocated by the proponents of the technological singularity.

[93] David Streitfeld in The New York Times questioned whether "it might manifest first and foremost—thanks, in part, to the bottom-line obsession of today’s Silicon Valley—as a tool to slash corporate America’s head count."

If the rise of superhuman intelligence causes a similar revolution, argues Robin Hanson, one would expect the economy to double at least quarterly and possibly on a weekly basis.
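As a rough illustration of what such doubling times would imply, the following Python sketch converts a number of doublings per year into an annual growth factor. The function name `annual_growth_factor` and the 52-week year are assumptions made for this example; they are not part of Hanson's argument.

```python
def annual_growth_factor(doublings_per_year: float) -> float:
    """Return the factor by which a quantity grows in one year,
    given how many times it doubles during that year."""
    return 2.0 ** doublings_per_year


# One doubling per quarter means four doublings per year.
quarterly = annual_growth_factor(4)

# One doubling per week, assuming a 52-week year (an illustrative assumption).
weekly = annual_growth_factor(52)

print(f"Quarterly doubling: x{quarterly:,.0f} per year")
print(f"Weekly doubling:    x{weekly:.3e} per year")
```

Under these assumptions, quarterly doubling corresponds to a sixteenfold increase per year, while weekly doubling corresponds to a factor of 2^52, roughly 4.5 × 10^15.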

[107] Bill Hibbard (2014) proposes an AI design that avoids several dangers including self-delusion,[108] unintended instrumental actions,[62][109] and corruption of the reward generator.

In the current stage of life's evolution, the carbon-based biosphere has generated a system (humans) capable of creating technology that will result in a comparable evolutionary transition.

In a hard takeoff scenario, an artificial superintelligence rapidly self-improves, "taking control" of the world (perhaps in a matter of hours), too quickly for significant human-initiated error correction or for a gradual tuning of the agent's goals.

[122] Max More disagrees, arguing that if there were only a few superfast human-level AIs, they would not radically change the world, as they would still depend on other people to get things done and would still have human cognitive constraints.

Kurzweil argues that technological advances in medicine would allow us to continuously repair and replace defective components in our bodies, prolonging life to an undetermined age.

[127] Beyond merely extending the operational life of the physical body, Jaron Lanier argues for a form of immortality called "Digital Ascension" that involves "people dying in the flesh and being uploaded into a computer and remaining conscious."[128]

A paper by Mahendra Prasad, published in AI Magazine, asserts that the 18th-century mathematician Marquis de Condorcet was the first person to hypothesize and mathematically model an intelligence explosion and its effects on humanity.

It describes a military AI computer (Golem XIV) that gains consciousness and starts to increase its own intelligence, moving towards a personal technological singularity.

Golem XIV was originally created to aid its builders in fighting wars, but as its intelligence advances to a much higher level than that of humans, it stops being interested in the military requirements because it finds them lacking internal logical consistency.

When this happens, human history will have reached a kind of singularity, an intellectual transition as impenetrable as the knotted space-time at the center of a black hole, and the world will pass far beyond our understanding.

[9][134] In 1986, Vernor Vinge published Marooned in Realtime, a science-fiction novel where a few remaining humans traveling forward in the future have survived an unknown extinction event that might well be a singularity.

In 2000, Bill Joy, a prominent technologist and a co-founder of Sun Microsystems, voiced concern over the potential dangers of robotics, genetic engineering, and nanotechnology.

[33][142] For example, Kurzweil extrapolates current technological trajectories past the arrival of self-improving AI or superhuman intelligence, which Yudkowsky argues represents a tension with both I. J.

[33] In 2009, Kurzweil and X-Prize founder Peter Diamandis announced the establishment of Singularity University, a nonaccredited private institute whose stated mission is "to educate, inspire and empower leaders to apply exponential technologies to address humanity's grand challenges."[143]

Funded by Google, Autodesk, ePlanet Ventures, and a group of technology industry leaders, Singularity University is based at NASA's Ames Research Center in Mountain View, California.

[144][145][146] Former President of the United States Barack Obama spoke about the singularity in a 2016 interview with Wired:[147] "One thing that we haven't talked about too much, and I just want to go back to, is we really have to think through the economic implications."