BERT (language model)

Bidirectional encoder representations from transformers (BERT) is a language model introduced in October 2018 by researchers at Google.

As of 2020, BERT is a ubiquitous baseline in natural language processing (NLP) experiments.

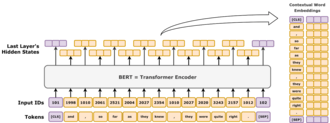

As a result of this training process, BERT learns latent, contextual representations of tokens, similar to ELMo and GPT-2.

[5] It is an evolutionary step over ELMo, and spawned the study of "BERTology", which attempts to interpret what is learned by BERT.

[7] On March 11, 2020, 24 smaller models were released, the smallest being BERTTINY with just 4 million parameters.

The latent vector representation of the model is directly fed into this new module, allowing for sample-efficient transfer learning.

The tokenizer of BERT is WordPiece, which is a sub-word strategy like byte pair encoding.
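As an illustration of sub-word splitting, the following is a minimal sketch of greedy longest-match-first tokenization, the strategy commonly used to apply a WordPiece vocabulary to a word. The function name `wordpiece_tokenize` and the tiny vocabulary `toy_vocab` are hypothetical and chosen only for this example; a real BERT vocabulary contains roughly 30,000 entries.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Split one word into sub-word pieces by greedy longest-match-first."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                # Pieces that continue a word carry the "##" prefix.
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            # No piece matches at this position: the whole word is unknown.
            return [unk_token]
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabulary, for illustration only.
toy_vocab = {"play", "##ing", "##ed", "un", "##play", "##able"}

print(wordpiece_tokenize("playing", toy_vocab))     # ['play', '##ing']
print(wordpiece_tokenize("unplayable", toy_vocab))  # ['un', '##play', '##able']
print(wordpiece_tokenize("xyz", toy_vocab))         # ['[UNK]']
```

The sketch covers only how an existing vocabulary is applied to a word; how the vocabulary itself is learned is not shown.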

The three embedding vectors are added together representing the initial token representation as a function of these three pieces of information.
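The element-wise sum can be sketched as follows, assuming the three pieces of information are the token identity, its position in the sequence, and its segment (first or second sentence). The table sizes, the hidden size of 4, and the names `token_table`, `position_table`, `segment_table`, and `embed` are illustrative assumptions; the normalization and dropout that typically follow the sum are omitted.

```python
import random

random.seed(0)
HIDDEN = 4  # toy hidden size; real models use a much larger value


def make_table(rows, dim):
    """Create a randomly initialised lookup table with `rows` vectors."""
    return [[random.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(rows)]


token_table = make_table(10, HIDDEN)    # one row per vocabulary id
position_table = make_table(8, HIDDEN)  # one row per sequence position
segment_table = make_table(2, HIDDEN)   # one row per segment (A or B)


def embed(token_ids, segment_ids):
    """Return one vector per token: token + position + segment embedding."""
    output = []
    for position, (tok, seg) in enumerate(zip(token_ids, segment_ids)):
        vector = [
            t + p + s
            for t, p, s in zip(
                token_table[tok], position_table[position], segment_table[seg]
            )
        ]
        output.append(vector)
    return output


# The same token id (3) appears at positions 0 and 2 and in different segments.
vectors = embed(token_ids=[3, 7, 3], segment_ids=[0, 0, 1])
print(len(vectors), len(vectors[0]))
print(vectors[0] != vectors[2])
```

Because the position and segment vectors differ, the same token id yields different initial representations at different places in the input.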

A trained BERT model might be applied to word representation (like Word2Vec), where it would be run over sentences not containing any [MASK] tokens.

BERT is meant as a general pretrained model for various applications in natural language processing.

That is, after pre-training, BERT can be fine-tuned with fewer resources on smaller datasets to optimize its performance on specific tasks, such as natural language inference and text classification, as well as sequence-to-sequence-based language generation tasks such as question answering and conversational response generation.

[12] The original BERT paper published results demonstrating that a small amount of finetuning (for BERTLARGE, 1 hour on 1 Cloud TPU) allowed it to achieve state-of-the-art performance on a number of natural language understanding tasks.[1] In the original paper, all parameters of BERT are finetuned, and the authors recommend that, for downstream text classification applications, the output vector at the position of the [CLS] input token be fed into a linear-softmax layer to produce the label outputs.
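A linear-softmax layer applied to the [CLS] output can be sketched as below. The three-dimensional `cls_vector`, the `weights` matrix, and the `bias` values are made-up numbers for a hypothetical two-class task; in practice the vector would come from the encoder and the layer's parameters would be learned during finetuning.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - largest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(cls_vector, weights, bias):
    """Apply one linear layer followed by softmax to the [CLS] vector."""
    logits = [
        sum(w * x for w, x in zip(row, cls_vector)) + b
        for row, b in zip(weights, bias)
    ]
    return softmax(logits)


# Made-up values for illustration: hidden size 3, two output classes.
cls_vector = [0.5, -1.0, 2.0]
weights = [[1.0, 0.0, 0.5], [-1.0, 0.5, 0.0]]
bias = [0.0, 0.1]

probs = classify(cls_vector, weights, bias)
label = max(range(len(probs)), key=probs.__getitem__)
print(probs, label)
```

Here the logits are 1.5 for class 0 and -0.9 for class 1, so class 0 receives the higher probability.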

[3][16][17] Several research publications in 2018 and 2019 investigated how BERT's output responds to carefully chosen input sequences,[18][19] analyzed its internal vector representations through probing classifiers,[20][21] and examined the relationships represented by attention weights.

[16][17] The high performance of the BERT model could also be attributed to the fact that it is bidirectionally trained.

[22] This means that BERT, based on the Transformer model architecture, applies its self-attention mechanism to learn from both the left and the right context of each token during training, and consequently gains a deep understanding of that context.
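The difference between bidirectional and left-to-right attention can be illustrated with a small sketch that computes attention weights with and without a causal mask. The function `attention_weights` and the uniform score matrix are assumptions made for this example; they are not taken from any BERT implementation.

```python
import math


def attention_weights(scores, causal=False):
    """Row-wise softmax over attention scores.

    With causal=True, position i may only attend to positions j <= i,
    as in a left-to-right model. With causal=False, every position
    attends to the whole sequence, as in a bidirectional encoder.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        row = [
            float("-inf") if (causal and j > i) else scores[i][j]
            for j in range(n)
        ]
        largest = max(row)
        exps = [math.exp(s - largest) for s in row]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Uniform scores for a 3-token sequence, so only the mask affects the result.
scores = [[0.0] * 3 for _ in range(3)]

bidirectional = attention_weights(scores)
left_to_right = attention_weights(scores, causal=True)

print(bidirectional[0])  # the first token attends to all three positions
print(left_to_right[0])  # the first token attends only to itself
```

In the bidirectional case the first token's weights are spread over the tokens to its right as well, whereas under the causal mask those positions receive zero weight.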

However, this comes at a cost: because its encoder-only architecture lacks a decoder, BERT cannot be prompted and cannot generate text. Bidirectional models in general do not work effectively without right-side context, which makes them difficult to prompt.

However, this constitutes a dataset shift, as during training, BERT has never seen sentences with that many tokens masked out.

More sophisticated techniques allow text generation, but at a high computational cost.

[23] BERT was originally published by Google researchers Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova.

[26] Unlike previous models, BERT is a deeply bidirectional, unsupervised language representation, pre-trained using only a plain text corpus.

[4] On October 25, 2019, Google announced that they had started applying BERT models for English language search queries within the US.

DistilBERT (2019) distills BERTBASE to a model with just 60% of its parameters (66M), while preserving 95% of its benchmark scores.

ELECTRA (2020)[36] applied the idea of generative adversarial networks to the MLM task.

The key idea of DeBERTa, a later variant with disentangled attention, is to treat the positional and token encodings separately throughout the attention mechanism.

Absolute position encoding is included in the final self-attention layer as additional input.