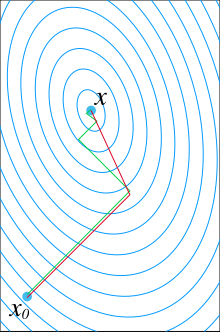

Conjugate gradient method

In mathematics, the conjugate gradient method is an algorithm for the numerical solution of particular systems of linear equations, namely those whose matrix is positive-semidefinite.

Large sparse systems often arise when numerically solving partial differential equations or optimization problems.

The conjugate gradient method can also be used to solve unconstrained optimization problems such as energy minimization.

It is commonly attributed to Magnus Hestenes and Eduard Stiefel,[1][2] who programmed it on the Z4,[3] and extensively researched it.

Despite differences in their approaches, these derivations share a common topic—proving the orthogonality of the residuals and conjugacy of the search directions.

) solves the initial problem follows from its first derivative This suggests taking the first basis vector

Seemingly, the algorithm as stated requires storage of all previous searching directions and residue vectors, as well as many matrix–vector multiplications, and thus can be computationally expensive.

[4] Restarts could slow down convergence, but may improve stability if the conjugate gradient method misbehaves, e.g., due to round-off error.

for the implicit one by the recursion subject to round-off error accumulation, and is thus recommended for an occasional evaluation.

provides a guaranteed level of accuracy both in exact arithmetic and in the presence of the rounding errors, where convergence naturally stagnates.

In practice, the exact solution is never obtained since the conjugate gradient method is unstable with respect to even small perturbations, e.g., most directions are not in practice conjugate, due to a degenerative nature of generating the Krylov subspaces.

to the exact solution and may reach the required tolerance after a relatively small (compared to the problem size) number of iterations.

This limit shows a faster convergence rate compared to the iterative methods of Jacobi or Gauss–Seidel which scale as

[5] In the last stage, the smallest attainable accuracy is reached and the convergence stalls or the method may even start diverging.

In typical scientific computing applications in double-precision floating-point format for matrices of large sizes, the conjugate gradient method uses a stopping criterion with a tolerance that terminates the iterations during the first or second stage.

In most cases, preconditioning is necessary to ensure fast convergence of the conjugate gradient method.

If any of these assumptions on the preconditioner is violated, the behavior of the preconditioned conjugate gradient method may become unpredictable.

In numerically challenging applications, sophisticated preconditioners are used, which may lead to variable preconditioning, changing between iterations.

Even if the preconditioner is symmetric positive-definite on every iteration, the fact that it may change makes the arguments above invalid, and in practical tests leads to a significant slow down of the convergence of the algorithm presented above.

The flexible version is also shown[15] to be robust even if the preconditioner is not symmetric positive definite (SPD).

in order to make them locally optimal, using the line search, steepest descent methods.

[17] In this approach, the conjugate gradient method falls out as an optimal feedback controller,

[17] The conjugate gradient method can be applied to an arbitrary n-by-m matrix by applying it to normal equations ATA and right-hand side vector ATb, since ATA is a symmetric positive-semidefinite matrix for any A.

As an iterative method, it is not necessary to form ATA explicitly in memory but only to perform the matrix–vector and transpose matrix–vector multiplications.

However the downside of forming the normal equations is that the condition number κ(ATA) is equal to κ2(A) and so the rate of convergence of CGNR may be slow and the quality of the approximate solution may be sensitive to roundoff errors.

The LSQR algorithm purportedly has the best numerical stability when A is ill-conditioned, i.e., A has a large condition number.

The conjugate gradient method with a trivial modification is extendable to solving, given complex-valued matrix A and vector b, the system of linear equations

for the complex-valued vector x, where A is Hermitian (i.e., A' = A) and positive-definite matrix, and the symbol ' denotes the conjugate transpose.

The advantages and disadvantages of the conjugate gradient methods are summarized in the lecture notes by Nemirovsky and BenTal.

In words, during the CG process, the error grows exponentially, until it suddenly becomes zero as the unique solution is found.