Speech perception

If one could identify stretches of the acoustic waveform that correspond to units of perception, then the path from sound to meaning would be clear.

The resulting acoustic structure of concrete speech productions depends on the physical and psychological properties of individual speakers.

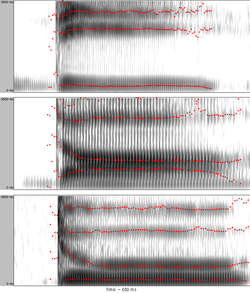

Because speakers have vocal tracts of different sizes (due to sex and age especially) the resonant frequencies (formants), which are important for recognition of speech sounds, will vary in their absolute values across individuals[8] (see Figure 3 for an illustration of this).

It has been proposed that this is achieved by means of the perceptual normalization process in which listeners filter out the noise (i.e. variation) to arrive at the underlying category.

Gradually, adding the same amount of VOT at a time, the plosive is eventually a strongly aspirated voiceless bilabial [pʰ].

The conclusion to make from both the identification and the discrimination test is that listeners will have different sensitivity to the same relative increase in VOT depending on whether or not the boundary between categories was crossed.

To probe the influence of semantic knowledge on perception, Garnes and Bond (1976) similarly used carrier sentences where target words only differed in a single phoneme (bay/day/gay, for example) whose quality changed along a continuum.

Compensatory mechanisms might even operate at the sentence level such as in learned songs, phrases and verses, an effect backed-up by neural coding patterns consistent with the missed continuous speech fragments,[20] despite the lack of all relevant bottom-up sensory input.

Different parts of language processing are impacted depending on the area of the brain that is damaged, and aphasia is further classified based on the location of injury or constellation of symptoms.

[23] Aphasia with impaired speech perception typically shows lesions or damage located in the left temporal or parietal lobes.

There are no known treatments that have been found, but from case studies and experiments it is known that speech agnosia is related to lesions in the left hemisphere or both, specifically right temporoparietal dysfunctions.

Her EEG and MRI results showed "a right cortical parietal T2-hyperintense lesion without gadolinium enhancement and with discrete impairment of water molecule diffusion".

As infants learn how to sort incoming speech sounds into categories, ignoring irrelevant differences and reinforcing the contrastive ones, their perception becomes categorical.

[29] One of the techniques used to examine how infants perceive speech, besides the head-turn procedure mentioned above, is measuring their sucking rate.

In a research study by Patricia K. Kuhl, Feng-Ming Tsao, and Huei-Mei Liu, it was discovered that if infants are spoken to and interacted with by a native speaker of Mandarin Chinese, they can actually be conditioned to retain their ability to distinguish different speech sounds within Mandarin that are very different from speech sounds found within the English language.

[36] In both children with cochlear implants and normal hearing, vowels and voice onset time becomes prevalent in development before the ability to discriminate the place of articulation.

To emulate processing patterns that would be held in the brain under normal conditions, prior knowledge is a key neural factor, since a robust learning history may to an extent override the extreme masking effects involved in the complete absence of continuous speech signals.

Behavioral experiments are based on an active role of a participant, i.e. subjects are presented with stimuli and asked to make conscious decisions about them.

[28] Methods used to measure neural responses to speech include event-related potentials, magnetoencephalography, and near infrared spectroscopy.

One important response used with event-related potentials is the mismatch negativity, which occurs when speech stimuli are acoustically different from a stimulus that the subject heard previously.

Neurophysiological methods were introduced into speech perception research for several reasons: Behavioral responses may reflect late, conscious processes and be affected by other systems such as orthography, and thus they may mask speaker's ability to recognize sounds based on lower-level acoustic distributions.

The possibility to observe low-level auditory processes independently from the higher-level ones makes it possible to address long-standing theoretical issues such as whether or not humans possess a specialized module for perceiving speech[42][43] or whether or not some complex acoustic invariance (see lack of invariance above) underlies the recognition of a speech sound.

[44] Some of the earliest work in the study of how humans perceive speech sounds was conducted by Alvin Liberman and his colleagues at Haskins Laboratories.

[48] The theory is closely related to the modularity hypothesis, which proposes the existence of a special-purpose module, which is supposed to be innate and probably human-specific.

Exemplar models of speech perception differ from the four theories mentioned above which suppose that there is no connection between word- and talker-recognition and that the variation across talkers is "noise" to be filtered out.

According to this view, listeners are inspecting the incoming signal for the so-called acoustic landmarks which are particular events in the spectrum carrying information about gestures which produced them.

Since these gestures are limited by the capacities of humans' articulators and listeners are sensitive to their auditory correlates, the lack of invariance simply does not exist in this model.

In the next stage, acoustic cues are extracted from the signal in the vicinity of the landmarks by means of mental measuring of certain parameters such as frequencies of spectral peaks, amplitudes in low-frequency region, or timing.

These are binary categories related to articulation (for example [+/- high], [+/- back], [+/- round lips] for vowels; [+/- sonorant], [+/- lateral], or [+/- nasal] for consonants.

Thus, when perceiving a speech signal our decision about what we actually hear is based on the relative goodness of the match between the stimulus information and values of particular prototypes.