F-score

In statistical analysis of binary classification and information retrieval systems, the F-score or F-measure is a measure of predictive performance.

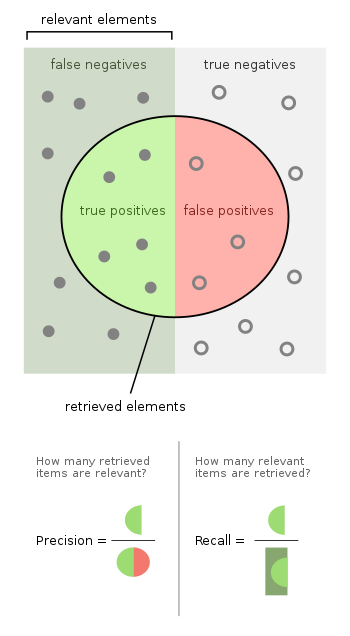

Precision is also known as positive predictive value, and recall is also known as sensitivity in diagnostic binary classification.
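As an illustration, the F1 score is the harmonic mean of precision and recall. The following minimal sketch computes all three from confusion-matrix counts; the function name `precision_recall_f1` and the convention of returning 0.0 for an empty denominator are assumptions made for this example, not part of any standard.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (precision, recall, F1) from true/false positive and false negative counts."""
    # Precision: fraction of predicted positives that are correct.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall (sensitivity): fraction of actual positives that were found.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall (0.0 by convention if both are 0).
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 8 true positives, 2 false positives, 8 false negatives.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=8)
print(f"precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
```

Because the harmonic mean is dominated by the smaller value, F1 here lies closer to the recall of 0.5 than to the precision of 0.8.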

The more general Fβ score applies additional weights, valuing one of precision or recall more than the other.
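The weighting can be sketched in code using the usual formulation Fβ = (1 + β²) · P · R / (β² · P + R), where P is precision and R is recall. The function name `f_beta` and the zero-denominator convention are assumptions for this example.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall; beta > 1 favours recall."""
    if beta <= 0:
        raise ValueError("beta must be positive")
    b2 = beta * beta
    denom = b2 * precision + recall
    # Convention assumed here: return 0.0 when precision and recall are both 0.
    if denom == 0:
        return 0.0
    return (1 + b2) * precision * recall / denom


# Hypothetical classifier with high precision but modest recall.
P, R = 0.8, 0.5
for beta in (0.5, 1.0, 2.0):
    print(f"beta={beta}: {f_beta(P, R, beta):.3f}")
```

With precision higher than recall, the score falls as β grows, because larger β values give more weight to the weaker recall.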

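Fβ "measures the effectiveness of retrieval with respect to a user who attaches β times as much importance to recall as precision".[3]

It is based on Van Rijsbergen's effectiveness measure $E = 1 - \left(\frac{\alpha}{P} + \frac{1-\alpha}{R}\right)^{-1}$, where $P$ is precision and $R$ is recall. Their relationship is $F_\beta = 1 - E$, where $\alpha = \frac{1}{1+\beta^2}$.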
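The relationship can be checked by direct substitution. The derivation below takes the standard form of the effectiveness measure E with α = 1/(1 + β²), writing P for precision and R for recall; these symbols are stated here as assumptions for the worked example.

```latex
\begin{align*}
E &= 1 - \left(\frac{\alpha}{P} + \frac{1-\alpha}{R}\right)^{-1},
  \qquad \alpha = \frac{1}{1+\beta^2} \\
1 - E &= \left(\frac{1}{(1+\beta^2)\,P} + \frac{\beta^2}{(1+\beta^2)\,R}\right)^{-1} \\
      &= \left(\frac{R + \beta^2 P}{(1+\beta^2)\,P R}\right)^{-1} \\
      &= \frac{(1+\beta^2)\,P R}{\beta^2 P + R} = F_\beta
\end{align*}
```

Setting β = 1 gives α = 1/2 and recovers the F1 score, 2PR / (P + R).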

This is related to the field of binary classification where recall is often termed "sensitivity".

Earlier works focused primarily on the F1 score, but with the proliferation of large-scale search engines, performance goals changed to place more emphasis on either precision or recall[15] and so Fβ is seen in wide application.[16]

However, the F-measures do not take true negatives into account, so measures such as the Matthews correlation coefficient, informedness, or Cohen's kappa may be preferred to assess the performance of a binary classifier.[17]

The F-score has been widely used in the natural language processing literature,[18] for example in the evaluation of named entity recognition and word segmentation.[19]

David Hand and others criticize the widespread use of the F1 score since it gives equal importance to precision and recall.

In other words, the relative importance of precision and recall is an aspect of the problem.[22]

According to Davide Chicco and Giuseppe Jurman, the F1 score is less truthful and informative than the Matthews correlation coefficient (MCC) in the evaluation of binary classification.[23]

David M. W. Powers has pointed out that F1 ignores true negatives and is therefore misleading for unbalanced classes, whereas kappa and correlation measures are symmetric and assess both directions of predictability: the classifier predicting the true class, and the true class predicting the classifier's prediction. He proposes separate multiclass measures, informedness and markedness, for the two directions, noting that their geometric mean is correlation.
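The effect of ignoring true negatives can be illustrated with a small sketch. It compares F1 with the Matthews correlation coefficient and informedness (recall + specificity − 1) on hypothetical counts; the function names and the choice to return 0.0 for undefined ratios are assumptions made for this example.

```python
import math


def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 from counts; note that true negatives do not appear at all."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def mcc(tp: int, fp: int, fn: int, tn: int) -> float:
    """Matthews correlation coefficient (0.0 assumed when undefined)."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def informedness(tp: int, fp: int, fn: int, tn: int) -> float:
    """Recall + specificity - 1 (each ratio taken as 0.0 when undefined)."""
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return recall + specificity - 1


# Hypothetical classifier that labels every item positive
# on a data set with 95 positives and 5 negatives.
always_positive = dict(tp=95, fp=5, fn=0, tn=0)
print("F1          :", f1_score(95, 5, 0))
print("MCC         :", mcc(**always_positive))
print("Informedness:", informedness(**always_positive))

# Same tp, fp, fn but different numbers of true negatives:
# F1 cannot change, while MCC does.
for tn in (0, 1000):
    print(tn, f1_score(90, 5, 5), mcc(90, 5, 5, tn))
```

In the first case F1 is about 0.97 even though the classifier carries no information about the class, which MCC and informedness both report as 0 under the conventions assumed here.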

The P4 metric, which is sometimes described as a symmetrical extension of F1, addresses this criticism.
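A brief sketch of the symmetry, assuming the usual definition of P4 as the harmonic mean of precision, recall, specificity, and negative predictive value, which simplifies to 4·TP·TN / (4·TP·TN + (TP + TN)(FP + FN)). The function names and the zero-denominator convention are assumptions for this example.

```python
def p4(tp: int, fp: int, fn: int, tn: int) -> float:
    """P4 in closed form (0.0 assumed when the denominator is 0)."""
    denom = 4 * tp * tn + (tp + tn) * (fp + fn)
    return 4 * tp * tn / denom if denom else 0.0


def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 from counts, for comparison."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical confusion matrix.
tp, fp, fn, tn = 90, 10, 5, 20

# Swapping which class is called "positive" exchanges tp with tn and fp with fn.
print("P4 original:", p4(tp, fp, fn, tn))
print("P4 swapped :", p4(tn, fn, fp, tp))
print("F1 original:", f1_score(tp, fp, fn))
print("F1 swapped :", f1_score(tn, fn, fp))
```

P4 is unchanged when the class labels are swapped, whereas F1 depends on which class is designated positive.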

A common method is to average the F-score over each class, aiming at a balanced measurement of performance.[27]

Macro F1 is a macro-averaged F1 score aiming at a balanced performance measurement.

To calculate macro F1, two different averaging formulas have been used: the F1 score of the (arithmetic) class-wise precision and recall means, or the arithmetic mean of class-wise F1 scores. The latter exhibits more desirable properties.
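The two formulas can give noticeably different values. The sketch below computes both from per-class one-vs-rest counts; the function names, the input layout (a list of `(tp, fp, fn)` tuples), and the zero-denominator convention are assumptions for this example.

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are 0)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def macro_f1_variants(per_class: list[tuple[int, int, int]]) -> tuple[float, float]:
    """Return (F1 of mean precision/recall, mean of class-wise F1 scores).

    per_class holds one (tp, fp, fn) tuple per class, counted one-vs-rest.
    """
    if not per_class:
        raise ValueError("at least one class is required")
    precisions = [tp / (tp + fp) if (tp + fp) else 0.0 for tp, fp, fn in per_class]
    recalls = [tp / (tp + fn) if (tp + fn) else 0.0 for tp, fp, fn in per_class]
    n = len(per_class)
    # Variant 1: average precision and recall first, then take their F1.
    f1_of_means = f1_from_pr(sum(precisions) / n, sum(recalls) / n)
    # Variant 2: take F1 per class, then average the F1 scores.
    mean_of_f1s = sum(f1_from_pr(p, r) for p, r in zip(precisions, recalls)) / n
    return f1_of_means, mean_of_f1s


# Hypothetical two-class problem: class A has 100 items, of which only 10 are
# recognised; class B has 90 items, all recognised, plus the 90 missed A items.
counts = [(10, 0, 90), (90, 90, 0)]
f1_of_means, mean_of_f1s = macro_f1_variants(counts)
print(f"F1 of means: {f1_of_means:.3f}")
print(f"Mean of F1s: {mean_of_f1s:.3f}")
```

In this example the first variant is considerably higher, because averaging precision and recall across classes before combining them hides the poor per-class balance that the mean of class-wise F1 scores exposes.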