Precision and recall

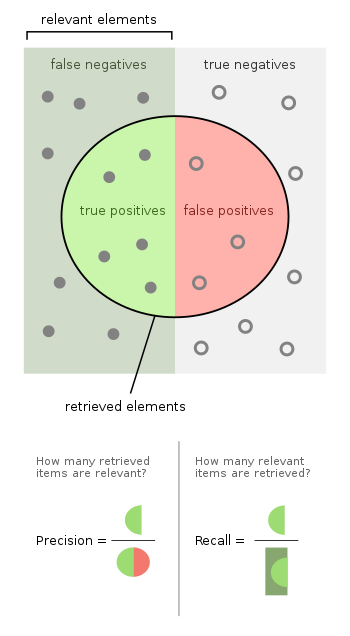

In pattern recognition, information retrieval, object detection and classification (machine learning), precision and recall are performance metrics that apply to data retrieved from a collection, corpus or sample space.

Consider a computer program for recognizing dogs (the relevant element) in a digital photograph.

Precision is related to the type I error rate, but in a slightly more complicated way, as it also depends upon the prior distribution of seeing a relevant vs. an irrelevant item.

For instance, it is possible to have perfect recall by simply retrieving every single item.

Likewise, it is possible to achieve perfect precision by selecting only a very small number of extremely likely items.
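The two extremes above can be sketched with sets. This is only an illustration: the function name `precision_recall`, the 100-item collection, and the five relevant item IDs are hypothetical and do not come from the article.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for retrieved items against relevant items."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)  # relevant items that were retrieved
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical collection of 100 items, 5 of which are relevant.
collection = set(range(100))
relevant = {3, 14, 15, 65, 92}

# Retrieving every item: recall is perfect, precision collapses.
retrieve_all = precision_recall(collection, relevant)

# Retrieving one very likely item: precision is perfect, recall is low.
retrieve_one = precision_recall({14}, relevant)

print(retrieve_all)  # (0.05, 1.0)
print(retrieve_one)  # (1.0, 0.2)
```

Neither extreme is useful on its own, which is why the two metrics are normally reported together.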

Often, there is an inverse relationship between precision and recall, where it is possible to increase one at the cost of reducing the other, but context may dictate whether one is more valued in a given situation. A smoke detector is generally designed to commit many Type I errors (to alert in many situations when there is no danger), because the cost of a Type II error (failing to sound an alarm during a major fire) is prohibitively high.

As such, smoke detectors are designed with recall in mind (to catch all real danger), even while giving little weight to the losses in precision (and making many false alarms).

By contrast, the criminal justice system is geared toward precision (not convicting innocents), even at the cost of losses in recall (letting more guilty people go free).

Brain surgery to remove a cancerous tumor illustrates the tradeoff. The surgeon may be more liberal in the area of the brain they remove to ensure they have extracted all the cancer cells.

That is to say, greater recall increases the chances of removing healthy cells (negative outcome) and increases the chances of removing all cancer cells (positive outcome).

Greater precision decreases the chances of removing healthy cells (positive outcome) but also decreases the chances of removing all cancer cells (negative outcome).

Examples of measures that are a combination of precision and recall are the F-measure (the weighted harmonic mean of precision and recall), or the Matthews correlation coefficient, which is a geometric mean of the chance-corrected variants: the regression coefficients Informedness (DeltaP') and Markedness (DeltaP).
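As a rough sketch of the weighted harmonic mean mentioned above, the F-measure can be computed in its common F-beta form. The function name `f_beta` and the sample values are illustrative assumptions; `beta` greater than 1 weights recall more heavily and `beta` less than 1 weights precision more heavily.

```python
def f_beta(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall (F-beta form)."""
    if precision == 0.0 and recall == 0.0:
        return 0.0  # convention chosen here to avoid division by zero
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Hypothetical classifier with precision 0.5 and recall 1.0.
f1 = f_beta(0.5, 1.0)            # balanced F1, 2/3
f2 = f_beta(0.5, 1.0, beta=2.0)  # recall-weighted, 5/6
print(round(f1, 4), round(f2, 4))
```

Because the harmonic mean is dominated by the smaller of the two values, a classifier cannot reach a high F1 by maximizing only one of precision or recall.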

True Positive Rate and False Positive Rate, or equivalently Recall and 1 - Inverse Recall, are frequently plotted against each other as ROC curves and provide a principled mechanism to explore operating point tradeoffs.
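One way to picture the operating-point tradeoff is to sweep a decision threshold over classifier scores and record a (false positive rate, true positive rate) pair at each step. The sketch below is a plain-Python illustration, assuming binary labels (1 for positive), at least one sample of each class, and a hypothetical helper named `roc_points`; the scores and labels are made up.

```python
def roc_points(scores, labels):
    """Return (FPR, TPR) pairs, one per distinct threshold, highest first."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing is flagged
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


# Hypothetical scores (higher means "more likely positive") and true labels.
scores = [0.9, 0.8, 0.7, 0.4, 0.2]
labels = [1, 1, 0, 1, 0]

pts = roc_points(scores, labels)
for fpr, tpr in pts:
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Lowering the threshold moves along the curve from (0, 0) toward (1, 1): recall rises, but so does the false positive rate.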

Outside of Information Retrieval, the application of Recall, Precision and F-measure is argued to be flawed, as these measures ignore the true negative cell of the contingency table and are easily manipulated by biasing the predictions.

However, Informedness and Markedness are Kappa-like renormalizations of Recall and Precision,[3] and their geometric mean Matthews correlation coefficient thus acts like a debiased F-measure.
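The relationship can be checked numerically. The sketch below assumes the usual definitions Informedness = TPR + TNR - 1 and Markedness = PPV + NPV - 1, and compares their geometric mean with the Matthews correlation coefficient computed directly from the contingency table. The function names and the counts are hypothetical.

```python
import math


def informedness_markedness(tp, fn, fp, tn):
    """Return (informedness, markedness) from contingency-table counts."""
    tpr = tp / (tp + fn)   # recall
    tnr = tn / (tn + fp)   # inverse recall
    ppv = tp / (tp + fp)   # precision
    npv = tn / (tn + fn)   # inverse precision
    return tpr + tnr - 1, ppv + npv - 1


def mcc_direct(tp, fn, fp, tn):
    """Matthews correlation coefficient from the contingency table."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom


# Hypothetical counts.
tp, fn, fp, tn = 40, 10, 20, 30
inf, mark = informedness_markedness(tp, fn, fp, tn)

# Both quantities share the sign of (tp*tn - fp*fn), so the product is >= 0.
sign = 1.0 if inf >= 0 else -1.0
mcc_from_geometric_mean = sign * math.sqrt(inf * mark)

print(round(mcc_from_geometric_mean, 4), round(mcc_direct(tp, fn, fp, tn), 4))
```

Unlike the F-measure, both factors use the true negative count, which is what lets the resulting score correct for chance.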

For classification tasks, the terms true positives, true negatives, false positives, and false negatives compare the results of the classifier under test with trusted external judgments.

Recall in this context is also referred to as the true positive rate or sensitivity, and precision is also referred to as positive predictive value (PPV); other related measures used in classification include true negative rate and accuracy.
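A minimal sketch of how these quantities fall out of a comparison between classifier output and trusted labels is shown below. The helper name `confusion_counts` and the ten-sample data set are illustrative assumptions; labels are encoded as 1 for positive and 0 for negative.

```python
def confusion_counts(actual, predicted):
    """Count (tp, fp, tn, fn) for binary labels, where 1 means positive."""
    tp = fp = tn = fn = 0
    for a, p in zip(actual, predicted):
        if p == 1 and a == 1:
            tp += 1
        elif p == 1 and a == 0:
            fp += 1
        elif p == 0 and a == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


# Hypothetical trusted judgments and classifier output.
actual    = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]

tp, fp, tn, fn = confusion_counts(actual, predicted)

recall = tp / (tp + fn)               # true positive rate, sensitivity
precision = tp / (tp + fp)            # positive predictive value
true_negative_rate = tn / (tn + fp)   # specificity
accuracy = (tp + tn) / len(actual)

print(tp, fp, tn, fn)  # 3 2 4 1
print(recall, precision, round(true_negative_rate, 3), accuracy)
```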

For example, in medical diagnosis, a false positive test can lead to unnecessary treatment and expenses.

In other cases, the cost of a false negative is high, and recall may be a more valuable metric.

The probabilistic interpretation makes it easy to derive how a no-skill classifier would perform.
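As an illustration, suppose a no-skill classifier flags each item as positive with a fixed probability, independently of the true label. Under that assumption (which is one possible reading of "no-skill", not necessarily the only one), the expected contingency-table cells can be written down directly: the ratio for precision equals the prevalence of positives, and the ratio for recall equals the flagging rate. The function name and the sample sizes below are hypothetical.

```python
from fractions import Fraction


def no_skill_expectations(n_pos, n_neg, flag_rate):
    """Precision and recall from expected counts of a random-flagging classifier.

    Assumes flag_rate > 0 and that flagging is independent of the true label.
    """
    expected_tp = n_pos * flag_rate   # positives flagged by chance
    expected_fp = n_neg * flag_rate   # negatives flagged by chance
    precision = expected_tp / (expected_tp + expected_fp)
    recall = expected_tp / n_pos
    return precision, recall


# Hypothetical data set: 5 positives, 95 negatives, half of all items flagged.
precision, recall = no_skill_expectations(5, 95, Fraction(1, 2))
print(precision, recall)  # 1/20 1/2
```

The precision value does not depend on the flagging rate, only on how common positives are, which is why the prevalence serves as a natural baseline for precision.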

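For example, balanced accuracy[16] (bACC) normalizes true positive and true negative predictions by the number of positive and negative samples, respectively, and divides their sum by two:

$$\mathrm{bACC} = \frac{\mathrm{TPR} + \mathrm{TNR}}{2}, \qquad \mathrm{TPR} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}, \qquad \mathrm{TNR} = \frac{\mathrm{TN}}{\mathrm{TN} + \mathrm{FP}}$$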

For the previous example (95 negative and 5 positive samples), classifying all samples as negative gives a balanced accuracy score of 0.5 (the maximum bACC score is one), which is equivalent to the expected value of a random guess on a balanced data set.
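The arithmetic for this example can be sketched as follows; the function name `balanced_accuracy` is an illustrative choice, and the counts are those of the all-negative classifier on 5 positive and 95 negative samples.

```python
def balanced_accuracy(tp, fn, tn, fp):
    """Mean of the true positive rate and the true negative rate."""
    tpr = tp / (tp + fn)
    tnr = tn / (tn + fp)
    return (tpr + tnr) / 2


# Classifying everything as negative: no positives found, no negatives missed.
tp, fn, tn, fp = 0, 5, 95, 0

bacc = balanced_accuracy(tp, fn, tn, fp)
plain_accuracy = (tp + tn) / (tp + fn + tn + fp)

print(bacc)            # 0.5
print(plain_accuracy)  # 0.95
```

Plain accuracy rewards the trivial classifier with 0.95 on this data, while balanced accuracy reports the chance-level 0.5.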

Balanced accuracy can serve as an overall performance metric for a model, whether or not the true labels are imbalanced in the data, assuming the cost of a false negative (FN) is the same as that of a false positive (FP).

Another metric is the predicted positive condition rate (PPCR), which identifies the percentage of the total population that is flagged.
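Read as a formula, the flagged fraction is the number of predicted positives divided by the total population, (TP + FP) / (TP + FP + TN + FN). A brief sketch with hypothetical counts and a hypothetical function name:

```python
def predicted_positive_condition_rate(tp, fp, tn, fn):
    """Fraction of the whole population that the classifier flags as positive."""
    return (tp + fp) / (tp + fp + tn + fn)


# Hypothetical counts: 3 + 2 = 5 items flagged out of 10.
ppcr = predicted_positive_condition_rate(tp=3, fp=2, tn=4, fn=1)
print(ppcr)  # 0.5
```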

When the data are imbalanced, ROC plots may be visually deceptive with respect to conclusions about the reliability of classification performance.

[19] The weighting procedure relates the confusion matrix elements to the support set of each considered class.

In particular circumstances, the F-score can be criticized for several reasons related to its bias as an evaluation metric.

There are other parameters and strategies for measuring the performance of an information retrieval system, such as the area under the ROC curve (AUC)[20] or pseudo-R-squared.

Precision and recall values can also be calculated for classification problems with more than two classes.

The class-wise precision and recall values can then be combined into an overall multi-class evaluation score, e.g., using the macro F1 metric.
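A minimal sketch of this combination is given below. It treats each class in turn as the positive class (one-vs-rest), computes a per-class F1, and averages the results with equal weight. Taking macro F1 as the mean of per-class F1 scores is an assumption here, since some sources instead compute F1 from the averaged precision and recall. The function name and the animal labels are hypothetical.

```python
def macro_f1(actual, predicted):
    """Unweighted mean of per-class F1 scores (one-vs-rest per class)."""
    classes = sorted(set(actual) | set(predicted))
    per_class = {}
    for c in classes:
        tp = sum(1 for a, p in zip(actual, predicted) if a == c and p == c)
        fp = sum(1 for a, p in zip(actual, predicted) if a != c and p == c)
        fn = sum(1 for a, p in zip(actual, predicted) if a == c and p != c)
        denom = 2 * tp + fp + fn
        # F1 = 2PR / (P + R), written in terms of counts; 0.0 if undefined.
        per_class[c] = 2 * tp / denom if denom else 0.0
    return sum(per_class.values()) / len(classes), per_class


# Hypothetical three-class example.
actual    = ["cat", "cat", "dog", "dog", "bird", "bird"]
predicted = ["cat", "dog", "dog", "dog", "bird", "cat"]

score, per_class = macro_f1(actual, predicted)
print(per_class)
print(round(score, 4))
```

Because every class contributes equally, a rare class that is classified poorly lowers the macro score as much as a common one would.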