LeNet

LeNet is a series of convolutional neural network architectures proposed by LeCun et al.[1] The earliest version, LeNet-1, was trained in 1989.[2]

In 1988, LeCun joined the Adaptive Systems Research Department at AT&T Bell Laboratories in Holmdel, New Jersey, United States, headed by Lawrence D. Jackel.

In 1988, LeCun et al. published a neural network design that recognized handwritten zip codes.[3]

In 1989, Yann LeCun et al. at Bell Labs first applied the backpropagation algorithm to a practical application, and argued that the ability of learning networks to generalize could be greatly enhanced by providing constraints from the task's domain.

They performed only minimal preprocessing on the data, and the model was carefully designed for this task and highly constrained.[8]

By 1998, Yann LeCun, Leon Bottou, Yoshua Bengio, and Patrick Haffner were able to provide examples of practical applications of neural networks, such as two systems for recognizing handwritten characters online and models that could read millions of checks per day.[1]

The research was highly successful and drew researchers' interest to the study of neural networks.

The dataset consisted of 9,298 grayscale images digitized from handwritten zip codes that appeared on U.S. mail passing through the post office in Buffalo, New York.[4]

After developing LeNet-1, the team demonstrated it in a real-time application by loading the network onto an AT&T DSP-32C digital signal processor[13] with a peak performance of 12.5 million multiply-add operations per second.

Later, NCR deployed a similar system in large check-reading machines in bank back offices.[1]

However, LeNet did not become popular at the time, because suitable hardware, especially GPUs, was lacking, and because other algorithms, such as support vector machines (SVMs), could achieve similar or even better results.

A three-layer tree architecture that imitates LeNet-5 but has only one convolutional layer has achieved a success rate on the CIFAR-10 dataset comparable to that of LeNet-5.[15]

Increasing the number of filters in the LeNet architecture results in a power-law decay of the error rate.

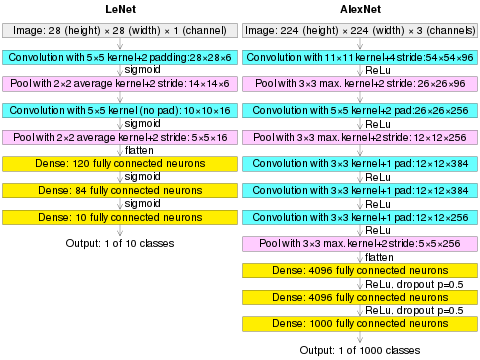

(The AlexNet input size should be 227×227×3 rather than 224×224×3 so that the arithmetic works out. The original paper gives different numbers, but Andrej Karpathy, the former head of computer vision at Tesla, has said it should be 227×227×3, noting that Alex did not explain why he used 224×224×3. The next convolution should then be 11×11 with stride 4, giving 55×55×96 instead of 54×54×96. The output width is calculated as (input width - kernel width) / stride + 1 = (227 - 11) / 4 + 1 = 55. Since the output height equals the output width, each feature map is 55×55.)
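The output-size arithmetic in the note above can be checked with a short Python sketch. It applies the standard formula for a convolution without dilation, (W - K + 2P) / S + 1, and shows why 227 gives a whole number while 224 does not. The helper name `conv_output_size` and its `strict` flag are hypothetical, chosen only for illustration; padding is assumed to be zero for AlexNet's first layer, as the note's formula implies.

```python
def conv_output_size(input_size: int, kernel_size: int, stride: int = 1,
                     padding: int = 0, strict: bool = True) -> int:
    """Output size along one spatial dimension: (W - K + 2P) / S + 1.

    Hypothetical helper for illustration. With strict=True, raise ValueError
    when the stride does not divide the span evenly; with strict=False,
    round down instead.
    """
    span = input_size - kernel_size + 2 * padding
    if span < 0:
        raise ValueError("kernel is larger than the padded input")
    if strict and span % stride != 0:
        raise ValueError(f"{span} is not divisible by stride {stride}")
    return span // stride + 1


# AlexNet's first convolution: 96 kernels, 11x11, stride 4, assumed no padding.
side = conv_output_size(227, 11, stride=4)
print(f"{side}x{side}x96")  # 55x55x96

# A 224-pixel input leaves a remainder: (224 - 11) / 4 = 53.25.
try:
    conv_output_size(224, 11, stride=4)
except ValueError as err:
    print("224 input:", err)  # 224 input: 213 is not divisible by stride 4

# Rounding down instead gives the 54x54 figure.
print(conv_output_size(224, 11, stride=4, strict=False))  # 54
```

With `strict=False` the helper rounds down, as frameworks such as PyTorch do for convolution output shapes, which is where the 54×54 size for a 224-pixel input comes from.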