Multivariate kernel density estimation

It can be viewed as a generalisation of histogram density estimation with improved statistical properties.

Apart from histograms, other types of density estimators include parametric, spline, wavelet and Fourier series.

Kernel density estimators were first introduced in the scientific literature for univariate data in the 1950s and 1960s[1][2] and subsequently have been widely adopted.

Based on research carried out in the 1990s and 2000s, multivariate kernel density estimation has reached a level of maturity comparable to its univariate counterparts.

This requires the choice of an anchor point (the lower left corner of the histogram grid).

[6] One possible solution to this anchor point placement problem is to remove the histogram binning grid completely.

In the left figure below, a kernel (represented by the grey lines) is centred at each of the 50 data points above.

The most striking difference between kernel density estimates and histograms is that the former are easier to interpret since they do not contain artifices induced by a binning grid.

Aggregating the individually smoothed contributions gives an overall picture of the structure of the data and its density function.

In the details to follow, we show that this approach leads to a reasonable estimate of the underlying density function.

The previous figure is a graphical representation of kernel density estimate, which we now define in an exact manner.

Let x1, x2, ..., xn be a sample of d-variate random vectors drawn from a common distribution described by the density function ƒ.

On the other hand, the choice of the bandwidth matrix H is the single most important factor affecting its accuracy since it controls the amount and orientation of smoothing induced.

[7][8] The most commonly used optimality criterion for selecting a bandwidth matrix is the MISE or mean integrated squared error This in general does not possess a closed-form expression, so it is usual to use its asymptotic approximation (AMISE) as a proxy where The quality of the AMISE approximation to the MISE[3]: 97 is given by where o indicates the usual small o notation.

Heuristically this statement implies that the AMISE is a 'good' approximation of the MISE as the sample size n → ∞.

It can be shown that any reasonable bandwidth selector H has H = O(n−2/(d+4)) where the big O notation is applied elementwise.

[9][10] These references also contain algorithms on optimal estimation of the pilot bandwidth matrix G and establish that

[10][11] These references also contain algorithms on optimal estimation of the pilot bandwidth matrix G and establish that

Using the standard mean squared value decomposition we have that the MSE tends to 0, implying that the kernel density estimator is (mean square) consistent and hence converges in probability to the true density f. The rate of convergence of the MSE to 0 is the necessarily the same as the MISE rate noted previously O(n−4/(d+4)), hence the convergence rate of the density estimator to f is Op(n−2/(d+4)) where Op denotes order in probability.

This dataset (included in the base distribution of R) contains 272 records with two measurements each: the duration time of an eruption (minutes) and the waiting time until the next eruption (minutes) of the Old Faithful Geyser in Yellowstone National Park, USA.

The code fragment computes the kernel density estimate with the plug-in bandwidth matrix

The routine is an automatic bandwidth selection method specifically designed for a second order Gaussian kernel.

[14] The figure shows the joint density estimate that results from using the automatically selected bandwidth.

There are alternative optimality criteria, which attempt to cover cases where MISE is not an appropriate measure.

[4]: 34–37, 78 The equivalent L1 measure, Mean Integrated Absolute Error, is Its mathematical analysis is considerably more difficult than the MISE ones.

[16] Likelihood error criteria include those based on the Mean Kullback–Leibler divergence and the Mean Hellinger distance The KL can be estimated using a cross-validation method, although KL cross-validation selectors can be sub-optimal even if it remains consistent for bounded density functions.

[19] Recent research has shown that the kernel and its bandwidth can both be optimally and objectively chosen from the input data itself without making any assumptions about the form of the distribution.

[20] The resulting kernel density estimate converges rapidly to the true probability distribution as samples are added: at a rate close to the

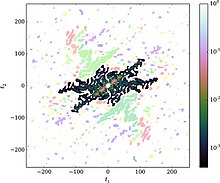

However, it has been found that the ECF can be approximated accurately using a non-uniform fast Fourier transform (nuFFT) method,[21][22] which increases the calculation speed by several orders of magnitude (depending on the dimensionality of the problem).

The combination of this objective KDE method and the nuFFT-based ECF approximation has been referred to as fastKDE in the literature.