RGB color model

RGB is a device-dependent color model: different devices detect or reproduce a given RGB value differently, since the color elements (such as phosphors or dyes) and their response to the individual red, green, and blue levels vary from manufacturer to manufacturer, or even in the same device over time.

Typical RGB output devices are TV sets of various technologies (CRT, LCD, plasma, OLED, quantum dots, etc.).

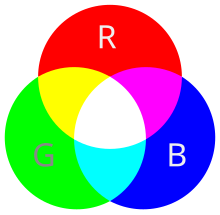

There is no common color component among magenta, cyan and yellow, thus rendering a spectrum of zero intensity: black.

The choice of primary colors is related to the physiology of the human eye; good primaries are stimuli that maximize the difference between the responses of the cone cells of the human retina to light of different wavelengths, and that thereby make a large color triangle.

The first experiments with RGB in early color photography were made in 1861 by Maxwell himself, and involved the process of combining three color-filtered separate takes.

Color photography by taking three separate plates was used by other pioneers, such as the Russian Sergey Prokudin-Gorsky in the period 1909 through 1915.

Before the development of practical electronic TV, there were patents on mechanically scanned color systems as early as 1889 in Russia.

[12][13][14] More recently, color wheels have been used in field-sequential projection TV receivers based on the Texas Instruments monochrome DLP imager.

The modern RGB shadow mask technology for color CRT displays was patented by Werner Flechsig in Germany in 1938.

The first manufacturer of a truecolor graphics card for PCs (the TARGA) was Truevision in 1987, but it was not until the arrival of the Video Graphics Array (VGA) in 1987 that RGB became popular, mainly due to the analog signals in the connection between the adapter and the monitor which allowed a very wide range of RGB colors.

At common viewing distance, the separate sources are indistinguishable, which the eye interprets as a given solid color.

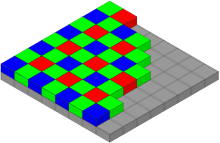

During digital image processing each pixel can be represented in the computer memory or interface hardware (for example, a graphics card) as binary values for the red, green, and blue color components.
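As a minimal sketch of such a binary representation, the following Python packs three 8-bit components into a single integer. The `0xRRGGBB` byte order and the function names `pack_rgb` and `unpack_rgb` are assumptions made for illustration; actual memory layouts differ between hardware and file formats.

```python
def pack_rgb(r: int, g: int, b: int) -> int:
    """Pack three 8-bit components into one 24-bit integer (0xRRGGBB)."""
    for value in (r, g, b):
        if not 0 <= value <= 255:
            raise ValueError("each component must be in 0..255")
    return (r << 16) | (g << 8) | b


def unpack_rgb(pixel: int) -> tuple[int, int, int]:
    """Recover the (r, g, b) components from a packed 0xRRGGBB integer."""
    return (pixel >> 16) & 0xFF, (pixel >> 8) & 0xFF, pixel & 0xFF


orange = pack_rgb(255, 128, 0)
print(f"{orange:06X}")     # FF8000
print(unpack_rgb(orange))  # (255, 128, 0)
```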

Outside Europe, RGB is not very popular as a video signal format; S-Video takes that spot in most non-European regions.

Driven by software, the CPU (or other specialized chips) writes the appropriate bytes into the video memory to define the image.

Of course, before displaying, the CLUT has to be loaded with R, G, and B values that define the palette of colors required for each image to be rendered.

Some video applications store such palettes in PAL files (Age of Empires game, for example, uses over half-a-dozen[18]) and can combine CLUTs on screen.
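The lookup step can be illustrated with a small sketch. The four-entry palette, the tiny indexed image, and the helper `resolve` are all hypothetical; a real CLUT typically holds 16 or 256 entries and is resolved by the display hardware rather than in software.

```python
# Hypothetical 4-entry palette (the CLUT): index -> (R, G, B)
clut = [
    (0, 0, 0),        # 0: black
    (255, 0, 0),      # 1: red
    (0, 255, 0),      # 2: green
    (255, 255, 255),  # 3: white
]

# A 2x3 indexed image: each pixel stores only a palette index
indexed_image = [
    [0, 1, 2],
    [3, 1, 0],
]


def resolve(image, palette):
    """Replace every palette index with its RGB triplet."""
    return [[palette[index] for index in row] for row in image]


rgb_image = resolve(indexed_image, clut)
print(rgb_image[1][0])  # (255, 255, 255)
```

Loading a different palette into `clut` changes every displayed color without touching the stored indices.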

With this system, 16,777,216 (256³ or 2²⁴) discrete combinations of R, G, and B values are allowed, providing millions of different (though not necessarily distinguishable) hue, saturation and lightness shades.

Increased shading has been implemented in various ways; some formats, such as .png and .tga files among others, use a fourth grayscale color channel as a masking layer, an arrangement often called RGB32.

Similarly, the intensity of the output on TV and computer display devices is not directly proportional to the R, G, and B applied electric signals (or file data values which drive them through digital-to-analog converters).

On a typical standard 2.2-gamma CRT display, an input intensity RGB value of (0.5, 0.5, 0.5) only outputs about 22% of full brightness (1.0, 1.0, 1.0), instead of 50%.
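The 22% figure can be checked with a short calculation. This sketch assumes an idealized pure power-law response with exponent 2.2; real displays and standards such as sRGB deviate from it slightly. The function names are illustrative only.

```python
GAMMA = 2.2  # assumed display gamma, as in the CRT example above


def displayed_intensity(signal: float, gamma: float = GAMMA) -> float:
    """Relative light output for a normalized input signal in 0..1."""
    return signal ** gamma


def gamma_encode(intensity: float, gamma: float = GAMMA) -> float:
    """Signal value needed to obtain a given relative light output."""
    return intensity ** (1.0 / gamma)


print(round(displayed_intensity(0.5), 3))  # 0.218
print(round(gamma_encode(0.5), 3))         # 0.73
```

Under this assumption, a signal of about 0.73 rather than 0.5 is needed to obtain half of full brightness.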

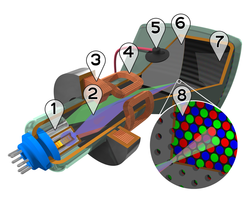

With the arrival of commercially viable charge-coupled device (CCD) technology in the 1980s, first, the pickup tubes were replaced with this kind of sensor.

Later, higher scale integration electronics was applied (mainly by Sony), simplifying and even removing the intermediate optics, thereby reducing the size of home video cameras and eventually leading to the development of full camcorders.

Photographic digital cameras that use a CMOS or CCD image sensor often operate with some variation of the RGB model.

Also, other processes used to be applied in order to map the camera RGB measurements into a standard color space such as sRGB.

Due to heating problems, the worst of them being the potential destruction of the scanned film, this technology was later replaced by non-heating light sources such as color LEDs.

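These ranges may be quantified in several different ways: as a fraction from 0 to 1, as a percentage from 0% to 100%, or as an integer from 0 to 255 (8 bits per component) or from 0 to 65535 (16 bits per component). For example, brightest saturated red is written in these notations as (1.0, 0.0, 0.0), (100%, 0%, 0%), (255, 0, 0) (or #FF0000 in hexadecimal), and (65535, 0, 0), respectively.

In many environments, the component values within the ranges are not managed as linear (that is, the numbers are nonlinearly related to the intensities that they represent), as in digital cameras and TV broadcasting and receiving due to gamma correction, for example.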

The main characteristic of all of them is the quantization of the possible values per component (technically a sample) by using only integer numbers within some range, usually from 0 to some power of two minus one (2ⁿ − 1) to fit them into some bit groupings.
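A minimal sketch of this quantization maps a normalized component in the range 0.0 to 1.0 onto the integers 0 to 2ⁿ − 1. The helper names `quantize`, `dequantize`, and `to_hex` are hypothetical, and rounding to the nearest level is one possible choice among several.

```python
def quantize(value: float, bits: int) -> int:
    """Map a normalized component (0.0..1.0) to an integer in 0..2**bits - 1."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in 0.0..1.0")
    max_level = (1 << bits) - 1
    return round(value * max_level)


def dequantize(level: int, bits: int) -> float:
    """Map an integer level back to the normalized range 0.0..1.0."""
    return level / ((1 << bits) - 1)


def to_hex(r: float, g: float, b: float) -> str:
    """Write a normalized RGB triplet in 8-bit hexadecimal notation."""
    return "#" + "".join(f"{quantize(c, 8):02X}" for c in (r, g, b))


print(quantize(1.0, 8))       # 255
print(quantize(1.0, 16))      # 65535
print(quantize(0.2, 8))       # 51
print(to_hex(1.0, 0.0, 0.0))  # #FF0000
```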

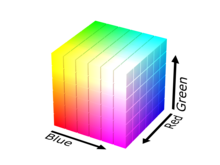

An RGB triplet (r,g,b) represents the three-dimensional coordinate of the point of the given color within the cube or its faces or along its edges.

[33] All luminance–chrominance formats used in the different TV and video standards such as YIQ for NTSC, YUV for PAL, YDbDr for SECAM, and YPbPr for component video use color difference signals, by which RGB color images can be encoded for broadcasting/recording and later decoded into RGB again to display them.
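The encode and decode round trip can be sketched with unscaled color difference signals. The luma weights below are those of ITU-R BT.601, chosen here as an assumption; each of the formats named above applies its own scaling and offsets to the B − Y and R − Y differences, which this sketch omits.

```python
# Luma weights from ITU-R BT.601 (an assumption; other standards differ)
KR, KG, KB = 0.299, 0.587, 0.114


def encode(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Convert RGB to luma plus two color difference signals (Y, B-Y, R-Y)."""
    y = KR * r + KG * g + KB * b
    return y, b - y, r - y


def decode(y: float, b_y: float, r_y: float) -> tuple[float, float, float]:
    """Recover RGB from luma and the two color difference signals."""
    r = y + r_y
    b = y + b_y
    g = (y - KR * r - KB * b) / KG
    return r, g, b


print([round(v, 3) for v in encode(1.0, 0.0, 0.0)])  # [0.299, -0.299, 0.701]
print([round(v, 3) for v in decode(*encode(0.2, 0.6, 0.9))])  # [0.2, 0.6, 0.9]
```

Green is not transmitted separately: it is reconstructed from the luma once red and blue are known.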

The use of YCbCr also allows computers to perform lossy subsampling with the chrominance channels (typically to 4:2:2 or 4:1:1 ratios), which reduces the resultant file size.
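A rough sketch of 4:2:2-style subsampling on one row of chroma samples follows. Averaging horizontal pairs and repeating samples on reconstruction are simplifying assumptions; real codecs may use other filters. The function names and sample values are hypothetical, and the row length is assumed to be even.

```python
def subsample_422(chroma_row):
    """Average each horizontal pair of chroma samples (4:2:2-style)."""
    return [
        (chroma_row[i] + chroma_row[i + 1]) / 2
        for i in range(0, len(chroma_row) - 1, 2)
    ]


def upsample_422(half_row):
    """Rebuild a full-width row by repeating each stored sample twice."""
    return [sample for sample in half_row for _ in range(2)]


cb_row = [100, 104, 90, 94, 200, 210, 50, 50]
stored = subsample_422(cb_row)
print(stored)                # [102.0, 92.0, 205.0, 50.0]
print(upsample_422(stored))  # [102.0, 102.0, 92.0, 92.0, 205.0, 205.0, 50.0, 50.0]
```

With the luma row kept at full width, eight pixels need 8 + 4 + 4 = 16 samples instead of 24, a saving of one third; the reconstruction is lossy because the original per-pixel chroma values cannot be recovered.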