Real number

[b][1] The real numbers are fundamental in calculus (and in many other branches of mathematics), in particular through their role in the classical definitions of limits, continuity, and derivatives.

A current axiomatic definition is that the real numbers form the unique (up to isomorphism) Dedekind-complete ordered field.
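Dedekind completeness means that every nonempty set of reals with an upper bound has a least upper bound. The following minimal Python sketch brackets the least upper bound of the set of rationals q with q² < 2. The function name `sup_bracket` is illustrative, and the sketch relies only on the standard `fractions` module. The rational endpoints close in on √2 from both sides, but no rational number squares to 2, so the supremum itself lies outside the rationals. In the reals it exists and equals √2.

```python
from fractions import Fraction


def sup_bracket(steps: int) -> tuple[Fraction, Fraction]:
    """Bisection bracket for the least upper bound of S = {q in Q : q*q < 2}.

    Invariant: lo belongs to S (lo*lo < 2), and hi is a positive upper bound
    of S (hi*hi > 2). No rational squares to exactly 2, so the two branches
    cover every midpoint.
    """
    lo, hi = Fraction(1), Fraction(2)
    for _ in range(steps):
        mid = (lo + hi) / 2
        if mid * mid < 2:
            lo = mid  # mid is in S, so the supremum is at least mid
        else:
            hi = mid  # mid*mid > 2, so mid is an upper bound of S
    return lo, hi


lo, hi = sup_bracket(40)
print(float(lo), float(hi))  # both close to 1.41421356...
print(hi - lo)               # 1/1099511627776, i.e. 2**-40
```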

See Construction of the real numbers for details about these formal definitions and the proof of their equivalence.

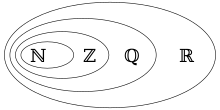

The identifications consist of not distinguishing the source and the image of each injective homomorphism, and thus writing $\mathbb{N} \subset \mathbb{Z} \subset \mathbb{Q} \subset \mathbb{R}$. These identifications are formally abuses of notation (since, formally, a rational number is an equivalence class of pairs of integers, and a real number is an equivalence class of Cauchy sequences), and are generally harmless.

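For a number x whose decimal representation extends k places to the left of the decimal point, the standard notation is the juxtaposition of the digits $b_k b_{k-1} \cdots b_0 . a_1 a_2 \cdots$, in descending order of powers of ten, with the nonnegative and negative powers of ten separated by a decimal point; it represents the infinite series $x = b_k 10^k + b_{k-1} 10^{k-1} + \cdots + b_0 + \frac{a_1}{10} + \frac{a_2}{10^2} + \cdots$.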
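As an illustration, assume the usual naming of integer-part digits b_k, …, b_0 and fractional digits a_1, a_2, …. The sketch below extracts the first n fractional digits of a nonnegative rational number by repeated multiplication by 10, then re-evaluates the truncated series exactly. The helper names `decimal_digits` and `partial_sum` are hypothetical, and only the standard `fractions` module is used. The truncation after n fractional digits is always within 10^−n of x.

```python
from fractions import Fraction


def decimal_digits(x: Fraction, n: int) -> tuple[list[int], list[int]]:
    """Return the integer digits b_k..b_0 and the first n fractional digits a_1..a_n of x >= 0."""
    if x < 0:
        raise ValueError("x must be non-negative")
    int_part = x.numerator // x.denominator
    int_digits = [int(d) for d in str(int_part)]  # b_k, ..., b_0
    frac = x - int_part
    frac_digits = []
    for _ in range(n):
        frac *= 10
        d = frac.numerator // frac.denominator  # next fractional digit a_i
        frac_digits.append(d)
        frac -= d
    return int_digits, frac_digits


def partial_sum(int_digits: list[int], frac_digits: list[int]) -> Fraction:
    """Evaluate b_k 10^k + ... + b_0 + a_1/10 + ... + a_n/10^n exactly."""
    k = len(int_digits) - 1
    total = sum(Fraction(b) * 10 ** (k - i) for i, b in enumerate(int_digits))
    total += sum(Fraction(a, 10 ** (i + 1)) for i, a in enumerate(frac_digits))
    return total


b, a = decimal_digits(Fraction(22, 7), 6)
print(b, a)               # [3] [1, 4, 2, 8, 5, 7]
print(partial_sum(b, a))  # 3142857/1000000
```

Here 22/7 = 3.142857142857…, so the remaining error after six digits is 1/7000000.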

In summary, there is a bijection between the real numbers and the decimal representations that do not end with infinitely many trailing 9s.
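The trailing-9 restriction is needed because an expansion ending in infinitely many 9s denotes the same real number as one ending in infinitely many 0s; for instance, 0.999… and 1.000… both denote 1. A short exact check in Python shows that the truncation with n nines falls short of 1 by exactly 10^−n. The helper `nines` is hypothetical. That gap becomes smaller than any ε > 0, so the limit is 1.

```python
from fractions import Fraction


def nines(n: int) -> Fraction:
    """Exact value of the truncation 0.99...9 with n nines: sum of 9/10**i for i = 1..n."""
    return sum((Fraction(9, 10**i) for i in range(1, n + 1)), Fraction(0))


for n in (1, 5, 20):
    # The gap to 1 is exactly 1/10**n.
    print(n, 1 - nines(n))
```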

More formally, the reals are complete (in the sense of metric spaces or uniform spaces, which is a different sense than the Dedekind completeness of the order in the previous section): A sequence $(x_n)$ of real numbers is called a Cauchy sequence if for any ε > 0 there exists an integer N (possibly depending on ε) such that the distance $|x_n - x_m|$ is less than ε for all n and m that are both greater than N. This definition, originally provided by Cauchy, formalizes the fact that the $x_n$ eventually come and remain arbitrarily close to each other.
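The following Python sketch uses exact `fractions.Fraction` arithmetic, and the helper name `newton_sqrt2` is hypothetical. Newton's iteration x_{n+1} = (x_n + 2/x_n)/2 from x_0 = 1 produces only rational numbers: 3/2, 17/12, 577/408, and so on. From n = 1 on, the terms decrease and stay above √2. Therefore, for all n, m ≥ N ≥ 1, the distance |x_n − x_m| is at most x_N − √2 = (x_N² − 2)/(x_N + √2) < (x_N² − 2)/2. The code prints this computable bound for each N, and it shrinks below any given ε. The sequence is therefore Cauchy, yet its limit √2 is not rational, which is exactly the kind of gap that completeness of the reals rules out.

```python
from fractions import Fraction


def newton_sqrt2(steps: int) -> list[Fraction]:
    """Rational iterates x_{n+1} = (x_n + 2/x_n) / 2 starting from x_0 = 1."""
    xs = [Fraction(1)]
    for _ in range(steps):
        x = xs[-1]
        xs.append((x + 2 / x) / 2)
    return xs


xs = newton_sqrt2(6)
for N in range(1, len(xs)):
    # For n, m >= N >= 1: |x_n - x_m| <= x_N - sqrt(2) < (x_N**2 - 2) / 2.
    eps_bound = (xs[N] ** 2 - 2) / 2
    print(N, xs[N], float(eps_bound))
```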

The completeness property of the reals is the basis on which calculus, and more generally mathematical analysis, are built.

For example, the standard series of the exponential function, $e^x = \sum_{n=0}^{\infty} \frac{x^n}{n!}$, converges to a real number for every x, because the sums $\sum_{n=N}^{M} \frac{x^n}{n!}$ can be made arbitrarily small (independently of M) by choosing N sufficiently large.
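The estimate can be made concrete. If N ≥ 2|x|, each term of the tail is at most half the previous one, so the sum from N to M is bounded by 2|x|^N/N! whatever the value of M. The Python sketch below evaluates these tail sums for x = 3 and reports the largest one found over a range of M for each N. The helper `tail_sum` is hypothetical, and the sketch uses exact rational arithmetic via the standard `fractions` and `math` modules.

```python
import math
from fractions import Fraction


def tail_sum(x: Fraction, N: int, M: int) -> Fraction:
    """Exact value of sum_{n=N}^{M} x**n / n!."""
    return sum((Fraction(x) ** n / math.factorial(n) for n in range(N, M + 1)), Fraction(0))


x = Fraction(3)
for N in (10, 20, 30):
    # Largest tail over M = N .. N+49; it stays small however large M gets.
    worst = max(abs(tail_sum(x, N, M)) for M in range(N, N + 50))
    print(N, float(worst))
```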

The real numbers are often described as "the complete ordered field", a phrase that can be interpreted in several ways.

The real numbers form a metric space: the distance between x and y is defined as the absolute value |x − y|.
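For illustration only, the metric axioms can be spot-checked for d(x, y) = |x − y| on random rational samples. These axioms are: d(x, y) = 0 exactly when x = y, symmetry, and the triangle inequality d(x, z) ≤ d(x, y) + d(y, z). This Python sketch uses a hypothetical `dist` helper and a fixed random seed. It is a sanity check, not a proof; the triangle inequality for the absolute value holds for all reals.

```python
import random
from fractions import Fraction


def dist(x: Fraction, y: Fraction) -> Fraction:
    """Standard metric on the real line: d(x, y) = |x - y|."""
    return abs(x - y)


rng = random.Random(0)  # fixed seed for a reproducible sample
pts = [Fraction(rng.randint(-1000, 1000), rng.randint(1, 100)) for _ in range(50)]
for x in pts:
    for y in pts:
        assert dist(x, y) == dist(y, x)            # symmetry
        assert (dist(x, y) == 0) == (x == y)       # zero distance only for equal points
        for z in pts[:10]:
            assert dist(x, z) <= dist(x, y) + dist(y, z)  # triangle inequality
print("metric axioms hold on the sample")
```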

There are various properties that uniquely specify the real line as a topological space; for instance, every unbounded, connected, and separable order topology is necessarily homeomorphic to the reals.

If V=L is assumed in addition to the axioms of ZF, a well ordering of the real numbers can be shown to be explicitly definable by a formula.

The concept of irrationality was implicitly accepted by early Indian mathematicians such as Manava (c. 750–690 BC), who was aware that the square roots of certain numbers, such as 2 and 61, could not be exactly determined.

[7] Around 500 BC, the Greek mathematicians led by Pythagoras also realized that the square root of 2 is irrational.

Two lengths are "commensurable", if there is a unit in which they are both measured by integers, that is, in modern terminology, if their ratio is a rational number.
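When both lengths are rational multiples of a common length, the largest common unit can be computed directly. Write the two lengths over a common denominator and take the greatest common divisor of the numerators. The following is a minimal Python sketch, with the hypothetical helper `common_unit` and the standard `fractions` and `math` modules. No such unit exists for the side and the diagonal of a square, whose ratio is √2.

```python
from fractions import Fraction
from math import gcd


def common_unit(a: Fraction, b: Fraction) -> Fraction:
    """Largest unit u such that a/u and b/u are both integers (a, b > 0 rational)."""
    # Put both lengths over the common denominator d, then take the gcd of the numerators.
    d = a.denominator * b.denominator
    return Fraction(gcd(a.numerator * b.denominator, b.numerator * a.denominator), d)


a, b = Fraction(3, 4), Fraction(5, 6)
u = common_unit(a, b)
print(u, a / u, b / u)  # 1/12 9 10
```

For lengths 3/4 and 5/6 the common unit is 1/12, which measures them as 9 and 10 units.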

Eudoxus of Cnidus (c. 390–340 BC) provided a definition of the equality of two irrational proportions in a way that is similar to Dedekind cuts (introduced more than 2,000 years later), except that he did not use any arithmetic operation other than multiplication of a length by a natural number (see Eudoxus of Cnidus).

[10] In Europe, such numbers, not commensurable with the numerical unit, were called irrational or surd ("deaf").

In the 16th century, Simon Stevin created the basis for modern decimal notation, and insisted that there is no difference between rational and irrational numbers in this regard.

In the 17th century, Descartes introduced the term "real" to describe roots of a polynomial, distinguishing them from "imaginary" numbers.

[12] Liouville (1840) showed that neither $e$ nor $e^2$ can be a root of an integer quadratic equation, and then established the existence of transcendental numbers; Cantor (1873) extended and greatly simplified this proof.

[15] The concept that many points existed between rational numbers, such as the square root of 2, was well known to the ancient Greeks.

In 1854 Bernhard Riemann highlighted the limitations of calculus in the method of Fourier series, showing the need for a rigorous definition of the real numbers.

Thirdly, these definitions involve quantification over infinite sets, which cannot be formalized in classical first-order predicate logic.

Cantor's first uncountability proof was different from his famous diagonal argument published in 1891.
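For comparison, the 1891 diagonal argument can be sketched on a finite list. Given decimal expansions of numbers in [0, 1), build a new expansion whose i-th digit differs from the i-th digit of the i-th number, so it differs from every number on the list. In the actual argument the list is infinite, and the same construction shows that it cannot contain every real. The Python sketch below uses a hypothetical helper `diagonal`. It uses only the digits 4 and 5, so the new expansion contains no 0s or 9s and cannot be an alternative expansion of a listed number (as with 0.0999… = 0.1).

```python
def diagonal(rows: list[str]) -> str:
    """Given n digit strings (each of length >= n), return a string differing from row i at position i."""
    out = []
    for i, row in enumerate(rows):
        # Pick 5 unless the diagonal digit is already 5; never produce 0 or 9.
        out.append("5" if row[i] != "5" else "4")
    return "".join(out)


rows = ["1415926", "7182818", "4142135", "7320508", "2360679", "5772156", "6931471"]
d = diagonal(rows)
print(d, d in rows)
```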

[19] Another approach is to start from some rigorous axiomatization of Euclidean geometry (say of Hilbert or of Tarski), and then define the real number system geometrically.

In fact the fundamental physical theories such as classical mechanics, electromagnetism, quantum mechanics, general relativity, and the standard model are described using mathematical structures, typically smooth manifolds or Hilbert spaces, that are based on the real numbers, although actual measurements of physical quantities are of finite accuracy and precision.

Physicists have occasionally suggested that a more fundamental theory would replace the real numbers with quantities that do not form a continuum, but such proposals remain speculative.

Instead, computers typically work with finite-precision approximations called floating-point numbers, a representation similar to scientific notation.
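A few lines of Python illustrate the consequence. On typical platforms Python's `float` is an IEEE 754 double-precision binary number, so decimal fractions such as 0.1 are stored only approximately, and `fractions.Fraction` can display the exact binary value actually stored. Exact rational arithmetic avoids these rounding errors, although it still cannot represent irrational numbers such as √2.

```python
import math
from fractions import Fraction

# 0.1, 0.2 and 0.3 are each rounded to the nearest binary double,
# and the rounding errors do not cancel.
print(0.1 + 0.2 == 0.3)            # False
# Exact value of the double nearest to 0.1: a fraction with denominator 2**55.
print(Fraction(0.1))               # 3602879701896397/36028797018963968
# sqrt(2) is irrational, so math.sqrt returns a rounded approximation.
print(math.sqrt(2) ** 2)           # 2.0000000000000004
# Exact rational arithmetic has no rounding error for these decimal fractions.
print(Fraction(1, 10) + Fraction(2, 10) == Fraction(3, 10))  # True
```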

Alternatively, computer algebra systems can operate on irrational quantities exactly by manipulating symbolic formulas for them (such as