Rule of succession

In probability theory, the rule of succession is a formula introduced in the 18th century by Pierre-Simon Laplace in the course of treating the sunrise problem.

More abstractly: if X1, ..., Xn+1 are conditionally independent random variables that each can assume the value 0 or 1, then, if we know nothing more about them,

$$P(X_{n+1} = 1 \mid X_1 + \cdots + X_n = s) = \frac{s+1}{n+2}.$$

Since we have the prior knowledge that we are looking at an experiment for which both success and failure are possible, our estimate is as if we had observed one success and one failure for sure before we even started the experiments.

Nevertheless, if we had not known from the start that both success and failure are possible, then we would have had to assign

$$P'(X_{n+1} = 1 \mid X_1 + \cdots + X_n = s) = \frac{s}{n}.$$

But see Mathematical details, below, for an analysis of its validity.

As the number of observations increases, the estimates with and without this prior knowledge get more and more similar, which is intuitively clear: the more data we have, the less importance should be assigned to our prior information.
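The convergence described above can be checked numerically. The following is a minimal sketch, assuming the comparison estimate is the plain relative frequency s/n; the function names `rule_of_succession` and `relative_frequency` are illustrative and not part of the original text.

```python
from fractions import Fraction


def rule_of_succession(s: int, n: int) -> Fraction:
    """Laplace's estimate (s + 1) / (n + 2) that the next trial succeeds."""
    if not 0 <= s <= n:
        raise ValueError("need 0 <= s <= n")
    return Fraction(s + 1, n + 2)


def relative_frequency(s: int, n: int) -> Fraction:
    """Observed frequency s / n (assumed comparison estimate; needs n > 0)."""
    return Fraction(s, n)


# Keep the observed success rate fixed at 70% and let the sample grow.
for n in (10, 100, 10_000):
    s = 7 * n // 10
    laplace = rule_of_succession(s, n)
    freq = relative_frequency(s, n)
    print(n, float(laplace), float(freq), float(abs(laplace - freq)))
```

With no data at all (s = 0, n = 0) the first function returns 1/2, while the relative frequency is undefined, which is one way to see what the two "pseudo-observations" contribute.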

Laplace used the rule of succession to calculate the probability that the Sun will rise tomorrow, given that it has risen every day for the past 5000 years.

One obtains a very large factor of approximately 5000 × 365.25, which gives odds of about 1,826,200 to 1 in favour of the Sun rising tomorrow.
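The arithmetic can be reproduced in a few lines. This is a sketch that assumes exactly 5000 years of 365.25 days each; the result depends on the day count assumed, so it lands close to, but not exactly on, the figure quoted above.

```python
from fractions import Fraction

# Assumption: 5000 years of 365.25 days, all of them observed sunrises.
DAYS_PER_YEAR = Fraction(36525, 100)
n = int(5000 * DAYS_PER_YEAR)      # number of observed days
s = n                              # the Sun rose on every one of them

p_rise = Fraction(s + 1, n + 2)    # rule of succession
odds_for = p_rise / (1 - p_rise)   # odds in favour of a sunrise tomorrow

print(n)                # 1826250
print(float(p_rise))
print(odds_for)         # 1826251, i.e. n + 1 to 1
```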

However, as the mathematical details below show, the basic assumption for using the rule of succession would be that we have no prior knowledge about the question of whether the Sun will or will not rise tomorrow, except that it can do either.

Laplace knew this well, and he wrote to conclude the sunrise example: "But this number is far greater for him who, seeing in the totality of phenomena the principle regulating the days and seasons, realizes that nothing at the present moment can arrest the course of it."

In the 1940s, Rudolf Carnap investigated a probability-based theory of inductive reasoning, and developed measures of degree of confirmation, which he considered as alternatives to Laplace's rule of succession.

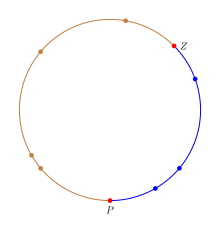

This provides an exact mapping from success/failure experiments with probability of success p to uniformly random points on the circle.

In the figure the success fraction is colored blue to differentiate it from the rest of the circle and the points P and Z are highlighted in red.

The proportion p is assigned a uniform distribution to describe the uncertainty about its true value.

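Suppose these Xs are conditionally independent given p. We can use Bayes' theorem to find the conditional probability distribution of p given the data Xi, i = 1, ..., n. For the "prior" (i.e., marginal) probability measure of p we assigned a uniform distribution over the open interval (0,1):

$$f(p) = \begin{cases} 1 & \text{for } 0 < p < 1 \\ 0 & \text{otherwise.} \end{cases}$$

For the likelihood of a given p under our observations, we use the likelihood function

$$L(p) = P(X_1 = x_1, \ldots, X_n = x_n \mid p) = \prod_{i=1}^{n} p^{x_i} (1-p)^{1-x_i} = p^s (1-p)^{n-s},$$

where s = x1 + ... + xn is the number of "successes" and n is the number of trials (we are using capital X to denote a random variable and lower-case x as the data actually observed).

Putting it all together, we can calculate the posterior:

$$f(p \mid X_1 = x_1, \ldots, X_n = x_n) = \frac{L(p)\, f(p)}{\int_0^1 L(r)\, f(r)\, dr} = \frac{p^s (1-p)^{n-s}}{\int_0^1 r^s (1-r)^{n-s}\, dr}.$$

To get the normalizing constant, we find

$$\int_0^1 r^s (1-r)^{n-s}\, dr = \frac{s!\,(n-s)!}{(n+1)!}$$

(see beta function for more on integrals of this form).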
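As a check on the derivation, the posterior mean of p can be computed exactly from integrals of this form. The sketch below relies on the standard identity that the integral of p^a (1 - p)^b over [0, 1] equals a! b! / (a + b + 1)! for non-negative integers a and b; the helper names `beta_integral` and `posterior_mean` are illustrative.

```python
from fractions import Fraction
from math import factorial


def beta_integral(a: int, b: int) -> Fraction:
    """Exact integral of p**a * (1 - p)**b over [0, 1] for integers a, b >= 0."""
    return Fraction(factorial(a) * factorial(b), factorial(a + b + 1))


def posterior_mean(s: int, n: int) -> Fraction:
    """E[p | s successes in n trials] under a uniform prior on (0, 1)."""
    numerator = beta_integral(s + 1, n - s)   # integral of p * p**s * (1-p)**(n-s)
    normaliser = beta_integral(s, n - s)      # integral of p**s * (1-p)**(n-s)
    return numerator / normaliser


# The posterior mean agrees with (s + 1) / (n + 2), including s = 0 and s = n.
for s, n in [(0, 0), (0, 5), (3, 10), (10, 10)]:
    assert posterior_mean(s, n) == Fraction(s + 1, n + 2)
    print(s, n, posterior_mean(s, n))
```

Because the probability of the next success given p is p itself, this posterior mean is the predictive probability for the next trial.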

This means that we cannot use this form of the posterior distribution to calculate the probability of the next observation succeeding when s = 0 or s = n. This sheds more light on the information contained in the rule of succession: it can be thought of as expressing the prior assumption that, if sampling were continued indefinitely, we would eventually observe at least one success and at least one failure in the sample.

To deal with the "complete ignorance" case when s = 0 or s = n, we first go back to the hypergeometric distribution.

An approximate analytical expression for large N is obtained by first approximating the product term and then replacing the summation in the numerator with an integral. The same procedure is followed for the denominator, but the process is a bit more tricky, as the integral is harder to evaluate. Plugging these approximations into the expectation gives the final answer, in which the natural logarithm ln has been converted to the base-10 logarithm for ease of calculation.

In passing to the limit of infinite N (for the simpler analytic properties) we are "throwing away" a piece of very important information.

The corresponding results are found for the s=n case by switching labels, and then subtracting the probability from 1.

This section gives a heuristic derivation similar to that in Probability Theory: The Logic of Science.

Thus, the way to proceed from here is with great care: to re-derive the results from first principles, rather than to introduce an intuitively sensible generalisation.

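Then the joint posterior distribution of the probabilities p1, ..., pm is given by:

$$f(p_1, \ldots, p_m \mid n_1, \ldots, n_m, I_m) = \begin{cases} \dfrac{\Gamma(n+m)}{\prod_{i=1}^{m} \Gamma(n_i+1)}\; p_1^{n_1} \cdots p_m^{n_m} & \text{if } \sum_{i=1}^{m} p_i = 1 \\ 0 & \text{otherwise,} \end{cases}$$

where n_i is the number of times category i was observed and n = n_1 + ... + n_m is the total number of observations. To get the generalised rule of succession, note that the probability of observing category i on the next observation, conditional on the pi, is just pi, so we simply require its expectation.

The result, using the properties of the Dirichlet distribution, is:

$$P(A_i \mid n_1, \ldots, n_m, I_m) = \frac{n_i + 1}{n + m},$$

where A_i denotes the event that the next observation falls in category i. This solution reduces to the probability that would be assigned using the principle of indifference before any observations are made (i.e. n = 0), consistent with the original rule of succession.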
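The generalised rule is easy to evaluate directly. The sketch below uses its standard form, (n_i + 1) / (n + m) for category i, where n_i is the count observed in category i, n is the total count and m is the number of categories; the function name `generalised_rule` is illustrative.

```python
from fractions import Fraction
from typing import Sequence


def generalised_rule(counts: Sequence[int]) -> list[Fraction]:
    """Predictive probability (n_i + 1) / (n + m) for each of the m categories."""
    m = len(counts)          # number of known possible outcomes
    n = sum(counts)          # total number of observations
    return [Fraction(c + 1, n + m) for c in counts]


# Before any data (n = 0) every category gets 1/m, as indifference would assign.
print(generalised_rule([0, 0, 0]))

# With m = 2 the original rule of succession (s + 1) / (n + 2) is recovered.
print(generalised_rule([3, 7]))

# The predictive probabilities always sum to one.
assert sum(generalised_rule([5, 0, 2, 1])) == 1
```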

This indicates that merely knowing that more than two outcomes are possible is relevant information when collapsing these categories down to just two.
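A small numerical illustration of this point, under the assumption that the generalised rule takes its standard form (count + 1) / (n + m): the same 3 successes in 10 trials yield a different predictive probability depending on whether 2 or 3 outcomes are known to be possible, even if the third outcome was never observed. The helper `next_prob` is hypothetical.

```python
from fractions import Fraction


def next_prob(count: int, n: int, m: int) -> Fraction:
    """Predictive probability (count + 1) / (n + m) for one category out of m."""
    return Fraction(count + 1, n + m)


s, n = 3, 10

# Two known outcomes: success or failure.
binary = next_prob(s, n, m=2)

# Three known outcomes, the third never observed, then collapsed to
# "success" versus "anything else".
three_way = next_prob(s, n, m=3)
not_success = 1 - three_way

print(binary, three_way, not_success)
assert three_way < binary   # knowing about the extra outcome lowers the estimate
```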

In the generalisation section, this was made very explicit by including the prior information Im in the calculations.

Thus, the rule of succession applies only when all that is known about a phenomenon, prior to observing any data, is that there are m possible outcomes.

If the rule of succession is applied in problems where this does not accurately describe the prior state of knowledge, then it may give counter-intuitive results.

In principle (see Cromwell's rule), no possibility should have its probability (or its pseudocount) set to zero, since nothing in the physical world should be assumed strictly impossible (though it may be)—even if contrary to all observations and current theories.

Indeed, Bayes' rule takes absolutely no account of an observation previously believed to have zero probability: it is still declared impossible.
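This behaviour is simple to see in a discrete update. The following is a minimal sketch with made-up hypothesis names and numbers, chosen only for illustration: a hypothesis with prior probability zero stays at zero even when the data fit it far better than the alternatives.

```python
def bayes_update(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Posterior over a finite set of hypotheses via Bayes' rule."""
    unnormalised = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalised.values())
    return {h: value / total for h, value in unnormalised.items()}


# Illustrative numbers: H3 explains the observation best but has prior 0.
prior = {"H1": 0.7, "H2": 0.3, "H3": 0.0}
likelihood = {"H1": 0.01, "H2": 0.02, "H3": 0.99}

posterior = bayes_update(prior, likelihood)
print(posterior)

# Repeating the same observation many times does not rescue H3.
for _ in range(50):
    posterior = bayes_update(posterior, likelihood)
assert posterior["H3"] == 0.0
```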

In fact Larry Bretthorst shows that including the possibility of "something else" in the hypothesis space makes no difference to the relative probabilities of the other hypotheses; it simply renormalises them to add up to a value less than 1.
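The renormalisation claim can be illustrated with a toy calculation. This is a sketch under stated assumptions, not Bretthorst's own derivation: the priors, likelihoods, and the prior weight `eps` given to "something else" are invented for the example, and the original priors are scaled by (1 - eps) to make room for the new hypothesis.

```python
from math import isclose


def bayes_update(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Posterior over a finite set of hypotheses via Bayes' rule."""
    unnormalised = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalised.values())
    return {h: value / total for h, value in unnormalised.items()}


# Original hypothesis space (illustrative numbers).
prior = {"H1": 0.6, "H2": 0.4}
likelihood = {"H1": 0.2, "H2": 0.5}
base = bayes_update(prior, likelihood)

# Extended space: reserve prior mass eps for "something else".
eps = 0.05
ext_prior = {h: (1 - eps) * p for h, p in prior.items()}
ext_prior["something else"] = eps
ext_likelihood = {**likelihood, "something else": 0.3}
extended = bayes_update(ext_prior, ext_likelihood)

# The ratio H1 : H2 is unchanged; only their total drops below 1.
assert isclose(base["H1"] / base["H2"], extended["H1"] / extended["H2"])
assert extended["H1"] + extended["H2"] < 1.0
print(base, extended)
```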