Oversampled binary image sensor

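An oversampled binary image sensor is an image sensor whose operation is reminiscent of traditional photographic film.[1][2] Each pixel in the sensor has a binary response, giving only a one-bit quantized measurement of the local light intensity.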

The response function of the image sensor is non-linear and similar to a logarithmic function, which makes the sensor suitable for high dynamic range imaging.[1]

Before the advent of digital image sensors, photography, for most of its history, used film to record light information.

At the heart of every photographic film are a large number of light-sensitive grains of silver-halide crystals.

In the subsequent film development process, exposed grains, due to their altered chemical properties, are converted to silver metal, contributing to opaque spots on the film; unexposed grains are washed away in a chemical bath, leaving behind the transparent regions on the film.

Thus, in essence, photographic film is a binary imaging medium, using local densities of opaque silver grains to encode the original light intensity information.

Thanks to the small size and large number of these grains, one hardly notices this quantized nature of film when viewing it at a distance, observing only a continuous gray tone.

The oversampled binary image sensor is reminiscent of photographic film.

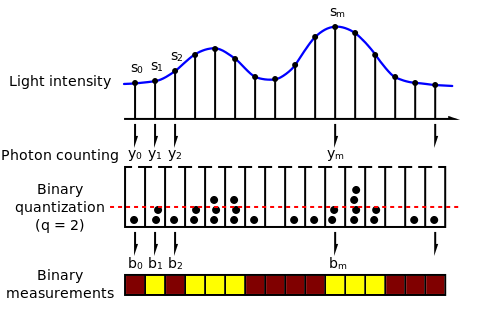

Like a silver-halide grain, each pixel has a binary response, giving only a one-bit quantized measurement of the local light intensity.

At the start of the exposure period, all pixels are set to 0. A pixel is then set to 1 if the number of photons reaching it during the exposure is at least equal to a given threshold $q$.
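The thresholding rule can be illustrated with a small simulation. This is a minimal sketch, not code from the cited works: it assumes photon arrivals at a pixel follow a Poisson distribution (the usual model for light) and draws counts with Knuth's multiplication method. The function names `poisson_sample` and `binary_pixel` are hypothetical.

```python
import math
import random


def poisson_sample(mean: float, rng: random.Random) -> int:
    """Draw a Poisson-distributed photon count using Knuth's multiplication method.

    Adequate for the small per-pixel means expected in tiny binary pixels; the
    loop length grows with `mean`, so this is not meant for large exposures.
    """
    limit = math.exp(-mean)
    count = 0
    product = rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def binary_pixel(exposure: float, q: int, rng: random.Random) -> int:
    """One-bit pixel: starts at 0 and reads 1 if at least q photons arrive."""
    photons = poisson_sample(exposure, rng)
    return 1 if photons >= q else 0


if __name__ == "__main__":
    rng = random.Random(0)
    trials = 20_000
    for q in (1, 2, 3):
        ones = sum(binary_pixel(1.0, q, rng) for _ in range(trials))
        print(f"q={q}: fraction of ones = {ones / trials:.3f}")
```

With a mean of one photon per pixel and $q = 1$, the fraction of ones should come out close to $1 - e^{-1} \approx 0.632$; raising $q$ makes the pixel less likely to fire.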

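Because such pixels can be made far smaller than the diffraction limit of light, the sensor spatially oversamples the optical resolution of the light field. Intuitively, one can exploit this spatial redundancy to compensate for the information loss due to one-bit quantization, as is done in oversampling delta-sigma converters.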
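The idea that the local density of ones encodes intensity, much like the density of developed grains on film, can be sketched as follows. This assumes a threshold $q = 1$, a light intensity that is constant within each coarse region, and an oversampling factor $K$ (called `oversampling` here) by which each region is split into binary subpixels; the exposure values and all function names are hypothetical.

```python
import math
import random


def binary_capture(intensities: list[float], oversampling: int, rng: random.Random) -> list[int]:
    """Spread each coarse exposure value over `oversampling` binary subpixels (threshold q = 1).

    A subpixel with exposure x reads 1 with probability P(Poisson(x) >= 1) = 1 - exp(-x).
    """
    bits: list[int] = []
    for s in intensities:
        p_one = -math.expm1(-s / oversampling)  # 1 - exp(-s / K), computed accurately
        bits.extend(1 if rng.random() < p_one else 0 for _ in range(oversampling))
    return bits


def ones_density(bits: list[int], oversampling: int) -> list[float]:
    """Fraction of ones in each block of `oversampling` subpixels (like grain density on film)."""
    return [
        sum(bits[i:i + oversampling]) / oversampling
        for i in range(0, len(bits), oversampling)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    K = 4096  # hypothetical spatial oversampling factor
    ramp = [10.0, 100.0, 1000.0, 10000.0]  # hypothetical mean photons per coarse region
    bits = binary_capture(ramp, K, rng)
    print(ones_density(bits, K))
```

Each individual bit says almost nothing, but the block densities rise monotonically along the intensity ramp, which is the redundancy that reconstruction methods exploit.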

The miniaturization of camera systems calls for the continuous shrinking of pixel sizes.

At a certain point, however, the limited full-well capacity of small pixels (i.e., the maximum number of photoelectrons a pixel can hold) becomes a bottleneck, yielding very low signal-to-noise ratios (SNRs) and poor dynamic range.

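By assuming that light intensities remain constant within a short exposure period, the incoming light intensity field can be modeled as a function $\lambda_0(x)$ of the spatial variable $x$ only.

After passing through the optical system, the light field $\lambda_0(x)$ gets filtered by the lens, which acts like a linear system with a given impulse response.

Due to imperfections in the lens (e.g., aberrations), this impulse response, known as the point spread function (PSF) of the optical system, cannot be a Dirac delta, thus imposing a limit on the resolution of the observable light field.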
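Treating the lens as a linear, shift-invariant system means the observed field is the incoming field convolved with the PSF. The sketch below works on a sampled 1D field and uses a normalized Gaussian kernel purely as a stand-in PSF (an assumption for illustration; a diffraction-limited PSF is actually an Airy pattern). The names `gaussian_psf` and `blur` are hypothetical.

```python
import math


def gaussian_psf(sigma: float, radius: int) -> list[float]:
    """Sampled Gaussian kernel normalized to unit sum (a stand-in for a real lens PSF)."""
    weights = [math.exp(-0.5 * (t / sigma) ** 2) for t in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(field: list[float], psf: list[float]) -> list[float]:
    """Discrete convolution of a sampled light field with a PSF, same length as `field`.

    Samples outside the field are treated as zero (no light).
    """
    half = len(psf) // 2
    out = []
    for i in range(len(field)):
        acc = 0.0
        for j, h in enumerate(psf):
            k = i + half - j
            if 0 <= k < len(field):
                acc += h * field[k]
        out.append(acc)
    return out


if __name__ == "__main__":
    psf = gaussian_psf(sigma=1.5, radius=5)
    point = [0.0] * 21
    point[10] = 1.0  # an ideal point source
    print([round(v, 3) for v in blur(point, psf)])
    stripes = [1.0 if i % 2 == 0 else 0.0 for i in range(21)]  # finest possible detail
    print([round(v, 3) for v in blur(stripes, psf)])
```

A point source comes out as a spread-out copy of the PSF, and the finest alternating pattern is smoothed to a nearly constant gray, which is the loss of resolution described above.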

However, a more fundamental physical limit is due to light diffraction.[9] As a result, even if the lens is ideal, the PSF is still unavoidably a small blurry spot.

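In optics, such a diffraction-limited spot is often called the Airy disk,[9] whose radius $R_a$ can be computed as $R_a = 1.22\, w f$, where $w$ is the wavelength of the light and $f$ is the F-number of the optical system. Because of the lowpass (smoothing) nature of the PSF, the light field $\lambda(x)$ that reaches the sensor has a finite spatial resolution, i.e., a finite number of degrees of freedom per unit space.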
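The Airy disk radius $R_a = 1.22\, w f$ (with $w$ the wavelength and $f$ the F-number) gives a quick way to compare the optical blur with a pixel pitch. The sketch below evaluates it for green light at 550 nm through an f/2.8 lens and counts how many pixels fit across the disk; the wavelength, F-number, and pixel pitches are hypothetical example values, not figures from the cited works.

```python
def airy_radius_um(wavelength_nm: float, f_number: float) -> float:
    """Airy disk radius R_a = 1.22 * w * f, returned in micrometres."""
    wavelength_um = wavelength_nm / 1000.0
    return 1.22 * wavelength_um * f_number


def pixels_across_airy_disk(wavelength_nm: float, f_number: float, pitch_um: float) -> float:
    """How many pixels of the given pitch fit across the Airy disk diameter."""
    return 2.0 * airy_radius_um(wavelength_nm, f_number) / pitch_um


if __name__ == "__main__":
    # Hypothetical example: green light (550 nm) through an f/2.8 lens.
    print(f"R_a = {airy_radius_um(550, 2.8):.3f} um")
    for pitch in (1.0, 0.2, 0.05):  # hypothetical pixel pitches in micrometres
        n = pixels_across_airy_disk(550, 2.8, pitch)
        print(f"pitch {pitch} um -> {n:.1f} pixels across the disk")
```

Here $R_a \approx 1.88\ \mu\text{m}$. A deep-sub-micrometre pitch places dozens of pixels across a single diffraction-limited spot, which is the spatial oversampling the sensor relies on.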

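The sensor pixels accumulate local exposure values $s_m$. Depending on these local values, each pixel (depicted as "buckets" in the figure) collects a different number of photons hitting its surface.

The relation between $s_m$ and the number of photons $y_m$ impinging on the $m$th pixel during the exposure period is stochastic: $y_m$ can be modeled as a realization of a Poisson random variable whose intensity parameter is equal to $s_m$.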
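Combining the Poisson photon model with the one-bit threshold $q$ gives the distribution of each pixel's output. Writing $s_m$ for the exposure of the $m$th pixel, $y_m$ for its photon count, and $b_m$ for its binary output (notation introduced here for illustration, with $b_m = 1$ exactly when $y_m \ge q$), a short derivation is:

```latex
\Pr(b_m = 0) = \Pr(y_m < q) = \sum_{k=0}^{q-1} \frac{e^{-s_m} s_m^{k}}{k!},
\qquad
\Pr(b_m = 1) = 1 - \sum_{k=0}^{q-1} \frac{e^{-s_m} s_m^{k}}{k!}.
```

For $q = 1$ this reduces to $\Pr(b_m = 1) = 1 - e^{-s_m}$, so each output is a Bernoulli variable whose success probability grows with exposure and saturates smoothly toward 1.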

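If temporal oversampling is allowed, i.e., taking multiple consecutive and independent frames without changing the total exposure time $\tau$, then under certain conditions the binary sensor performs equivalently to a sensor with the same amount of spatial oversampling. Spatial and temporal oversampling can therefore be traded off against each other.

This is important in practice, since technology usually limits both the size of the pixels and the exposure time.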
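One set of conditions under which the trade-off holds exactly can be checked numerically. The assumptions are that the light is constant over the pooled pixels and over the total exposure time $\tau$, that the frames are independent, and that the total exposure $s$ is split evenly. Under these assumptions, 4 pixels read over 8 frames give the same distribution of the total number of ones as 32 pixels read in one frame. The cited work may state its conditions differently, and all names below are hypothetical.

```python
import math


def prob_one(exposure: float, q: int) -> float:
    """P(Poisson(exposure) >= q): probability that a binary pixel reads 1."""
    term = math.exp(-exposure)  # P(y = 0)
    cdf = 0.0
    for k in range(q):
        cdf += term                 # add P(y = k)
        term *= exposure / (k + 1)  # P(y = k + 1) from P(y = k)
    return 1.0 - cdf


def binomial_pmf(n: int, p: float) -> list[float]:
    return [math.comb(n, k) * p**k * (1.0 - p) ** (n - k) for k in range(n + 1)]


def convolve(a: list[float], b: list[float]) -> list[float]:
    """Distribution of the sum of two independent counts."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def temporal_pmf(s: float, pixels: int, frames: int, q: int) -> list[float]:
    """Total ones from `pixels` binary pixels read out over `frames` independent frames.

    Each frame lasts tau / frames, so each pixel sees s / (pixels * frames) per frame.
    """
    per_frame = binomial_pmf(pixels, prob_one(s / (pixels * frames), q))
    total = [1.0]
    for _ in range(frames):
        total = convolve(total, per_frame)
    return total


def spatial_pmf(s: float, pixels: int, q: int) -> list[float]:
    """Total ones from `pixels` binary pixels in a single frame of length tau."""
    return binomial_pmf(pixels, prob_one(s / pixels, q))


if __name__ == "__main__":
    a = temporal_pmf(3.0, pixels=4, frames=8, q=2)  # 4 pixels x 8 frames
    b = spatial_pmf(3.0, pixels=32, q=2)            # 32 pixels x 1 frame
    print(max(abs(x - y) for x, y in zip(a, b)))
```

The temporal distribution is built by summing independent frames, not by reusing the spatial formula, so the agreement (up to rounding) shows that only the total number of binary readings matters under these assumptions.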

For the oversampled binary image sensor, the dynamic range is defined not for a single pixel but for a group of pixels, which makes the dynamic range high.
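The group-level response can be made concrete. Assume a group of $K$ binary pixels shares a total exposure $s$ evenly. The expected number of ones is then $K \Pr(\text{Poisson}(s/K) \ge q)$, a compressive curve that approaches $K$ only gradually. For $q = 1$, the expected count comes within one of saturation only at $s = K \ln K$. For comparison, a hypothetical linear pixel whose full-well capacity is $K$ photons would saturate at $s = K$. This is a rough illustration under stated assumptions, and the group size and function names are hypothetical.

```python
import math


def prob_one(exposure: float, q: int) -> float:
    """P(Poisson(exposure) >= q): probability that a binary pixel reads 1."""
    term = math.exp(-exposure)
    cdf = 0.0
    for k in range(q):
        cdf += term
        term *= exposure / (k + 1)
    return 1.0 - cdf


def group_response(s: float, group_size: int, q: int = 1) -> float:
    """Expected number of ones when total exposure s is shared by `group_size` binary pixels."""
    return group_size * prob_one(s / group_size, q)


def near_saturation_exposure_q1(group_size: int) -> float:
    """For q = 1, the exposure at which the expected count reaches group_size - 1.

    Solves K * (1 - exp(-s / K)) = K - 1, giving s = K * ln(K).
    """
    return group_size * math.log(group_size)


if __name__ == "__main__":
    K = 4096  # hypothetical group size
    for s in (1, 10, 100, 1_000, 10_000, 100_000):
        print(f"s = {s:>7}: expected ones = {group_response(s, K):8.1f} of {K}")
    print(f"within one count of saturation at s = {near_saturation_exposure_q1(K):.0f}")
```

The response stays nearly linear for small exposures and compresses large ones instead of clipping them abruptly, which is what gives the group its wide dynamic range.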

One of the most important challenges with the use of an oversampled binary image sensor is the reconstruction of the light intensity from the binary measurements. Maximum likelihood estimation can be used to solve this problem.[2]
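For the special case of threshold $q = 1$ and a light intensity that is constant over all pooled readings (pixels or frames), maximum likelihood estimation has a closed form. With $n$ ones out of $N$ readings, each reading is 1 with probability $p = 1 - e^{-s/N}$. The maximum-likelihood estimate of $p$ is $n/N$, so by invariance $\hat{s} = -N \ln(1 - n/N)$. This is a simplified sketch that ignores the lens blur and spatial variation of the light; it is not the full reconstruction method of the cited works. The function name and the example numbers are hypothetical.

```python
import math
import random


def mle_exposure_q1(ones: int, total: int) -> float:
    """Maximum-likelihood estimate of total exposure s from `ones` out of `total` binary readings.

    Assumes threshold q = 1 and a constant light intensity over all `total`
    readings, so each reading is 1 with probability p = 1 - exp(-s / total).
    The MLE of p is ones / total, and by invariance s_hat = -total * ln(1 - ones / total).
    """
    if total <= 0 or not 0 <= ones <= total:
        raise ValueError("need total > 0 and 0 <= ones <= total")
    if ones == total:
        return math.inf  # every reading is 1: the likelihood keeps growing with s
    return -total * math.log1p(-ones / total)


if __name__ == "__main__":
    rng = random.Random(0)
    total, s_true = 4096, 1000.0  # hypothetical: 4096 readings of one scene point
    p = -math.expm1(-s_true / total)
    ones = sum(rng.random() < p for _ in range(total))
    print(f"ones = {ones}, estimated s = {mle_exposure_q1(ones, total):.1f} (true {s_true})")
```

The estimate is unbounded when every reading is 1, which reflects the fact that a fully saturated group carries no upper bound on the exposure.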

Fig. 4 shows the results of reconstructing the light intensity from 4096 binary images taken by a single-photon avalanche diode (SPAD) camera.[10]

More sophisticated algorithms can achieve better reconstruction quality with fewer temporal measurements, as well as faster, hardware-friendly implementations.