Artificial intelligence art

[1] During the AI boom of the 2020s, text-to-image models such as Midjourney, DALL-E, Stable Diffusion, and FLUX.1 became widely available to the public, allowing non-artists to quickly generate imagery with little effort.

[2][3] Commentary about AI art in the 2020s has often focused on issues related to copyright, deception, defamation, and its impact on more traditional artists, including technological unemployment.

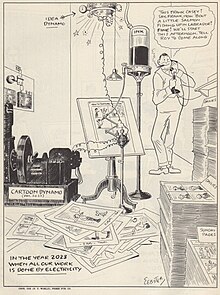

The concept of automated art dates back at least to the automata of ancient Greek civilization, where inventors such as Daedalus and Hero of Alexandria were described as having designed machines capable of writing text, generating sounds, and playing music.

[4][5] Early experiments were driven by the idea that computers, beyond performing logical operations, could generate aesthetically pleasing works, offering a new dimension to creativity.

[6] Similarly, Ada Lovelace, best known for her work on the Analytical Engine, began in her notes to conceptualize the idea that "computing operations" could be used to generate music and poems.

This concept resulted in what is now referred to as "The Lovelace Effect," which gives a concrete set of tools to analyze situations where a computer's behavior is viewed by users as creative.

[9] Shortly after, the academic discipline of artificial intelligence was founded at a research workshop at Dartmouth College in 1956 and has experienced several waves of advancement and optimism in the decades since.

[10] Since its founding, researchers in the field have raised philosophical and ethical arguments about the nature of the human mind and the consequences of creating artificial beings with human-like intelligence; these issues had previously been explored in myth, fiction, and philosophy since antiquity.

[14] AARON uses a symbolic rule-based approach to generate technical images in the era of GOFAI programming, and it was developed by Cohen with the goal of being able to code the act of drawing.

Deep learning, characterized by a multi-layer structure that attempts to mimic the human brain, first came about in the 2010s and caused a significant shift in the world of AI art.

[31] During the deep learning era, the main model families used for generative art have been autoregressive models, diffusion models, GANs, and normalizing flows. In 2014, Ian Goodfellow and colleagues at Université de Montréal developed the generative adversarial network (GAN), a type of deep neural network capable of learning to mimic the statistical distribution of input data such as images.

[32] Unlike previous algorithmic art that followed hand-coded rules, generative adversarial networks could learn a specific aesthetic by analyzing a dataset of example images.
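
The adversarial training idea behind GANs can be illustrated with a minimal sketch of the two losses involved. This is not code from any particular system: the function names and the example probabilities are hypothetical, and the generator loss shown is the commonly used "non-saturating" variant rather than the only possible formulation. The discriminator is rewarded for scoring dataset images as real and generated images as fake, while the generator is rewarded when its samples are scored as real.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy that the discriminator tries to minimize.

    d_real: discriminator outputs (probability of "real") on dataset images.
    d_fake: discriminator outputs on generator samples.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator scores early and late in training.
early_fake = [0.1, 0.2]   # fakes are easy to spot
late_fake = [0.5, 0.5]    # fakes are indistinguishable from real images

print(generator_loss(early_fake) > generator_loss(late_fake))  # True
```

As the generator improves, the discriminator's scores on generated images drift toward 0.5, which is the point at which it can no longer tell generated images from the training data.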

[41] In 2018, an auction sale of artificial intelligence art was held at Christie's in New York where the AI artwork Edmond de Belamy (a pun on Goodfellow's name) sold for US$432,500, which was almost 45 times higher than its estimate of US$7,000–10,000.

[47] A year later, in 2019, Stephanie Dinkins won the Creative Capital award for her creation of an evolving artificial intelligence based on the "interests and culture(s) of people of color."

Variables, including classifier-free guidance (CFG) scale, seed, steps, sampler, scheduler, denoise, upscaler, and encoder, are sometimes available for adjustment.
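
Two of these variables, the CFG scale and the seed, can be illustrated with a short sketch. It assumes the usual formulation of classifier-free guidance, in which the model's prediction without the prompt is blended with its prediction with the prompt; the function names and the three-element "predictions" are hypothetical stand-ins, not the API of any specific image generator.

```python
import random

def apply_cfg(uncond, cond, scale):
    """Blend unconditional and prompt-conditioned noise predictions.

    scale = 0 ignores the prompt, scale = 1 returns the conditional
    prediction unchanged, and scale > 1 pushes further toward the prompt.
    """
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]

def initial_noise(seed, size):
    """Draw the starting noise from a seeded generator (same seed, same noise)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

uncond = [0.0, 1.0, -1.0]   # hypothetical prediction without the prompt
cond = [1.0, 1.0, 0.0]      # hypothetical prediction with the prompt

print(apply_cfg(uncond, cond, 1.0))   # [1.0, 1.0, 0.0]
print(apply_cfg(uncond, cond, 7.5))   # [7.5, 1.0, 6.5]
print(initial_noise(42, 3) == initial_noise(42, 3))  # True
```

A higher CFG scale makes the output follow the prompt more closely at the cost of variety, while reusing a seed with otherwise identical settings reproduces the same starting noise.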

AI has the potential for a societal transformation, which may include enabling amateurs to expand noncommercial niche genres (such as cyberpunk derivatives like solarpunk), novel entertainment, fast prototyping,[88] increased art-making accessibility,[88] and greater artistic output per unit of effort, expense, or time,[88] for example by generating drafts, draft refinements, and image components (inpainting).

[88] Harvard Kennedy School researchers voiced concerns about synthetic media serving as a vector for political misinformation soon after studying the proliferation of AI art on the X platform.

For example, Lensa, an AI app that trended on TikTok in 2023, was known to lighten black skin, make users thinner, and generate hypersexualized images of women.

[102] Melissa Heikkilä, a senior reporter at MIT Technology Review, shared the findings of an experiment using Lensa, noting that the generated avatars did not resemble her and often depicted her in a hypersexualized manner.

[103] Experts suggest that such outcomes can result from biases in the datasets used to train AI models, which can sometimes contain imbalanced representations, including hypersexual or nude imagery.

[111] In 1985, intellectual property law professor Pamela Samuelson argued that US copyright should allocate algorithmically generated artworks to the user of the computer program.

[113] In 2022, coinciding with the rising availability of consumer-grade AI image generation services, popular discussion renewed over the legality and ethics of AI-generated art.

[115][116] In December 2022, users of the portfolio platform ArtStation staged an online protest against non-consensual use of their artwork within datasets; this resulted in opt-out services, such as "Have I Been Trained?"

[125] A tool built by Simon Willison allowed people to search 0.5% of the training data for Stable Diffusion V1.1, i.e., 12 million of the 2.3 billion instances from LAION 2B.

[132] Artist Sarah Andersen, who previously had her art copied and edited to depict Neo-Nazi beliefs, stated that the spread of hate speech online can be worsened by the use of image generators.

[134] After winning the "Creative" category of the Open competition at the 2023 Sony World Photography Awards, Boris Eldagsen stated that his entry was actually created with artificial intelligence.

[148] As generative AI image software such as Stable Diffusion and DALL-E continues to advance, concerns about the problems these systems pose for creativity and artistry have grown.

[126] A 2022 case study found that AI-produced images created by technology like DALL-E caused some traditional artists to be concerned about losing work, while others used it to their advantage and viewed it as a tool.

[126][151] For example, Polish digital artist Greg Rutkowski has stated that it is more difficult to search for his work online because many of the images in the results are AI-generated specifically to mimic his style.

[154] In the same vein, Disney released Secret Invasion, a Marvel TV show with an AI-generated intro, on Disney+ in 2023, causing concern and backlash regarding the idea that artists could be made obsolete by machine-learning tools.

1960's art of cow getting abducted by UFO in midwest