Order statistic

For example, suppose that four numbers are observed or recorded, resulting in a sample of size 4.

The first order statistic (or smallest order statistic) is always the minimum of the sample, that is, where, following a common convention, we use upper-case letters to refer to random variables, and lower-case letters (as above) to refer to their actual observed values.

Similarly, for a sample of size n, the nth order statistic (or largest order statistic) is the maximum, that is, The sample range is the difference between the maximum and minimum.

The sample median may or may not be an order statistic, since there is a single middle value only when the number n of observations is odd.

When the random variables X1, X2, ..., Xn form a sample they are independent and identically distributed.

In general, the random variables X1, ..., Xn can arise by sampling from more than one population.

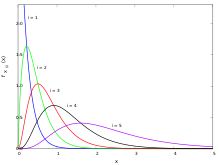

The corresponding probability density function may be derived from this result, and is found to be Moreover, there are two special cases, which have CDFs that are easy to compute.

is equal to[3] that is, the kth order statistic of the uniform distribution is a beta-distributed random variable.

This equals (refer to multinomial distribution for details) and the result follows.

One reasons in an entirely analogous way to derive the higher-order joint distributions.

It is also related with another particularity of order statistics of uniform random variables: It follows from the BRS-inequality that the maximum expected number of uniform U(0,1] random variables one can choose from a sample of size n with a sum up not exceeding

a random sample of size n from an exponential distribution with parameter λ, the order statistics X(i) for i = 1,2,3, ..., n each have distribution where the Zj are iid standard exponential random variables (i.e. with rate parameter 1).

[5][6] The Laplace transform of order statistics may be sampled from an Erlang distribution via a path counting method [clarification needed].

, and we can use the substitutions and to derive the following probability density functions for the order statistics of a sample of size n drawn from the distribution of X: An interesting question is how well the order statistics perform as estimators of the quantiles of the underlying distribution.

In that case, the sample median is usually defined as the midpoint of the interval delimited by the 3rd and 4th order statistics.

For the uniform distribution, as n tends to infinity, the pth sample quantile is asymptotically normally distributed, since it is approximated by For a general distribution F with a continuous non-zero density at F −1(p), a similar asymptotic normality applies: where f is the density function, and F −1 is the quantile function associated with F. One of the first people to mention and prove this result was Frederick Mosteller in his seminal paper in 1946.

[8] Further research led in the 1960s to the Bahadur representation which provides information about the errorbounds.

In this case, the sample mean, by the central limit theorem, is also asymptotically normally distributed, but with variance σ2/n instead.

This asymptotic analysis suggests that the mean outperforms the median in cases of low kurtosis, and vice versa.

It can be shown that where with Zi being independent identically distributed exponential random variables with rate 1.

Since X/n and Y/n are asymptotically normally distributed by the CLT, our results follow by application of the delta method.

For discrete random variables, the equality need not to hold and we only have The mutual information between uniform order statistics is given by where where

Moments of the distribution for the first order statistic can be used to develop a non-parametric density estimator.

This equation in combination with a jackknifing technique becomes the basis for the following density estimation algorithm, In contrast to the bandwidth/length based tuning parameters for histogram and kernel based approaches, the tuning parameter for the order statistic based density estimator is the size of sample subsets.

Such an estimator is more robust than histogram and kernel based approaches, for example densities like the Cauchy distribution (which lack finite moments) can be inferred without the need for specialized modifications such as IQR based bandwidths.

This is because the first moment of the order statistic always exists if the expected value of the underlying distribution does, but the converse is not necessarily true.

order statistics, three values are first needed, namely The cumulative distribution function of the

In many applications all order statistics are required, in which case a sorting algorithm can be used and the time taken is O(n log n).

Order statistics have a lot of applications in areas as reliability theory, financial mathematics, survival analysis, epidemiology, sports, quality control, actuarial risk, etc.

There is an extensive literature devoted to studies on applications of order statistics in these fields.