Static random-access memory

[2][3] Since the 1960s, when CMOS was invented, SRAM has been the main driver behind each new CMOS-based fabrication process.

[4] In 1964, Arnold Farber and Eugene Schlig, working for IBM, created a hard-wired memory cell, using a transistor gate and tunnel diode latch.

[5][6] In 1965, Benjamin Agusta and his team at IBM created a 16-bit silicon memory chip based on the Farber-Schlig cell, with 84 transistors, 64 resistors, and 4 diodes.

Since SRAM requires more transistors per bit to implement, it is less dense and more expensive than DRAM and also has a higher power consumption during read or write access.

[11] Many categories of industrial and scientific subsystems, automotive electronics, and similar embedded systems contain SRAM, which in this context may be referred to as ESRAM.

It is much easier to work with than DRAM as there are no refresh cycles and the address and data buses are often directly accessible.

nvSRAMs are used in a wide range of situations – networking, aerospace, and medical, among many others[18] – where the preservation of data is critical and where batteries are impractical.

Asynchronous SRAM was used as main memory for small cache-less embedded processors used in everything from industrial electronics and measurement systems to hard disks and networking equipment, among many other applications.

A synchronous memory interface is much faster, as access time can be significantly reduced by employing a pipelined architecture.
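The benefit of pipelining can be illustrated with a simple cycle count. The sketch below is only an illustration: the function name `total_cycles` and the three-stage split (address register, array access, output register) are assumptions, not properties of any particular device.

```python
def total_cycles(num_accesses: int, stages: int, pipelined: bool) -> int:
    """Clock cycles needed to complete `num_accesses` back-to-back reads.

    `stages` is the assumed number of clocked stages an access passes through.
    """
    if num_accesses == 0:
        return 0
    if pipelined:
        # The first result appears after `stages` cycles; after that,
        # one new result completes on every clock cycle.
        return stages + (num_accesses - 1)
    # Without pipelining, each access must finish all stages
    # before the next one can start.
    return stages * num_accesses


if __name__ == "__main__":
    # Hypothetical burst of 8 reads through an assumed 3-stage interface.
    print(total_cycles(8, stages=3, pipelined=False))
    print(total_cycles(8, stages=3, pipelined=True))
```

Under these assumptions the latency of a single access is unchanged, but the sustained rate approaches one access per clock cycle.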

[26] The principal drawback of using 4T SRAM is increased static power due to the constant current flow through one of the pull-down transistors (M1 or M2).

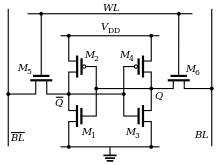

Although it is not strictly necessary to have two bit lines, both the signal and its inverse are typically provided in order to improve noise margins and speed.

During read accesses, the bit lines are actively driven high and low by the inverters in the SRAM cell.

The symmetric structure of SRAMs also allows for differential signaling, which makes small voltage swings more easily detectable.
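A minimal numeric sketch of why differential sensing tolerates small swings follows. The voltages, the 0.1 V swing, the fixed 0.95 V reference, and all function names are hypothetical values chosen for illustration; real sense amplifiers are analog circuits and are not modelled here.

```python
def bit_line_voltages(stored_bit: int, vdd: float = 1.0,
                      swing: float = 0.1, noise: float = 0.0):
    """Return (BL, BLB) voltages during a read of `stored_bit`.

    Both lines are assumed precharged to `vdd`; the cell pulls one of them
    down by `swing`. `noise` is common-mode: it shifts both lines equally.
    """
    bl = vdd if stored_bit == 1 else vdd - swing
    blb = vdd - swing if stored_bit == 1 else vdd
    return bl + noise, blb + noise


def sense_differential(v_bl: float, v_blb: float) -> int:
    # Only the sign of the difference matters, so common-mode noise cancels.
    return 1 if v_bl > v_blb else 0


def sense_single_ended(v_bl: float, v_ref: float) -> int:
    # Comparison against a fixed reference is disturbed by any shift of BL.
    return 1 if v_bl > v_ref else 0


if __name__ == "__main__":
    bl, blb = bit_line_voltages(1, noise=-0.08)
    print(sense_differential(bl, blb))          # differential read of a stored 1
    print(sense_single_ended(bl, v_ref=0.95))   # single-ended read of the same cell
```

In this toy model a common-mode disturbance smaller than the swing itself is enough to corrupt the single-ended read, while the differential comparison still returns the stored bit.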

Another difference from DRAM that contributes to making SRAM faster is that commercial chips accept all address bits at the same time.
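The difference in address delivery can be sketched as follows. This assumes the common DRAM arrangement in which the row and column halves of the address share the same pins and are sent one after the other; the function names and the 8-bit/8-bit split are hypothetical.

```python
def sram_address_transfers(address: int, address_bits: int) -> list[int]:
    """SRAM: the whole address is presented in a single transfer."""
    return [address & ((1 << address_bits) - 1)]


def dram_address_transfers(address: int, row_bits: int, col_bits: int) -> list[int]:
    """Multiplexed DRAM (assumed): row half first, then column half."""
    col = address & ((1 << col_bits) - 1)
    row = (address >> col_bits) & ((1 << row_bits) - 1)
    return [row, col]


if __name__ == "__main__":
    addr = 0xABCD
    print([hex(x) for x in sram_address_transfers(addr, 16)])
    print([hex(x) for x in dram_address_transfers(addr, 8, 8)])
```

The SRAM case needs one address phase per access, whereas the multiplexed case needs two, which adds to the access time.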

This is similar to applying a reset pulse to an SR latch, which causes the flip-flop to change state.

This works because the bit-line input drivers are designed to be much stronger than the relatively weak transistors in the cell itself, so they can easily override the previous state of the cross-coupled inverters.
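The override mechanism can be captured in a small behavioural model. This is a sketch only: `SramCell`, `cell_strength`, and `driver_strength` are hypothetical names, and the strengths are abstract unitless numbers standing in for transistor drive current.

```python
class SramCell:
    """Behavioural model of a cell built from two cross-coupled inverters."""

    def __init__(self, q: int = 0, cell_strength: float = 1.0):
        self.q = q                          # stored value
        self.cell_strength = cell_strength  # assumed drive of the cell transistors

    @property
    def q_bar(self) -> int:
        # The second inverter always holds the complement of q.
        return 1 - self.q

    def write(self, value: int, driver_strength: float) -> bool:
        """Try to force `value` onto the cell through the bit lines.

        The write succeeds only if the bit-line drivers overpower the
        cell's own transistors; otherwise the old state is retained.
        """
        if driver_strength > self.cell_strength:
            self.q = value
            return True
        return False


if __name__ == "__main__":
    cell = SramCell(q=0)
    print(cell.write(1, driver_strength=0.5), cell.q)  # too weak, state kept
    print(cell.write(1, driver_strength=3.0), cell.q)  # strong driver flips the cell
```

The model makes the design constraint explicit: the write drivers must be sized stronger than the cell, while the cell must stay strong enough to hold its state the rest of the time.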

Besides issues with size, a significant challenge of modern SRAM cells is static current leakage.

The cell drains power in both active and idle states, wasting energy without doing any useful work.

[4] With these two issues, it became more challenging to develop energy-efficient and dense SRAM memories, prompting the semiconductor industry to look for alternatives such as STT-MRAM and F-RAM.

[33] It was based on fully depleted silicon-on-insulator (FD-SOI) transistors, had a two-ported SRAM memory rail for synchronous/asynchronous accesses, and used selective virtual ground (SVGND).

The study claimed to reach an ultra-low SVGND current in sleep and read modes by finely tuning its voltage.